github.blog/news-insight...

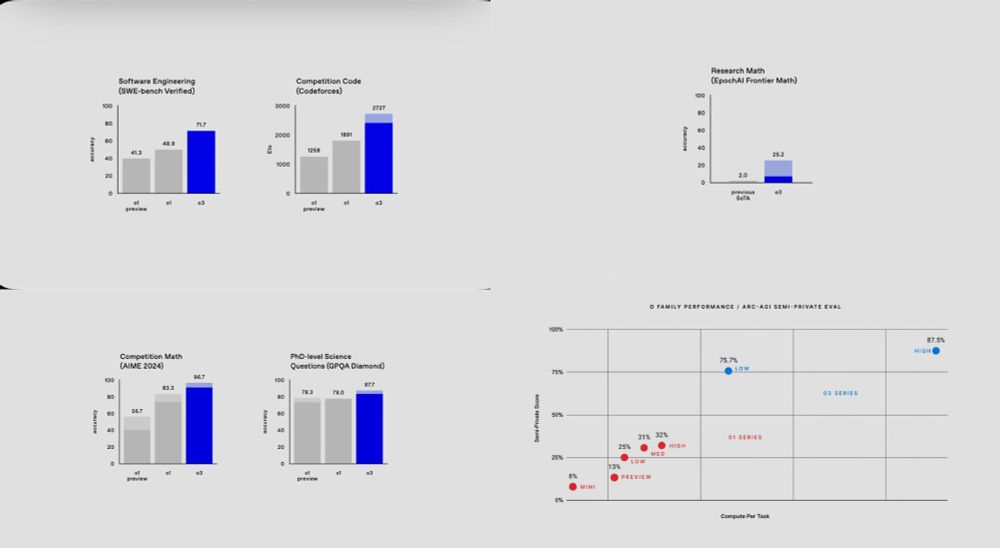

On GPQA, PhDs with internet access scored 34% outside their specialty and up to 81% inside it. o3 scores 87%.

On FrontierMath, the best AI score went from 2% to 25%.

Some other big ones, too

#openai

arcprize.org/blog/oai-o3-...

#spotify #notebooklm

There are now several training configs that together reproduce the training runs that led to the final OLMo 2 models.

In particular, all the training data is available, tokenized and shuffled exactly as we trained on it!

#llm #ai

allenai.org/blog/olmo2

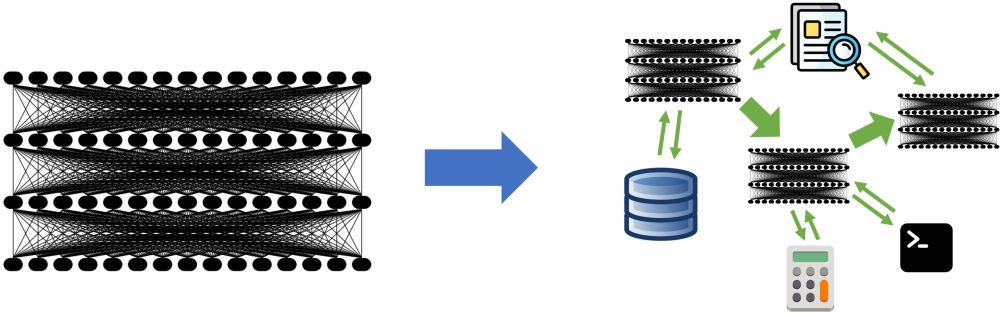

bair.berkeley.edu/blog/2024/02...

#llm #compoundai

#llm #llmops #serverless #inference