Staff Eng @ Datadog

Streaming at: twitch.tv/aj_stuyvenberg

Videos at: youtube.com/@astuyve

I write about serverless minutiae at aaronstuyvenberg.com/

It ate up all available file descriptors on my little t3 box and still ran 18k RPS with a p99 of 0.3479s. Not many services can go from 0 to 18k RPS instantaneously with this p99.

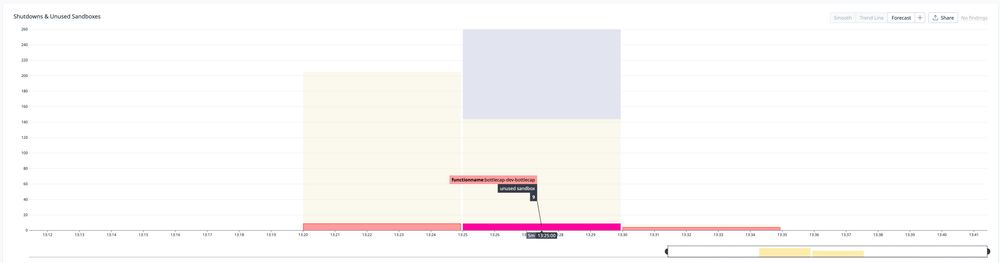

Here's what happens after a 10k request burst. Hundreds of sandbox shutdowns, along with 22 sandboxes which were spun up but never received a request.

Honestly AWS should simply support this natively.

Performance is a competitive advantage that easily slips away if you're not constantly paying attention to it.

#ScyllaDB

Lazy loading is great!

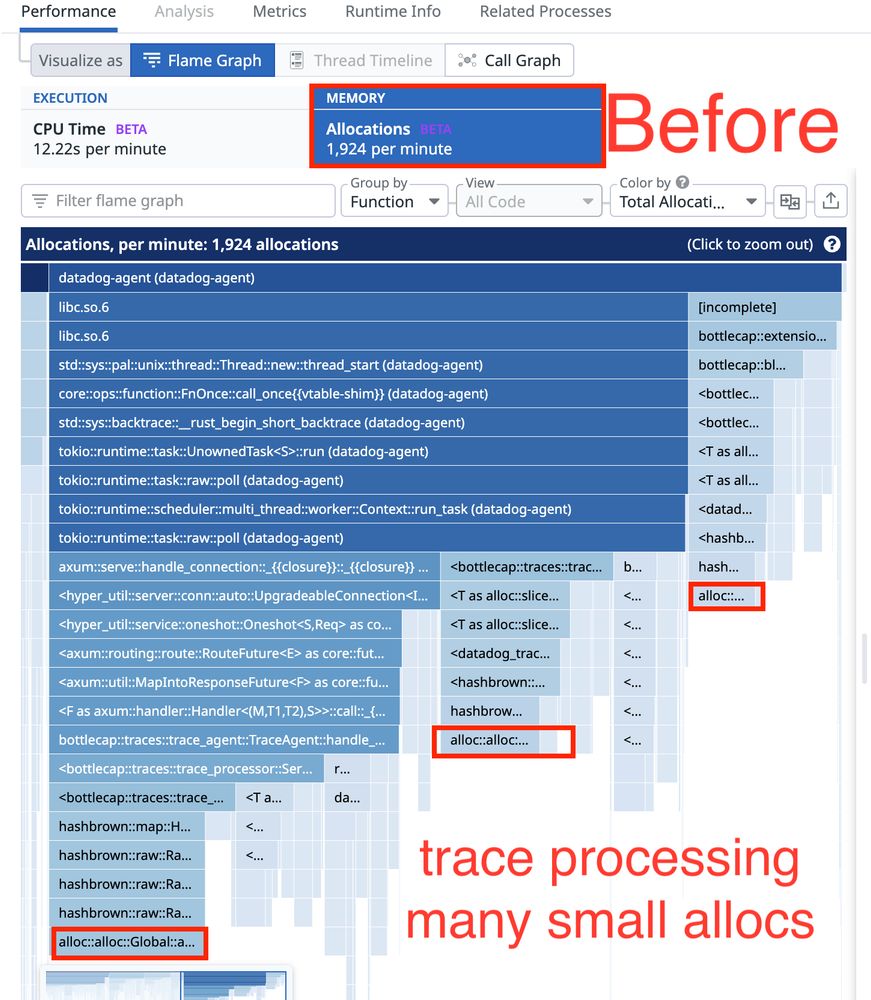

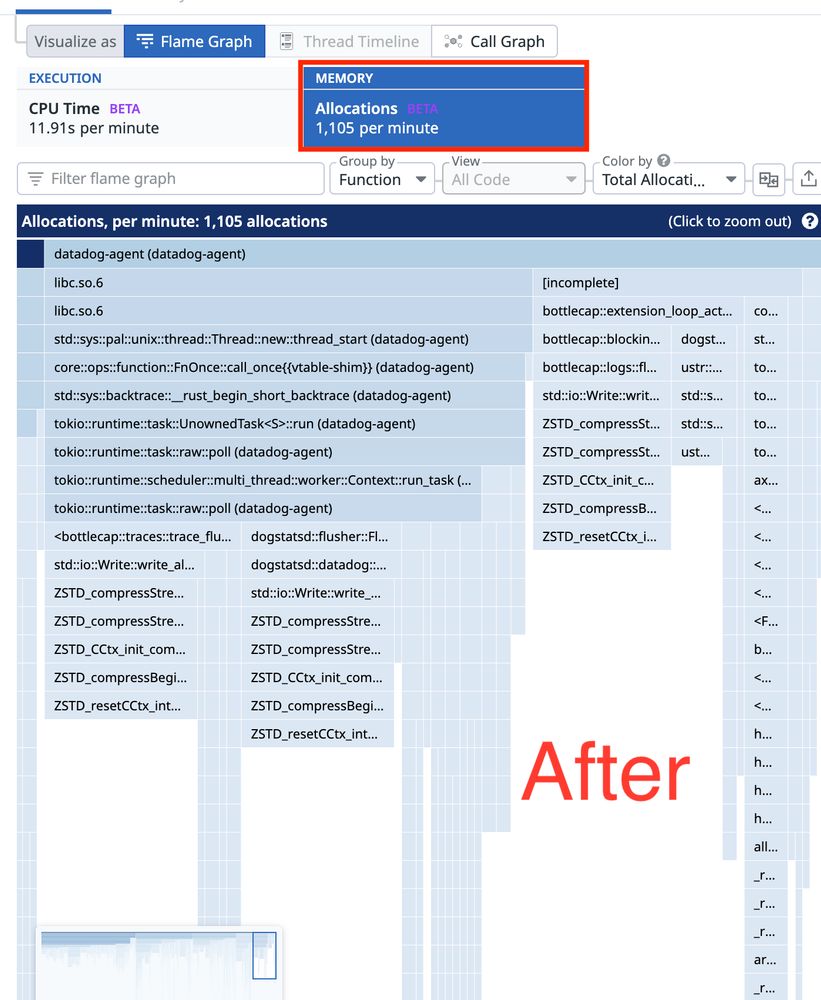

A recent stress test revealed that malloc calls bottlenecked when sending > 100k spans through the API and aggregator pipelines in Lambda.

Today I'll step you through the actual Lambda runtime code which causes this confusing issue, and walk you through how to safely perform async work in Lambda:

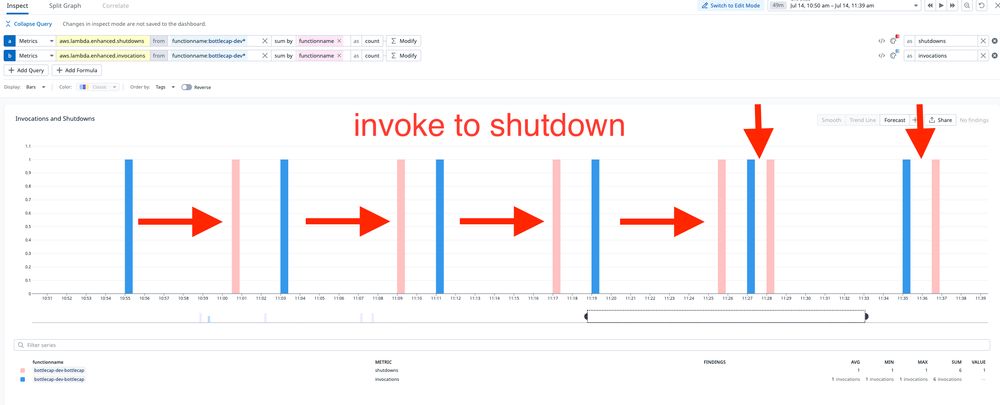

I'm calling this function every 8 or so minutes. At first the gap from invocation to shutdown is about 5-6 minutes, which was the fastest I've observed during previous experiments.

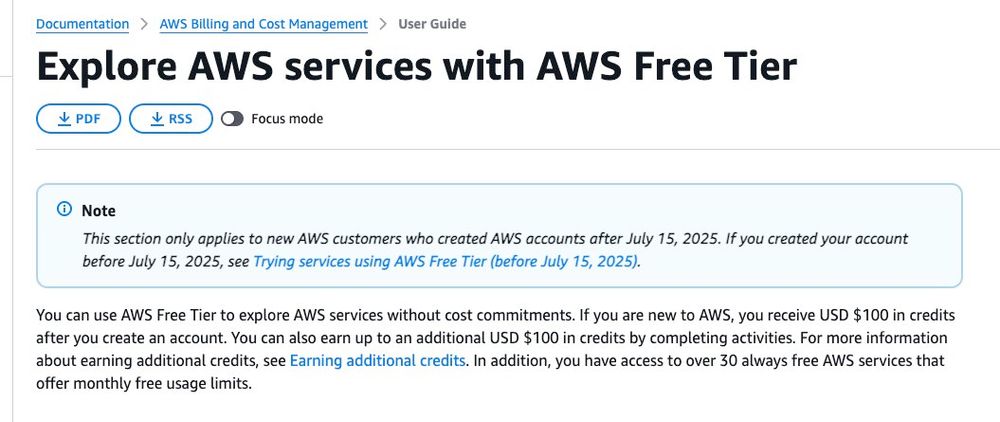

New accounts get $100 in credits to start and can earn $100 exploring AWS resources. You can now explore AWS without worrying about incurring a huge bill. This is great!

docs.aws.amazon.com/awsaccountbi...

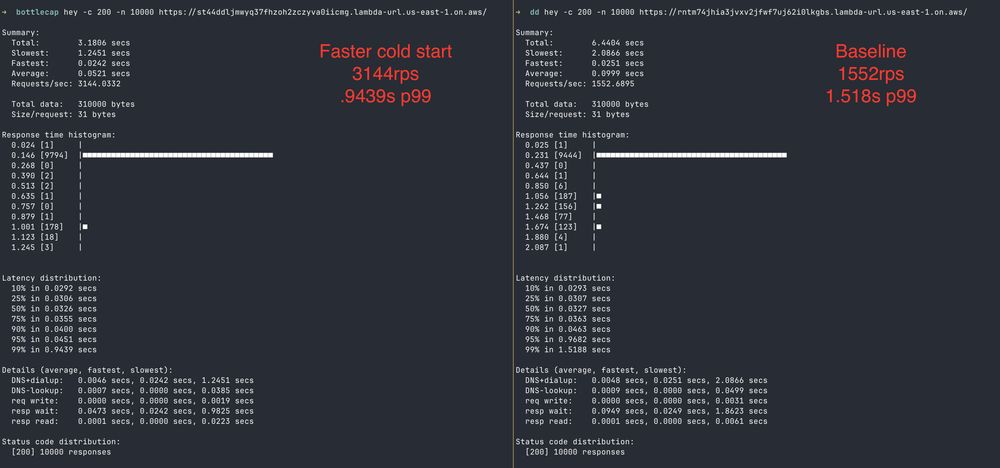

2x higher RPS, and thus a shorter test duration.

p99 from 1.52s -> 0.949s

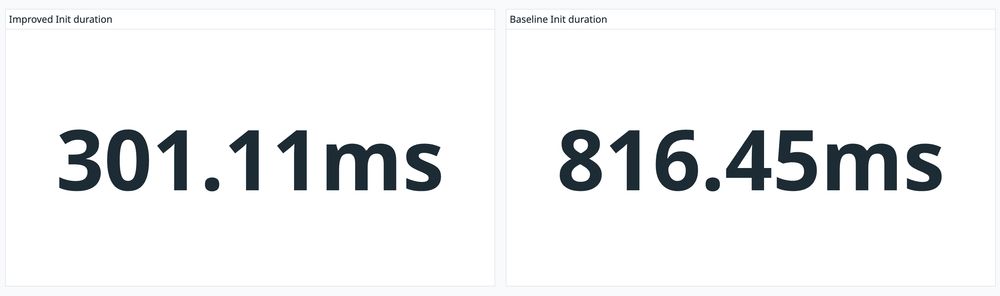

The code and functionality are identical, but improving the cold start from 816ms to 301ms made all the difference.
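
For a sense of scale, here's a quick sketch that turns the figures quoted above into relative improvements. The helper name `percent_reduction` is made up for illustration; only the four input numbers come from the post.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return how much smaller `after` is than `before`, as a percentage."""
    return (before - after) / before * 100


# Figures quoted in the post; units cancel out in the ratio.
cold_start = percent_reduction(816, 301)  # milliseconds
p99 = percent_reduction(1.52, 0.949)      # seconds

print(f"cold start: {cold_start:.1f}% lower")  # cold start: 63.1% lower
print(f"p99: {p99:.1f}% lower")                # p99: 37.6% lower
```

So a cold start that is roughly 63% shorter shows up here as a p99 that is roughly 38% lower.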

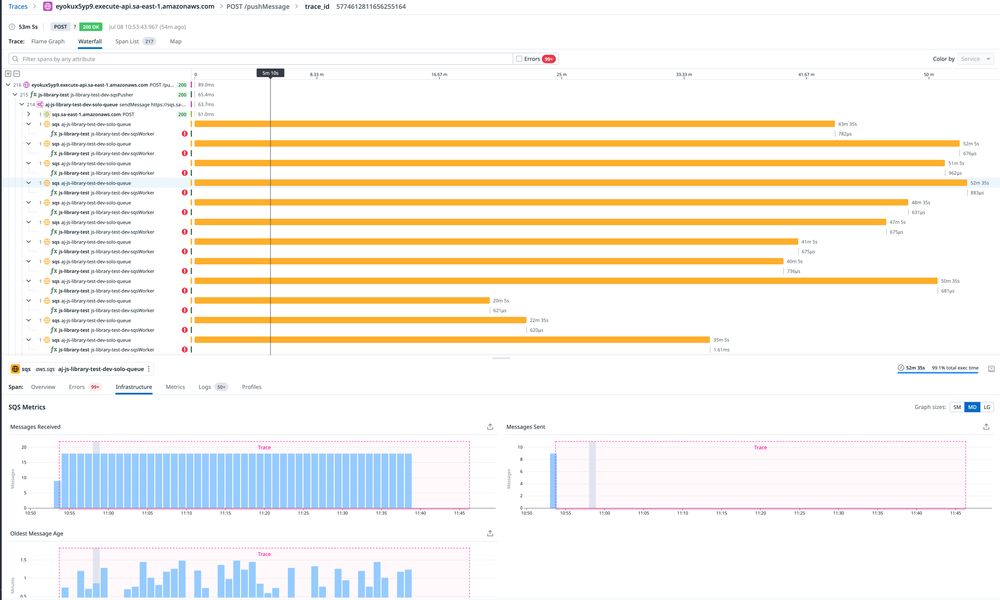

Hundreds of attempts, multiple messages in the queue that aren't burning down, and the average message age ticking up! Seems like common knowledge, but...

The engineering team behind CoScreen just open sourced a new library, dtln-rs, suitable for removing noise from all kinds

After a request spike, Lambda waits ~10m before reaping 2/3rds of sandboxes. 5m later it begins reaping the rest.

Presumably this helps smooth latency during retry storms, or if traffic returns!

One unreasonably effective way to lower the average latency of a service is to minimize the causes of p99 events.

Here, we've managed to absolutely crush the Max Post Runtime Duration from ~80ms to 500µs!
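
A rough sketch of why trimming the tail moves the average. The 80ms and 0.5ms values echo the figures above, but the mix is purely an assumption for illustration: 1% of invocations hit the slow path and everything else takes 0.5ms. `mean_latency_ms` is a hypothetical helper, not anything from the extension.

```python
def mean_latency_ms(base_ms: float, tail_ms: float, tail_fraction: float) -> float:
    """Mean of a two-point latency mix: most requests take `base_ms`,
    while `tail_fraction` of them take `tail_ms`."""
    return (1 - tail_fraction) * base_ms + tail_fraction * tail_ms


# Assumed mix: 1% of invocations land in the tail (hypothetical).
before = mean_latency_ms(base_ms=0.5, tail_ms=80.0, tail_fraction=0.01)
after = mean_latency_ms(base_ms=0.5, tail_ms=0.5, tail_fraction=0.01)

print(f"mean before: {before:.3f} ms")  # mean before: 1.295 ms
print(f"mean after:  {after:.3f} ms")   # mean after:  0.500 ms
```

Under that assumed mix, the rare 80ms events account for more than half of the mean, even though 99% of requests never see them.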

You've gotta be spending a decent chunk of $$$ on CloudWatch Logs for this to help, but still – a price cut is a price cut!

Just drop a pprof file into a project, explain the dimensions of the profile, then let the LLM make suggestions to solve the hot spots.

Instant performance boost

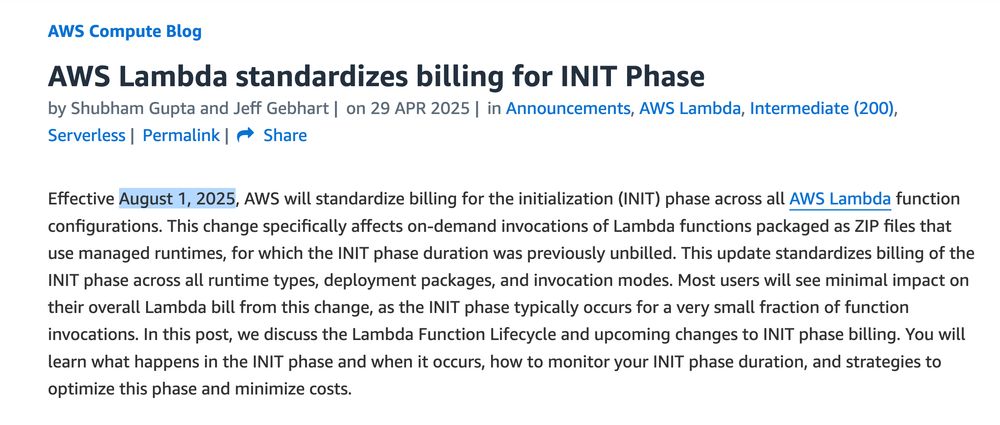

My hottest take is that AWS should have done this years ago.

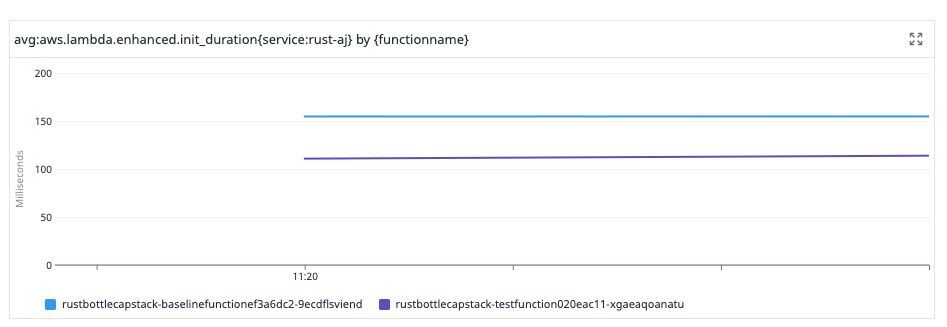

I spent 2024 shipping our next-generation Lambda Extension, which offers an 82% improvement in cold start time, better aggregation and flushing options, and lower overall overhead – all in a substantially smaller binary package.

More: www.datadoghq.com/blog/enginee...
