• Drag & drop images → instant IIIF Image Service

• Create a folder → drag images in → instant presentation manifest!

Work in progress, but already a lot of fun. Ping me if you want to know more!

First code is available here: github.com/rsimon/liiive

Self-hosted setup coming by end of February.

#OpenSource #DigitalHumanities

Coming up next: analysis and evaluation of the retrieved contents and generated text to see how well the model actually performs!

Test it out at machina.ait.ac.at/chat/

That's Cantaloupe (image server) + Astro (GUI) + NGINX (proxy) – bundled with Docker. Drag & drop images → instant IIIF 🚀

Still needs work + basic manifest editing, but it’s a start!

I've been using `uclalibrary/cantaloupe`. It's working great – I just want to make sure there are no other Docker images I should know about that are more widely recommended.

Looking for basic stuff like end-user-friendly bulk upload, basic metadata editing (for presentation manifests) and sorting into collections.

• Improved relevance ranking for image sources

• Inline thumbnails when images are explicitly mentioned

More UX and behavior tweaks are coming today + next week. Stay tuned!

Anyone can nominate things that are #DigitalHumanities, available to voters, and updated in 2025. #DH

Nominations close 2026-02-26 and are the only way something gets on the ballot. You only need to nominate something once.

dhawards.org/dhawards2025...

I used a dataset I labelled in 2022 and left on @hf.co for 3 years 😬.

It finds illustrated pages in historical books. No server. No GPU.

I’ve just updated my site with more info on how I help Digital Humanities researchers, DH labs, museums, libraries, and archives with solutions for data exploration, annotation, and analysis — plus a selection of past projects.

Take a peek: 👉 rainersimon.io

Impressive results even on cursive script.

Read the full CfP and find a link to submit: iiif.io/event/2026/n...

Have material you'd like me to test? Ping me!

I'm looking for testers for a VLM pipeline for auto-enrichment (transcription, captions, tags, IIIF). If you share a few sample images, I'll run them through + share results. Would love to hear your feedback!

I'm curious about what/how you're indexing. Metadata, transcripts/chunks? Images full or segmented? LLM-generated descriptions? What's your query and response workflow?

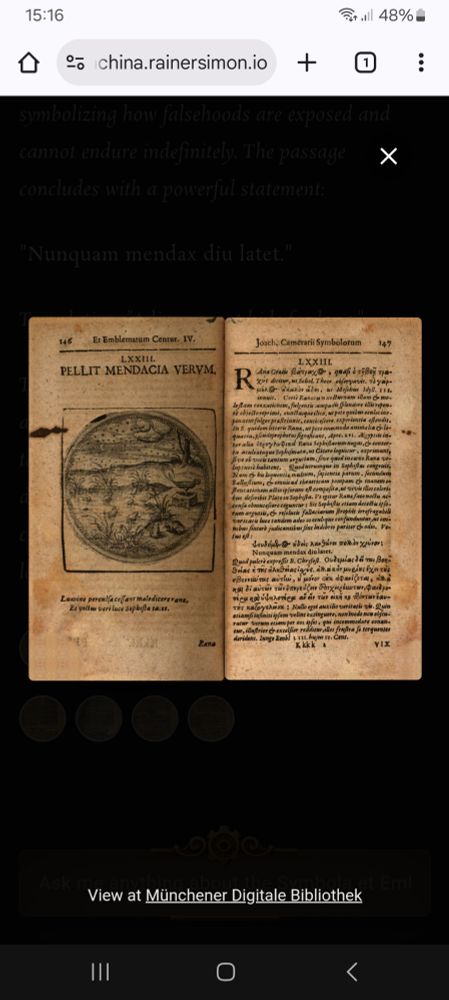

Story sounded plausible. But I wonder how much is just AI hallucination. Google only gave two hits, both on the Google Books edition of Symbola et Emblemata.

Any early modern creature lore experts who know more? 🙂

Try it here: immarkus.xmarkus.org

Docs: github.com/rsimon/immar...

Check it out if you haven't already!

machina.rainersimon.io

Try it here: machina.rainersimon.io
