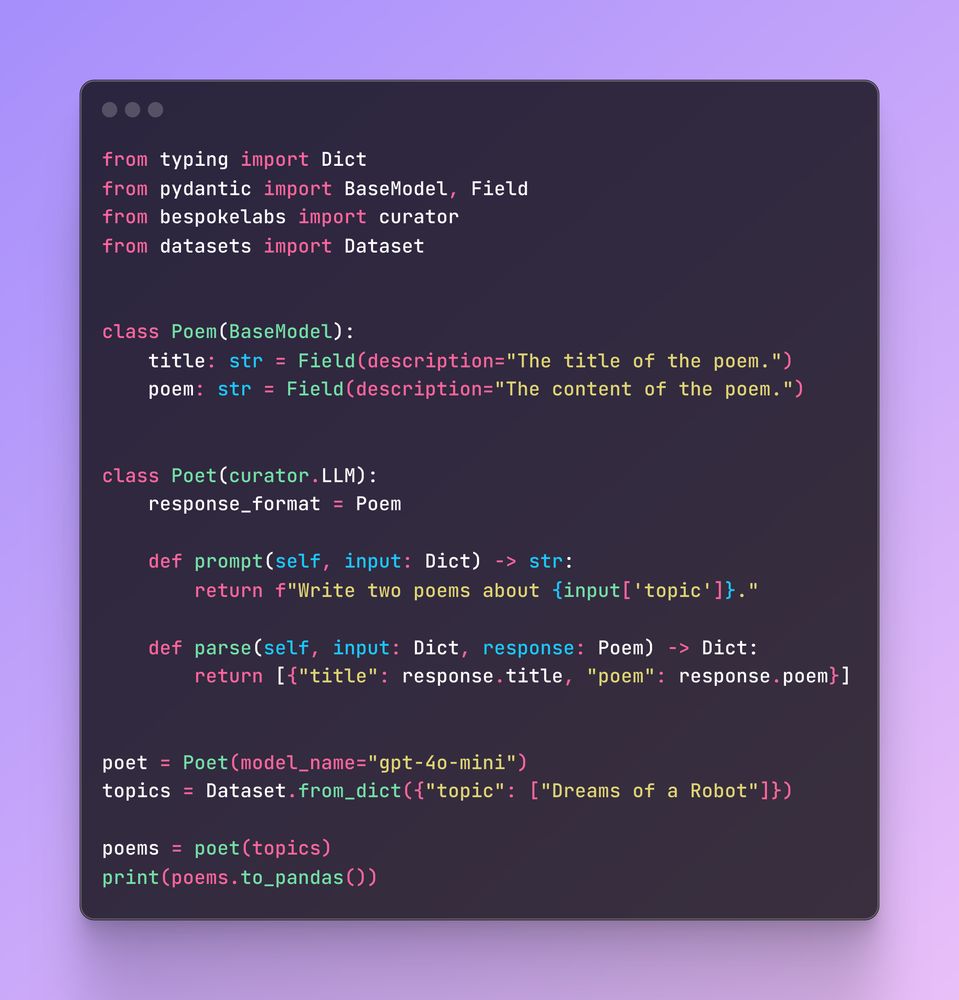

Create synthetic data pipelines with ease!

- Retries and caching included

- Inference via LiteLLM, vLLM, and popular batch APIs

- Asynchronous operations

🔗 URL: buff.ly/ajPRT1l
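The post does not show the tool's API, so what follows is only a library-agnostic sketch of what "retries and caching" plus "asynchronous operations" usually mean in such a pipeline. `flaky_generate` and `CachedRetryingClient` are hypothetical names; a real pipeline would replace `flaky_generate` with a call to LiteLLM, a vLLM server, or a batch API.

```python
import asyncio
import hashlib
import json

# Hypothetical stand-in for an LLM call. It fails on the first attempt for
# each prompt so that the retry path is exercised.
_attempts: dict[str, int] = {}


async def flaky_generate(prompt: str) -> str:
    _attempts[prompt] = _attempts.get(prompt, 0) + 1
    await asyncio.sleep(0)  # yield control, as a real network call would
    if _attempts[prompt] == 1:
        raise ConnectionError("transient failure")
    return f"synthetic answer for: {prompt}"


class CachedRetryingClient:
    """Wraps an async generate function with an in-memory cache and retries."""

    def __init__(self, generate, max_retries: int = 3, base_delay: float = 0.01):
        self._generate = generate
        self._max_retries = max_retries
        self._base_delay = base_delay
        self._cache: dict[str, str] = {}  # in-memory only; a real tool might persist it

    @staticmethod
    def _key(prompt: str) -> str:
        # Stable cache key derived from the request contents.
        return hashlib.sha256(json.dumps({"prompt": prompt}).encode()).hexdigest()

    async def __call__(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:  # cache hit: the model is not called at all
            return self._cache[key]
        for attempt in range(self._max_retries):
            try:
                result = await self._generate(prompt)
                self._cache[key] = result
                return result
            except ConnectionError:
                if attempt + 1 < self._max_retries:
                    # Exponential backoff before the next attempt.
                    await asyncio.sleep(self._base_delay * 2 ** attempt)
        raise RuntimeError(f"gave up after {self._max_retries} attempts")


async def main() -> list[str]:
    client = CachedRetryingClient(flaky_generate)
    prompts = [f"question {i}" for i in range(5)]
    # Fan out all prompts concurrently.
    results = await asyncio.gather(*(client(p) for p in prompts))
    # A second pass is served entirely from the cache.
    await asyncio.gather(*(client(p) for p in prompts))
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))
```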

𝐯𝐋𝐋𝐌 𝐟𝐨𝐫 𝐁𝐞𝐠𝐢𝐧𝐧𝐞𝐫𝐬 𝐏𝐚𝐫𝐭 𝟑:📖 𝐃𝐞𝐩𝐥𝐨𝐲𝐦𝐞𝐧𝐭 𝐎𝐩𝐭𝐢𝐨𝐧𝐬

Learn to deploy #vLLM everywhere! Even on CPU🤫

✅Platform & model Support Matrix

✅Install on GPU & CPU

✅Build Wheel from scratch | Python vLLM package

✅Docker/Kubernetes Deployment

✅Running vLLM server (Offline + Online inference)
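For the Docker/Kubernetes and online-inference items, clients usually have to wait until the server has finished loading the model. Below is a minimal readiness-poll sketch using only the standard library, assuming the server listens on `localhost:8000` (the `vllm serve` default) and answers `GET /health` with HTTP 200 once it is ready; check both against the vLLM version you deploy. `wait_until_ready` is a hypothetical helper, not part of vLLM.

```python
import time
import urllib.request


def wait_until_ready(base_url: str = "http://localhost:8000",
                     timeout_s: float = 600.0,
                     poll_s: float = 2.0) -> bool:
    """Poll GET {base_url}/health until it answers 200 or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/health", timeout=poll_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # Connection refused, timeout, or a non-2xx reply (HTTPError is an
            # OSError subclass): the container or model is probably still starting.
            pass
        time.sleep(poll_s)
    return False
```

Inside Kubernetes the same check would more commonly be a `readinessProbe` pointing at `/health`; a Python helper like this is handier for scripts or CI jobs that start a container and then send requests.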

💎What makes #vLLM the Rolls-Royce of inference? 👇🏻Check out our new blog, where we break it down into 5 performance-packed layers 😎 #TherYouGo

𝐯𝐋𝐋𝐌 𝐟𝐨𝐫 𝐁𝐞𝐠𝐢𝐧𝐧𝐞𝐫𝐬 𝐏𝐚𝐫𝐭 𝟐:📖𝐊𝐞𝐲 𝐅𝐞𝐚𝐭𝐮𝐫𝐞𝐬 & 𝐎𝐩𝐭𝐢𝐦𝐢𝐳𝐚𝐭𝐢𝐨𝐧s

💎 What makes #vLLM the Rolls-Royce of inference?

👉check it out: cloudthrill.ca/what-is-vllm...

✅ #PagedAttention #PrefixCaching #ChunkedPrefill

✅ #SpeculativeDecoding #FlashAttention #lmcache

✅ Tensor & #PipelineParallelism⚡
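To make the first item concrete: PagedAttention stores each sequence's KV cache in fixed-size blocks that need not be contiguous in GPU memory, with a per-sequence block table mapping logical blocks to physical ones. The toy allocator below illustrates only that bookkeeping idea; `BlockAllocator`, `Sequence` and `BLOCK_SIZE = 16` are illustrative choices, not vLLM's internal classes.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per KV-cache block (illustrative value)


class BlockAllocator:
    """Hands out fixed-size physical KV-cache blocks from one shared free pool."""

    def __init__(self, num_blocks: int):
        self.free = list(range(num_blocks))

    def allocate(self) -> int:
        if not self.free:
            # A real scheduler would preempt or swap out a sequence here.
            raise MemoryError("KV cache exhausted")
        return self.free.pop()

    def release(self, blocks: list[int]) -> None:
        self.free.extend(blocks)


@dataclass
class Sequence:
    block_table: list[int] = field(default_factory=list)  # logical block i -> physical id
    num_tokens: int = 0

    def append_token(self, alloc: BlockAllocator) -> None:
        # Grab a new physical block only when the last one is full, so each
        # sequence wastes at most one partially filled block.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(alloc.allocate())
        self.num_tokens += 1

    def physical_slot(self, pos: int) -> tuple[int, int]:
        """Map a logical token position to (physical block id, offset in block)."""
        return self.block_table[pos // BLOCK_SIZE], pos % BLOCK_SIZE


if __name__ == "__main__":
    alloc = BlockAllocator(num_blocks=8)
    a, b = Sequence(), Sequence()
    for _ in range(20):
        a.append_token(alloc)  # 20 tokens -> 2 blocks
    for _ in range(5):
        b.append_token(alloc)  # 5 tokens -> 1 block
    print(a.block_table, b.block_table, len(alloc.free))
    alloc.release(a.block_table)  # a finished sequence returns its blocks
    print(len(alloc.free))
```

Because blocks are allocated on demand rather than reserved for the maximum sequence length, many more sequences fit in the same memory, which is the property the batching and prefix-caching features build on.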

@reach_vb:

Letsss gooo! DeepSeek just released a 3B OCR model on Hugging Face 🔥 Optimised to be token-efficient AND to scale to ~200K+ pages/day on an A100-40G. Same arch as DeepSeek VL2. Use it with Transformers, vLLM and more 🤗 https://huggingface.co/...
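For a sense of scale, here is the arithmetic behind the quoted ~200K pages/day, assuming a single A100-40G running around the clock (the post gives only the daily figure).

```python
PAGES_PER_DAY = 200_000          # figure quoted in the post
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 s, assuming 24/7 operation

pages_per_second = PAGES_PER_DAY / SECONDS_PER_DAY
ms_per_page = SECONDS_PER_DAY * 1000 / PAGES_PER_DAY

print(f"{pages_per_second:.2f} pages/s, {ms_per_page:.0f} ms per page")
```

That works out to about 2.3 pages per second, or roughly 432 ms of GPU time per page on average. Pages are likely processed in batches, so this is a throughput figure, not per-page latency.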

Constrained output for LLMs (e.g., the Outlines library in vLLM, which forces models to output JSON conforming to a Pydantic schema) is cool!

But because output tokens cost much more latency than input tokens, bespoke, low-token output formats are often better when speed matters.
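A rough illustration of the trade-off: the same hypothetical record emitted as JSON versus a bespoke pipe-delimited format with a fixed field order. Character count is used here only as a proxy for output tokens (an assumption; actual token counts depend on the tokenizer), and `parse_compact` is a hypothetical helper.

```python
import json

# Hypothetical extraction result.
record = {"name": "Ada Lovelace", "born": 1815, "field": "mathematics"}

# Schema-shaped output, as JSON-constrained decoding would emit it.
json_out = json.dumps(record)

# Bespoke compact format: fixed field order, pipe-delimited, no keys or quotes.
FIELDS = ("name", "born", "field")
compact_out = "|".join(str(record[f]) for f in FIELDS)


def parse_compact(text: str) -> dict:
    """Rebuild the dict from the compact line using the agreed field order."""
    name, born, field = text.split("|")
    return {"name": name, "born": int(born), "field": field}


# Fewer characters generally means fewer generated tokens (proxy only).
print(len(json_out), len(compact_out))
print(parse_compact(compact_out) == record)
```

The catch is that the schema is no longer enforced during decoding: the parser has to reject malformed lines (and the delimiter must never occur inside a field), with retries handled in application code.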

🗓️ Thursday 17th 11:30 AM EDT

🎯 A chill livestream unpacking LLM #Quantization: #vllm vs #ollama. Learn about the What & How.

🔥Dope guest stars:

#bartowski from arcee.ai & Eldar Kurtic from #RedHat

🔗Stream on YouTube & LinkedIn:

www.youtube.com/watch?v=XTE0...
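As a minimal illustration of the "what" of weight quantization: symmetric, round-to-nearest 4-bit quantization with one scale per small group of weights. This is a toy sketch only; real formats such as GGUF, AWQ and GPTQ add packing, calibration or error-compensation steps that are not shown, and the function names are hypothetical.

```python
def quantize_group(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Map one group of floats to signed ints in [-2^(bits-1), 2^(bits-1)-1] plus a scale."""
    qmax = 2 ** (bits - 1) - 1                 # 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize_group(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes and the group scale."""
    return [v * scale for v in q]


def quantize(weights: list[float], group_size: int = 4, bits: int = 4):
    """Split weights into groups and quantize each group with its own scale."""
    return [quantize_group(weights[i:i + group_size], bits)
            for i in range(0, len(weights), group_size)]


if __name__ == "__main__":
    w = [0.7, -0.35, 0.1, 0.0, 2.0, -1.0, 0.5, 0.25]
    for q, scale in quantize(w):
        print(q, round(scale, 4), dequantize_group(q, scale))
```

Each weight is stored as 4 bits plus a share of its group's scale instead of 16 bits, and because the scale is set from the group's largest magnitude, the rounding error per weight is at most half a scale step.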

📦 vllm-project / vllm

⭐ 34,172 (+145)

🗒 Python

A high-throughput and memory-efficient inference and serving engine for LLMs

🤯 Expanded model support? ✅

💪 Intel Gaudi integration? ✅

🚀 Major engine & torch.compile boosts? ✅

🔗 github.com/vllm-project...

💎This time, we shift from theory to practice, covering #vLLM installs across platforms. Check out our new blog, where we break it down into 5 sections 😎 #TherYouGo

📦 Alpha-VLLM / LLaMA2-Accessory

⭐ 489 (+174)

🗒 Python

An Open-source Toolkit for LLM Development

📦 vllm-project / vllm

⭐ 54,301 (+143)

🗒 Python

A high-throughput and memory-efficient inference and serving engine for LLMs

#AI #Large #Language #Models #Red #Hat #AI #benchmarks #AI #optimization #enterprise

"Now you can deploy Qwen3 via Ollama, LM Studio, SGLang, and vLLM — choose from multiple formats including GGUF, AWQ, and GPTQ for easy local deployment."

Find all models in the Qwen3 collection on Hugging Face @ huggingface.co/collections/...

📦 vllm-project / vllm

⭐ 48,168 (+100)

🗒 Python

A high-throughput and memory-efficient inference and serving engine for LLMs

📦 vllm-project / vllm

⭐ 46,215 (+128)

🗒 Python

A high-throughput and memory-efficient inference and serving engine for LLMs

Built on vLLM + Megatron-LM. Create agents that actually learn & improve.

🔥 github.com/tensorwavecl...

✨ Let's advance LLMs together

#AI #OpenSource

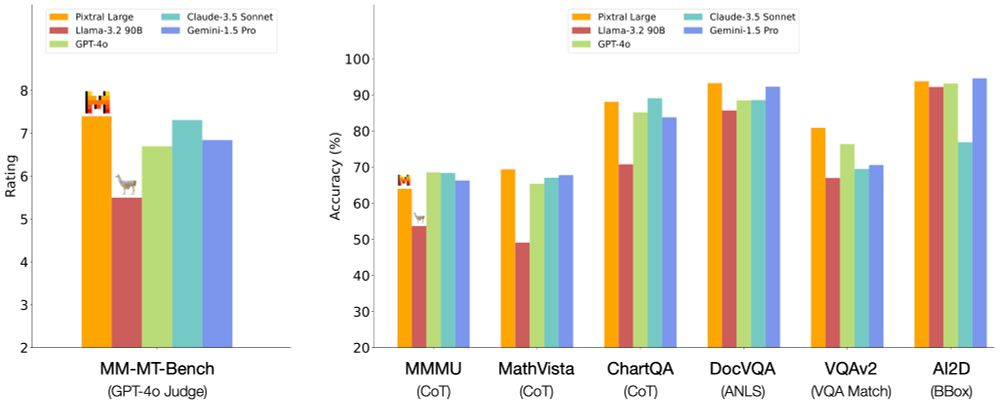

Run Pixtral Large with multiple input images from day 0 using vLLM.

Install vLLM:

pip install -U vllm

Run Pixtral Large:

vllm serve mistralai/Pixtral-Large-Instruct-2411 --tokenizer_mode mistral --limit_mm_per_prompt 'image=10' --tensor-parallel-size 8
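Once the server is up, multiple images go into a single request as separate `image_url` content parts in the OpenAI-compatible Chat Completions format that `vllm serve` exposes. A minimal standard-library sketch, assuming the default address `http://localhost:8000`; the image URLs and the `build_payload`/`send` helpers are hypothetical, and `MAX_IMAGES` simply mirrors the `image=10` limit in the serve command above.

```python
import json
import urllib.request

# Assumption: `vllm serve` is running locally on its default port.
ENDPOINT = "http://localhost:8000/v1/chat/completions"
MODEL = "mistralai/Pixtral-Large-Instruct-2411"
MAX_IMAGES = 10  # mirrors --limit_mm_per_prompt 'image=10'


def build_payload(prompt: str, image_urls: list[str]) -> dict:
    """One user turn holding a text part followed by several image parts."""
    if len(image_urls) > MAX_IMAGES:
        raise ValueError(f"server allows at most {MAX_IMAGES} images per prompt")
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": u}} for u in image_urls]
    return {"model": MODEL,
            "messages": [{"role": "user", "content": content}],
            "max_tokens": 256}


def send(payload: dict) -> dict:
    """POST the payload to the server and return the decoded JSON response."""
    req = urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical image URLs; replace with your own.
    urls = ["https://example.com/chart.png", "https://example.com/table.png"]
    payload = build_payload("Compare these two images.", urls)
    print(json.dumps(payload, indent=2))
    # With the server running:
    # print(send(payload)["choices"][0]["message"]["content"])
```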

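Generative AI and search technology take a big leap forward!

Acroquest's YAMALEX team presents

a roundup of its 9 Advent Calendar articles

🔥 3 must-read articles:

・Fast partial-match search in Elasticsearch

・Multimodal PDF search with ColQwen2

・Speeding up LLM inference with vLLM

Details 👇

acro-engineer.hatenablog.com/entry/2024/1...

#AI #Search #Technology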
