Read more about this research supported by @stanfordhai.bsky.social in a new paper published in Nature: www.nature.com/articles/s41...

(by 9bow)

https://d.ptln.kr/4680

#paper #top-ml-papers-of-the-week #rag #claude #code-llm #llm-math #open-sora #tree-search #goldfish-loss #planrag #long-context-language-model #textgrad #deepseek-coder-v2

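That said, the analogy with "differentiation" still doesn't quite click for me. It seems to clash with my understanding of differentiation in mathematics and get in the way of understanding. It's better to approach it as something entirely different.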

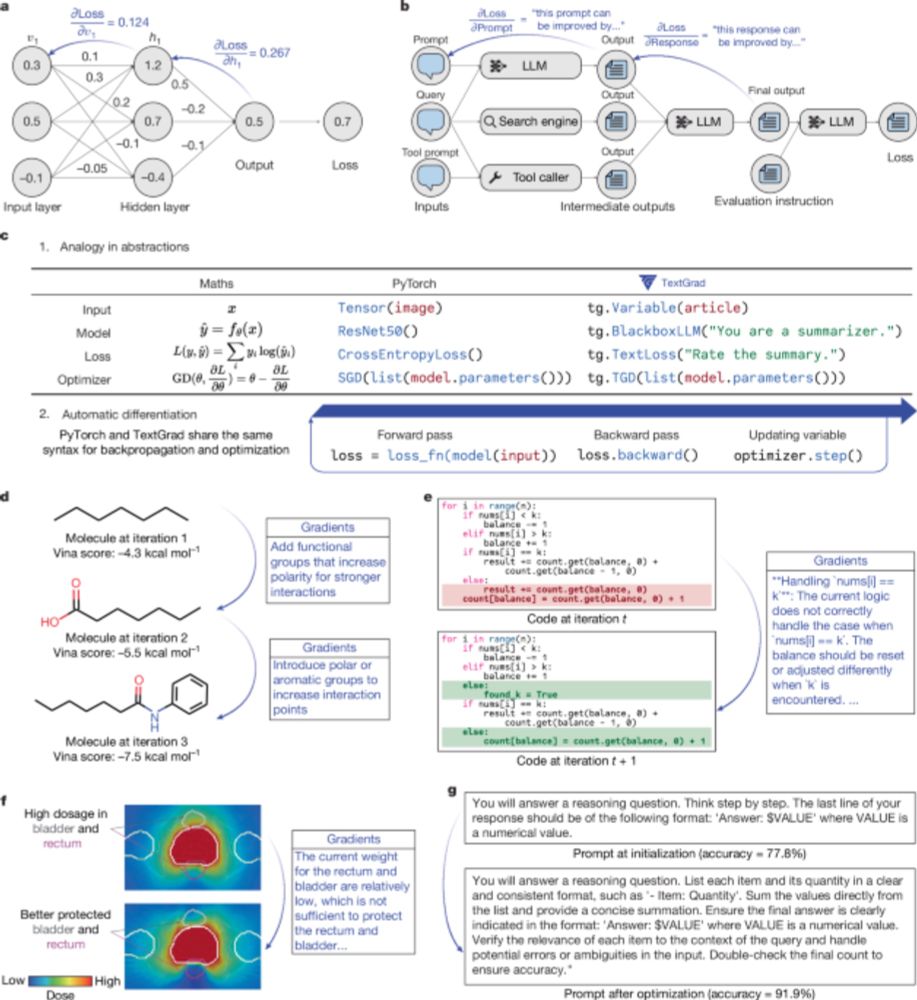

TextGrad: Automatic "Differentiation" via Text

https://arxiv.org/abs/2406.07496
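
For anyone who shares the unease about the "differentiation" analogy, it may help to put the two rules side by side. The first line is the ordinary chain rule. The second is a sketch of the textual counterpart, modeled on the notation in the arXiv paper (the exact symbols here are an assumption, not a verbatim quote): $\nabla_{\text{LLM}}$ is not a derivative but an LLM call that returns written criticism.

$$
\frac{\partial L}{\partial x} = \frac{\partial L}{\partial y} \cdot \frac{\partial y}{\partial x}
\qquad \text{(numeric: multiply local derivatives)}
$$

$$
\frac{\partial\,\text{Evaluation}}{\partial\,\text{Prompt}}
= \nabla_{\text{LLM}}\!\left(\text{Prompt},\ \text{Prediction},\ \frac{\partial\,\text{Evaluation}}{\partial\,\text{Prediction}}\right)
\qquad \text{(textual: ask an LLM for feedback)}
$$

Only the graph structure carries over: feedback on a downstream output is passed back to the upstream text that produced it. Nothing is multiplied and no slope is computed, so treating it as a separate concept from calculus seems reasonable.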

Transforms prompts for #LLM accuracy using backpropagated textual gradients

📈 Teacher-student model refines prompts

🔄 Backpropagates text-based optimization

🧠 Enhances GPQA, MMLU, business tasks

👉 Discuss in Discord: linktr.ee/qdrddr

#AI #RAG #MachineLearning

It uses natural language feedback to optimize AI systems, kinda like PyTorch but for language models. It builds on ideas from DSPy, another Stanford-developed framework for optimizing LLM-based systems. The basic claim is that it automates prompt engineering so you don't have to do it by hand.
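
To make the "natural language feedback" idea concrete, here is a minimal toy sketch of the loop: critique the text, then rewrite it using the critique, and repeat. This is not the TextGrad API. `TextVariable`, `toy_critic`, `toy_editor`, and `optimize` are hypothetical names invented for illustration, and the two rule-based functions stand in for what would be LLM calls in a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TextVariable:
    """Text being optimized, plus the history of feedback it received."""
    value: str
    feedback_log: List[str] = field(default_factory=list)


def toy_critic(text: str) -> str:
    """Hypothetical stand-in for an LLM evaluator.

    Returns one piece of feedback, or "" when it has no complaints.
    """
    if " very " in f" {text} ":
        return "remove filler word: very"
    if not text.endswith("."):
        return "end with a period"
    return ""


def toy_editor(text: str, feedback: str) -> str:
    """Hypothetical stand-in for an LLM rewriter that applies the feedback."""
    if feedback == "remove filler word: very":
        return " ".join(word for word in text.split() if word != "very")
    if feedback == "end with a period":
        return text + "."
    return text  # unknown feedback: leave the text unchanged


def optimize(
    variable: TextVariable,
    critic: Callable[[str], str],
    editor: Callable[[str, str], str],
    max_steps: int = 5,
) -> TextVariable:
    """Alternate critique ("backward") and rewrite ("step") until the critic is satisfied."""
    for _ in range(max_steps):
        feedback = critic(variable.value)  # the "textual gradient"
        if not feedback:
            break
        variable.feedback_log.append(feedback)
        variable.value = editor(variable.value, feedback)
    return variable


result = optimize(TextVariable("the answer is very clear"), toy_critic, toy_editor)
print(result.value)
print(result.feedback_log)
```

The sketch also shows why feedback quality matters: the editor can only fix what the critic bothers to point out.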

I've been diving into TextGrad lately, and I thought I'd share some insights. It's a Python framework from Stanford that's making waves in AI optimization. Here's the lowdown: 🧵

Paper: www.nature.com/articles/s41...

Code: github.com/zou-group/te...

Amazing job by Mert Yuksekgonul leading this project w/ Fede Bianchi, Joseph Boen, Sheng Liu, Pan Lu, Carlos Guestrin, Zhi Huang

Will try textgrad alone as well to compare.

How to create good eval prompts is still a part I don't quite get.

TextGrad: Automatic "Differentiation" via Text

https://arxiv.org/abs/2406.07496

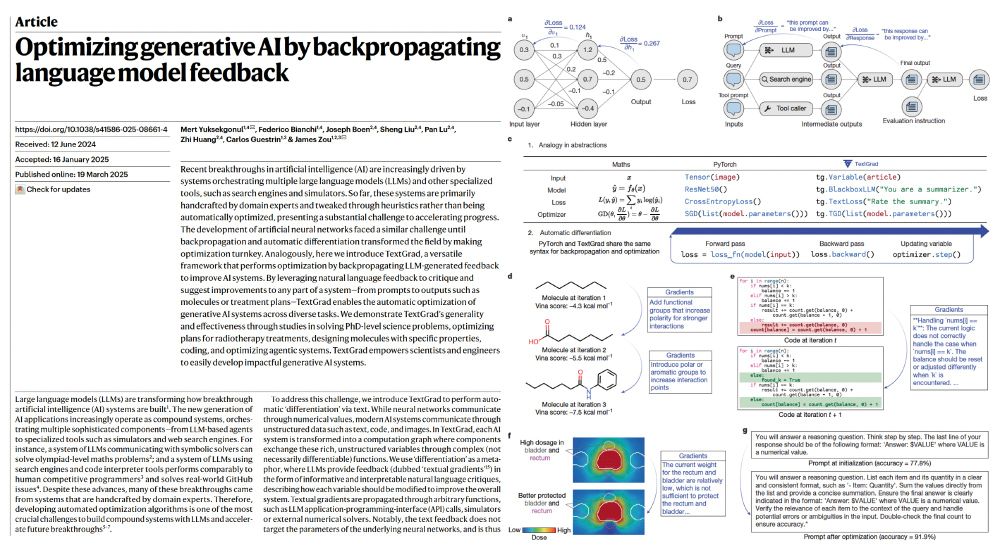

Abstract: Recent studies highlight the promise of LLM-based prompt optimization, especially with TextGrad, which automates "differentiation" via texts and backpropagates textual [1/9 of https://arxiv.org/abs/2502.19980v1]

Nature: www.nature.com/articles/s41...

└ Still new, so it might have some quirks

└ Could struggle with super complex AI systems

└ Depends on high-quality feedback to work well

What do you think?

Could TextGrad be useful in your work? Have you already used it or is it just a gimmick?

huggingface.co/spaces/Hugg...

And there is a lot more we can do, e.g. prompt optimization (DSPy/TextGrad), workflow and UI.

www.nature.com/articles/s41...

github.com/Laurian/cont...

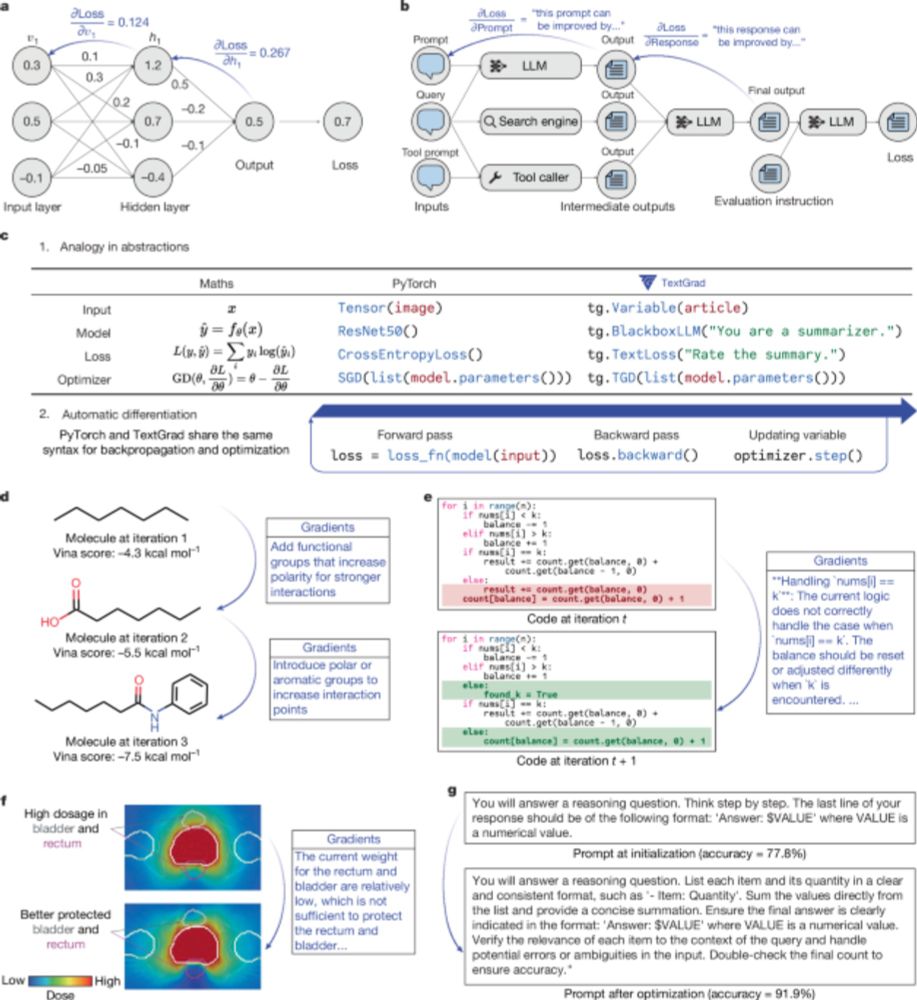

We present a general method for genAI to self-improve via our new *calculus of text*.

We show how this optimizes agents🤖, molecules🧬, code🖥️, treatments💊, non-differentiable systems🤯 + more!
