Toy-Bench-1997

unreasonably-clean-data-Bench

5y-old-Bench

2y-old-Bench

Live-Bench (collected last year)

Alive-Bench (real world, real time user satisfaction stats)

Prediction-Market-Bench: what's Gemini's perf. at EoY?

- SemEval 2023 Task 12: AfriSenti https://afrisenti-semeval.github.io/

- SemEval 2024 Task 1: SemRel https://semantic-textual-relatedness.github.io/

- SemEval 2025 Task 11: Bridging the Gap https://github.com/emotion-analysis-project/SemEval2025-task11 […]

We extend the BLEnD Benchmark to >30 language-culture pairs. [Our task is Junior-friendly, with live Q&A & tutorials.] 1/

MWAHAHA: A Competition on Humor Generation […]

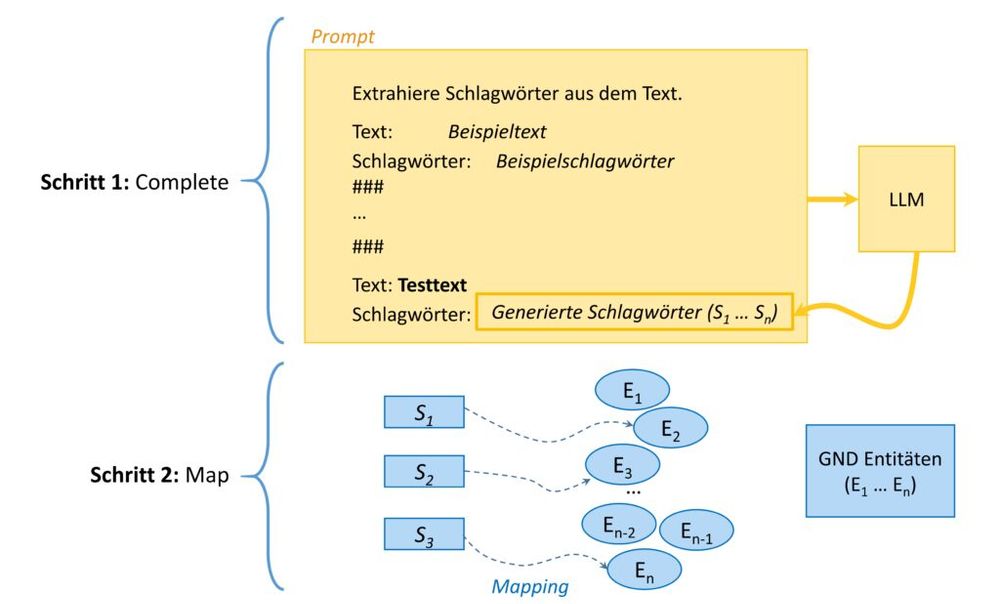

📸 DNB, Maximilian Kähler, Lisa Kluge CC BY 4.0

Haaste […]

UWBa at SemEval-2025 Task 7: Multilingual and Crosslingual Fact-Checked Claim Retrieval

https://arxiv.org/abs/2508.09517

LyS at SemEval 2025 Task 8: Zero-Shot Code Generation for Tabular QA

https://arxiv.org/abs/2508.09012

ATLANTIS at SemEval-2025 Task 3: Detecting Hallucinated Text Spans in Question Answering

https://arxiv.org/abs/2508.05179

fact check AI at SemEval-2025 Task 7: Multilingual and Crosslingual Fact-checked Claim Retrieval

https://arxiv.org/abs/2508.03475

CSIRO-LT at SemEval-2025 Task 11: Adapting LLMs for Emotion Recognition for Multiple Languages

https://arxiv.org/abs/2508.01161

arXiv: arxiv.org/abs/2501.03468

GitHub: github.com/IBM/mt-rag-b... (please 🌟!)

MTRAGEval: ibm.github.io/mt-rag-bench...

ITUNLP at SemEval-2025 Task 8: Question-Answering over Tabular Data: A Zero-Shot Approach using LLM-Driven Code Generation

https://arxiv.org/abs/2508.00762

We invite researchers, teams, and solo experimenters to benchmark systems and explore how machines understand stories.

All the details are on our site:

🌐 narrative-similarity-task.github.io

#SemEval #NLP #NarrativeAI #DH #CLS

We are announcing the shared task on narrative similarity: SemEval-2026 Task 4: Narrative Story Similarity and Narrative Representation Learning

We invite you to benchmark LLMs, embedding models, or even test your favorite narrative formalism. Sample data is now available!
