https://zstevenwu.com/

arxiv.org/abs/2401.04056

2/3.

1. Self-Play Preference Optimization (SPO).

2. Direct Nash Optimization (DNO).

🧵 1/3.

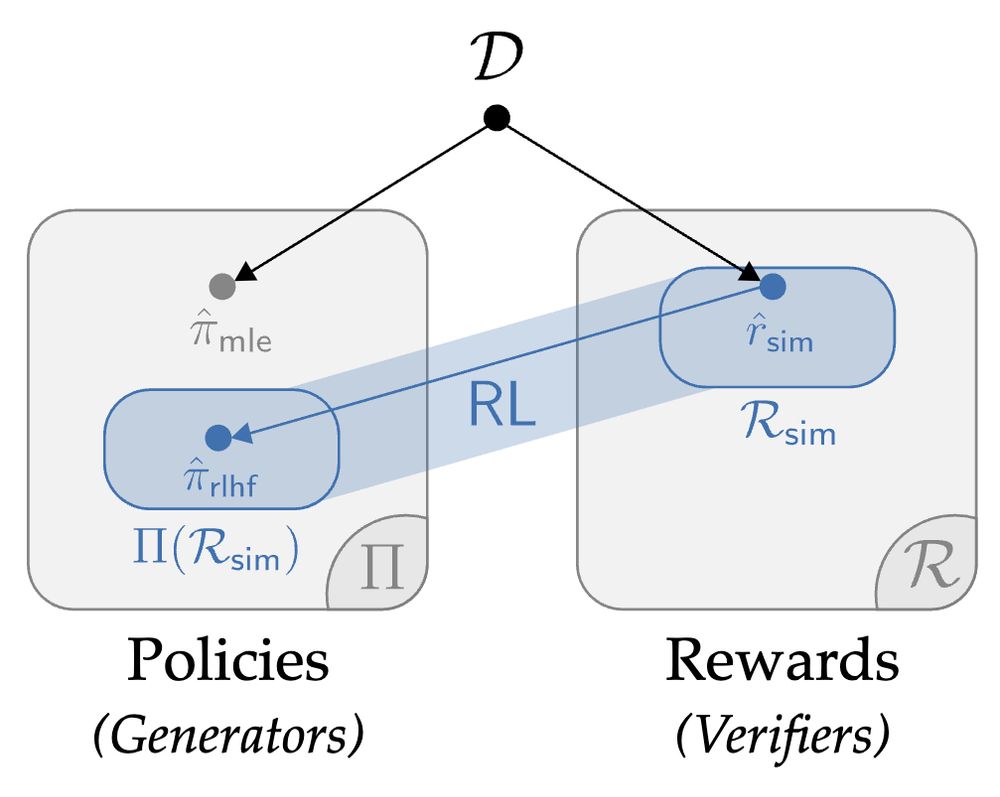

The last was a position paper on RLHF/alignment.

This week I will share papers (in pairs) on the topic of "game-theoretic or social choice meets alignment/RLHF".
