https://chaufanglin.github.io/

Our findings reveal varying patterns across systems, with mismatches to human perception.

We recommend reporting *effective SNR gains* alongside WERs for a more comprehensive performance assessment 🧐

[8/8] 🧵

In contrast, AVEC shows a stronger use of visual information, with a significant negative correlation, especially in noisy conditions.

[7/8] 🧵

We calculated an individual WER (IWER) for each word and computed the Pearson correlation between MaFI scores and IWERs for the audio-only, video-only, and AV models. [6/8] 🧵
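
A minimal sketch of this correlation step, under stated assumptions: the thread does not give the exact IWER formula, so here a word's IWER is taken to be the fraction of its occurrences that were misrecognized. The names `individual_wer` and `pearson_r` are hypothetical helpers, not the authors' code.

```python
import numpy as np

def individual_wer(outcomes):
    """Map each word to its error rate.

    outcomes: dict of word -> list of booleans, True when that
    occurrence of the word was recognized correctly.
    ASSUMPTION: IWER = 1 - (correct occurrences / total occurrences).
    """
    return {word: 1.0 - sum(hits) / len(hits) for word, hits in outcomes.items()}

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # np.corrcoef returns the 2x2 correlation matrix; [0, 1] is r(x, y).
    return float(np.corrcoef(x, y)[0, 1])

def correlate_mafi_with_iwer(mafi_scores, outcomes):
    """Correlate per-word MaFI scores with per-word IWERs.

    Only words present in both inputs are used.
    """
    iwer = individual_wer(outcomes)
    words = sorted(set(mafi_scores) & set(iwer))
    return pearson_r([mafi_scores[w] for w in words], [iwer[w] for w in words])
```

Running this once per model (audio-only, video-only, AV) gives one coefficient per system. If a significance test is also needed, `scipy.stats.pearsonr` returns the p-value along with the coefficient.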

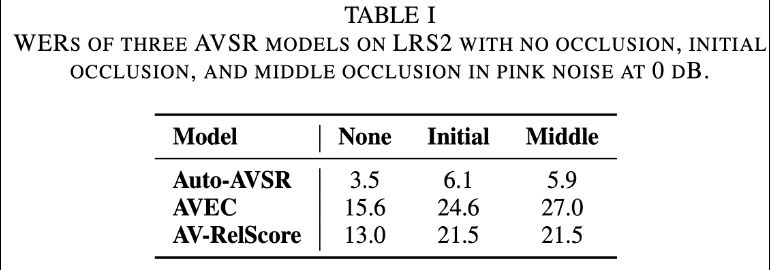

Auto-AVSR & AV-RelScore are equally affected by initial & middle occlusions, while AVEC is more impacted by middle occlusion.

Unlike humans, AVSR models do not depend on initial visual cues.

[5/8] 🧵

We test AVSR by occluding the initial vs. middle third of frames for each word, comparing 3 conditions: no occlusion, initial occlusion, and middle occlusion.

[4/8] 🧵
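
The occlusion setup can be sketched roughly as follows. This is an illustration only: it assumes word boundaries are already known as frame indices and that "occluding" means blanking frames to zero, neither of which the thread specifies. `occlude_third` is a hypothetical name.

```python
import numpy as np

def occlude_third(frames, word_start, word_end, position):
    """Return a copy of `frames` with one third of a word's frames blanked.

    frames: array of shape (T, H, W); the word spans frames
    [word_start, word_end).
    position: "none", "initial", or "middle".
    ASSUMPTION: occlusion is modeled by setting the frames to zero.
    """
    out = frames.copy()
    if position == "none":
        return out
    third = (word_end - word_start) // 3
    if position == "initial":
        lo = word_start
    elif position == "middle":
        lo = word_start + third
    else:
        raise ValueError(f"unknown position: {position!r}")
    out[lo:lo + third] = 0
    return out
```

Applying the function with each of the three `position` values to the same clip yields the three conditions being compared.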

This metric quantifies the benefit of the visual modality in reducing WER compared to the audio-only system. [3/8] 🧵

Through this, we hope to gain insights as to whether the visual component is being fully exploited in existing AVSR systems. [2/8] 🧵
