For anyone looking for an introduction to the topic, we've now uploaded all materials to the website: interpretingdl.github.io/speech-inter...

This is my first big conference!

📅 Tuesday morning, 10:30–12:00, during Poster Session 2.

💬 If you're around, feel free to message me. I would be happy to connect, chat, or have a drink!

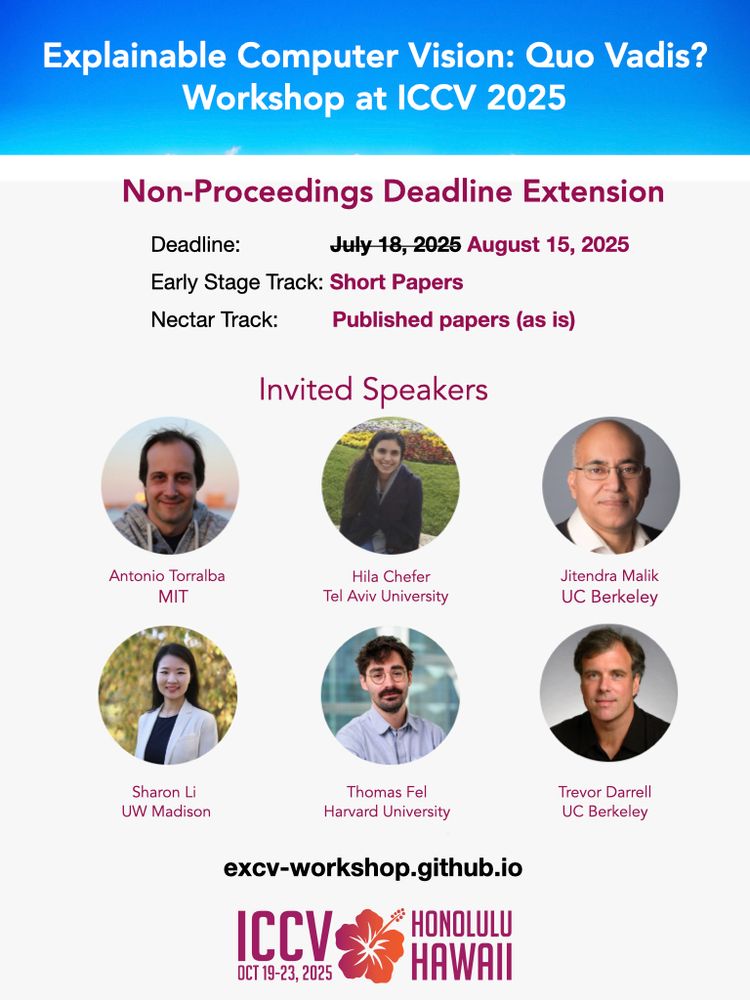

Our Non-proceedings track is open till August 15th for the eXCV workshop at ICCV.

Our nectar track accepts published papers, as is.

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

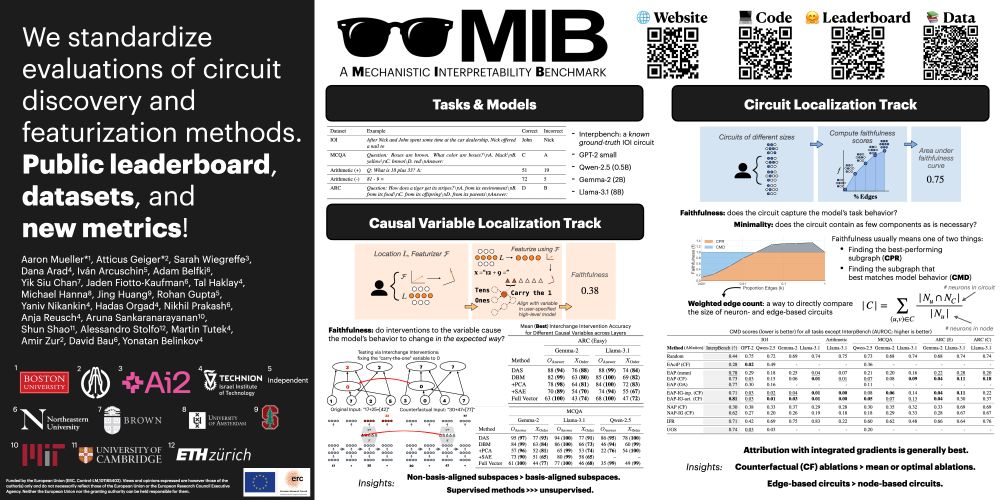

If you're working on:

🧠 Circuit discovery

🔍 Feature attribution

🧪 Causal variable localization

now’s the time to polish and submit!

Join us on Discord: discord.gg/n5uwjQcxPR

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

We're planning to keep this a living benchmark; come by and share your ideas/hot takes!

Catch me:

1️⃣ Presenting this paper👇 tomorrow 11am-1:30pm at East #1205

2️⃣ At the Actionable Interpretability @actinterp.bsky.social workshop on Saturday in East Ballroom A (I’m an organizer!)

We propose 😎 𝗠𝗜𝗕: a 𝗠echanistic 𝗜nterpretability 𝗕enchmark!

Come see our poster at 4pm on Tuesday in East Exhibition Hall A-B, E-1208!

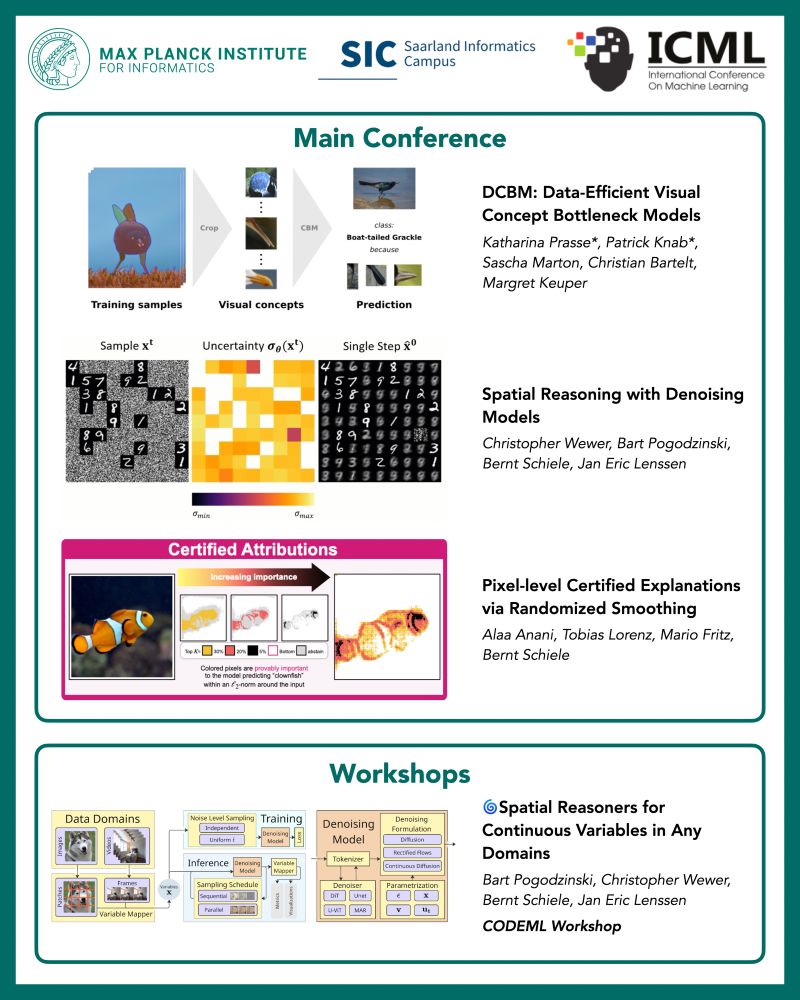

Congratulations to all the authors! To learn more, visit us at the poster sessions!

A 🧵with more details:

@icmlconf.bsky.social @mpi-inf.mpg.de

arxiv.org/abs/2402.02870

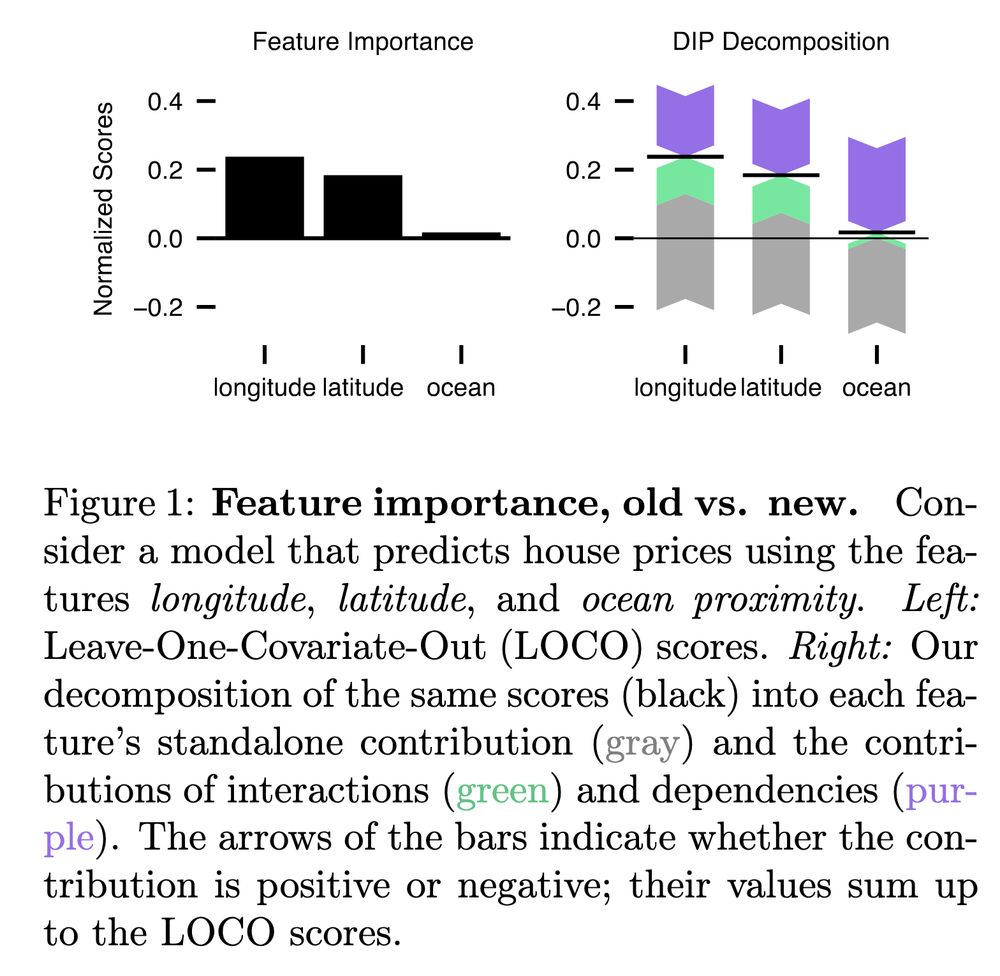

This led us to write a position paper (accepted at #ICML2025) that attempts to identify the problem and to propose a solution.

arxiv.org/abs/2402.02870

👇🧵

Everyone loves causal interp. It’s coherently defined! It makes testable predictions about mechanistic interventions! But what if we had a different objective: predicting model behavior not under mechanistic interventions, but on unseen input data?

Our Non-proceedings track is still open!

Paper submission deadline: July 18, 2025

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025

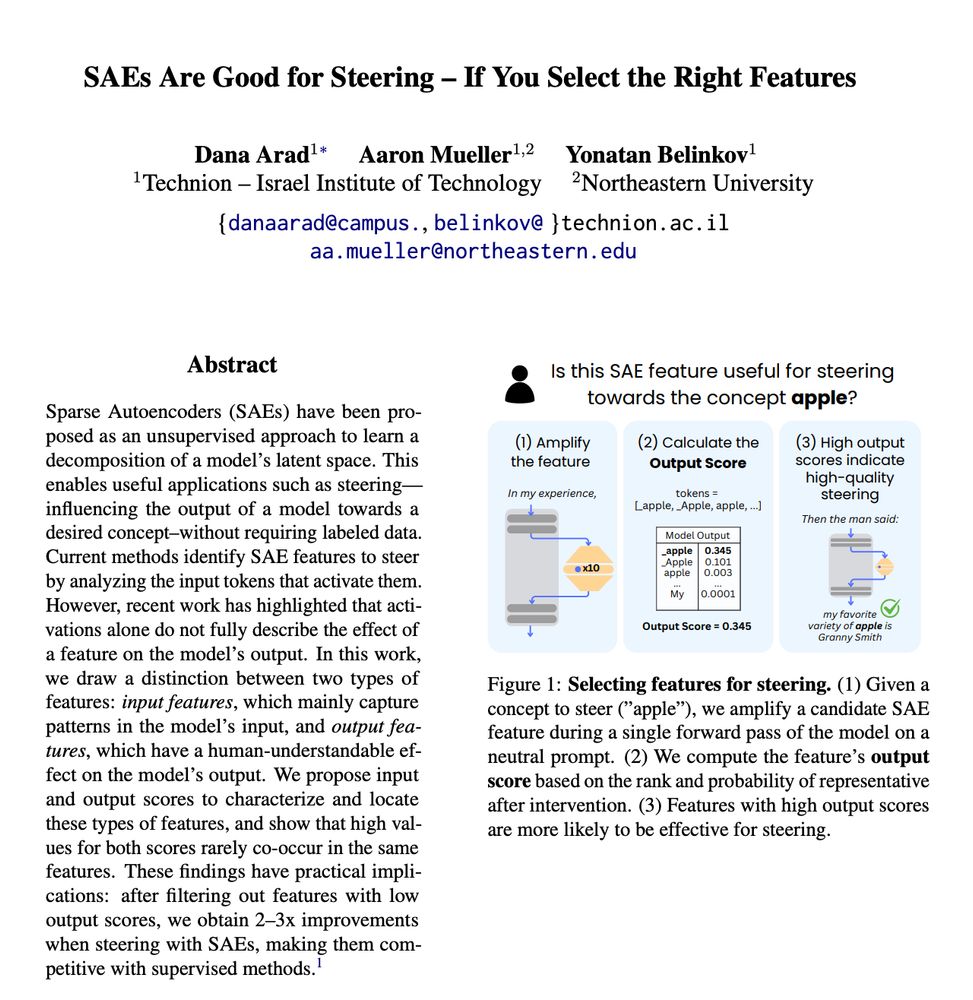

Check out our new preprint - "SAEs Are Good for Steering - If You Select the Right Features" 🧵

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!

In a new project led by Yaniv (@YNikankin on the other app), we investigate this gap from a mechanistic perspective and use our findings to close a third of it! 🧵

Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year at #BlackboxNLP, we're introducing a shared task to rigorously evaluate MI methods in language models 🧵

Proud of this collaborative effort toward a scientifically grounded understanding of generative AI.

@tuberlin.bsky.social @bifold.berlin @msftresearch.bsky.social @UCSD & @UCLA

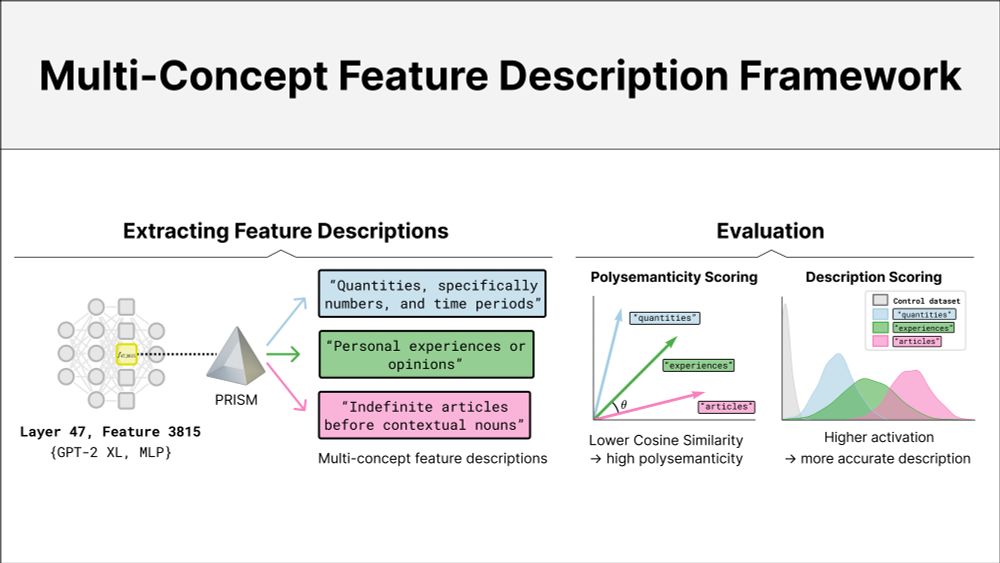

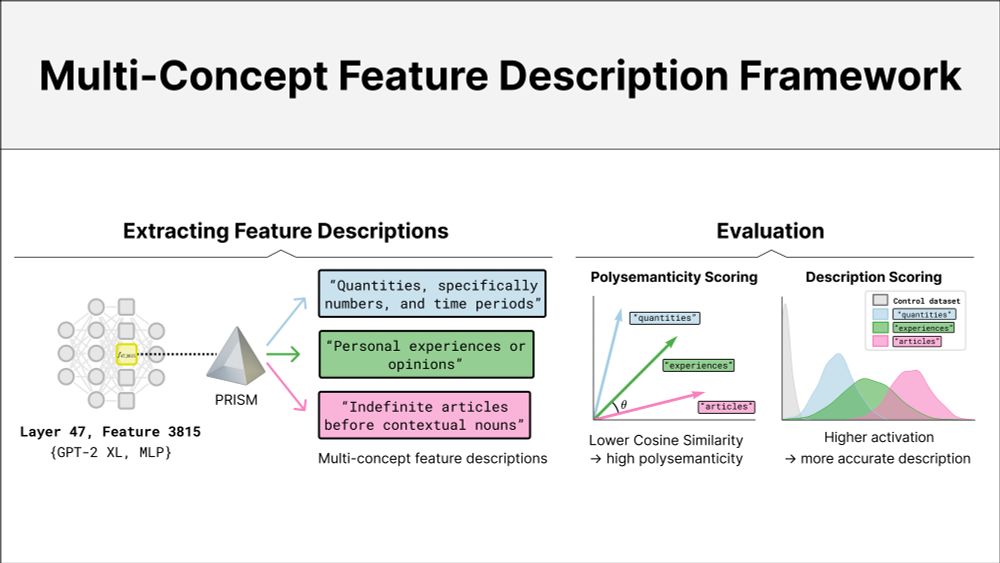

We introduce PRISM, a framework for extracting multi-concept feature descriptions to better understand polysemanticity.

📄 Capturing Polysemanticity with PRISM: A Multi-Concept Feature Description Framework

arxiv.org/abs/2506.15538

🧵 (1/7)

👉 arxiv.org/abs/2506.15538

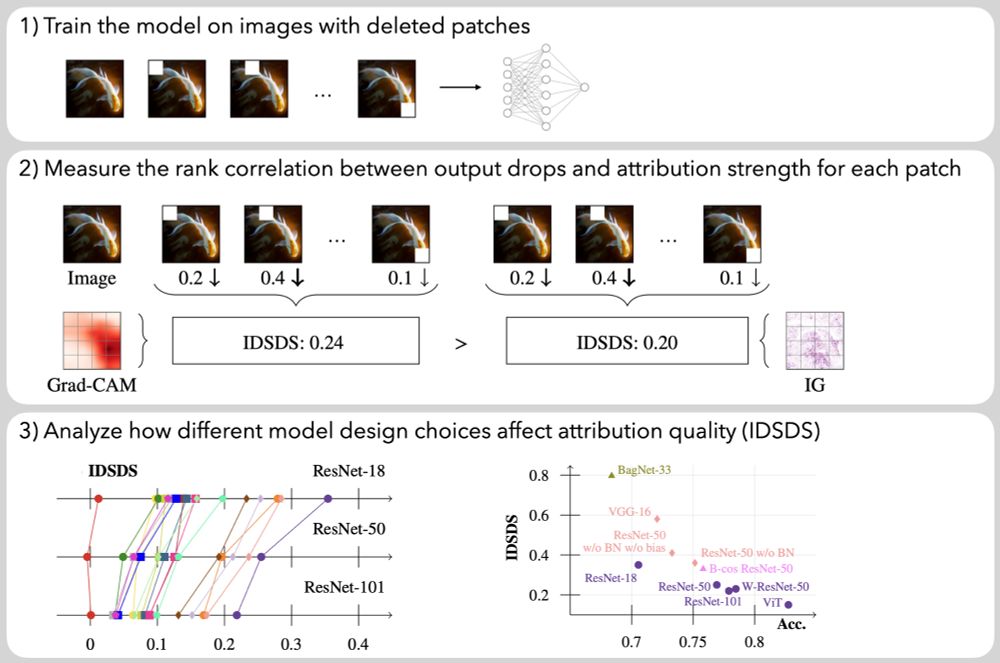

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds
