Ph.D. student at the University of Amsterdam. Previously at TUM.

https://vladimiryugay.github.io/

vladimiryugay.github.io/vot/

vladimiryugay.github.io/game/

Munich 2023 -> 8 months prep -> COVID -> ❌

Amsterdam 2024 -> 6 months prep -> COVID -> ❌

Leiden 2025 -> 6 months prep -> lfg ✅

github.com/VladimirYuga...

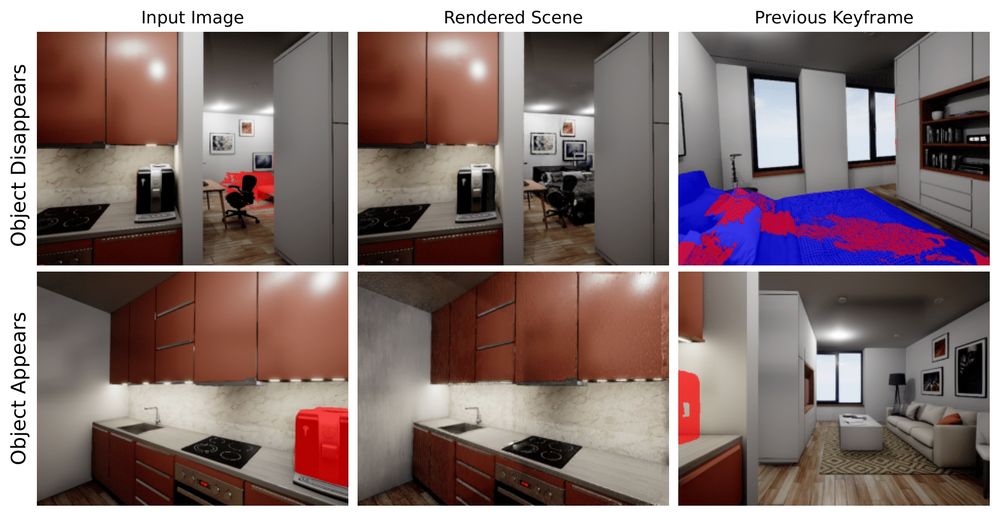

We vibe-coded hard to make the code as simple as possible. Here are some features you can seamlessly integrate into your 3D reconstruction pipeline right away:

7/7

6/7

5/7

vladimiryugay.github.io/magic_slam/i...

1/7
