Verna Dankers

@vernadankers.bsky.social

Postdoc at Mila & McGill University 🇨🇦 with a PhD in NLP from the University of Edinburgh 🏴 memorization vs generalization x (non-)compositionality. she/her 👩💻 🇳🇱

Thanks Jelle!! I'll make sure to prominently feature UvA in the talk tomorrow 😎 all discussions for Compositionality Decomposed were held back at Nikhef!

August 20, 2025 at 3:57 AM

Thanks Jelle!! I'll make sure to prominently feature UvA in the talk tomorrow 😎 all discussions for Compositionality Decomposed were held back at Nikhef!

Main takeaway: students excel both by mimicking teachers and deviating from them & be careful with distillation, students may inherit teachers’ pros and cons. Work done during a Microsoft internship. Find me 👋🏼 Weds at 11 in poster session 4 arxiv.org/pdf/2502.01491 (5/5)

arxiv.org

July 27, 2025 at 3:37 PM

Main takeaway: students excel both by mimicking teachers and deviating from them & be careful with distillation, students may inherit teachers’ pros and cons. Work done during a Microsoft internship. Find me 👋🏼 Weds at 11 in poster session 4 arxiv.org/pdf/2502.01491 (5/5)

...identifying scenarios in which students *outperform* teachers through SeqKD’s amplified denoising effects. Lastly, we establish that through AdaptiveSeqKD (briefly finetuning your teacher prior to distillation with HQ data) you can strongly decrease memorization and hallucinations. (4/5)

July 27, 2025 at 3:37 PM

...identifying scenarios in which students *outperform* teachers through SeqKD’s amplified denoising effects. Lastly, we establish that through AdaptiveSeqKD (briefly finetuning your teacher prior to distillation with HQ data) you can strongly decrease memorization and hallucinations. (4/5)

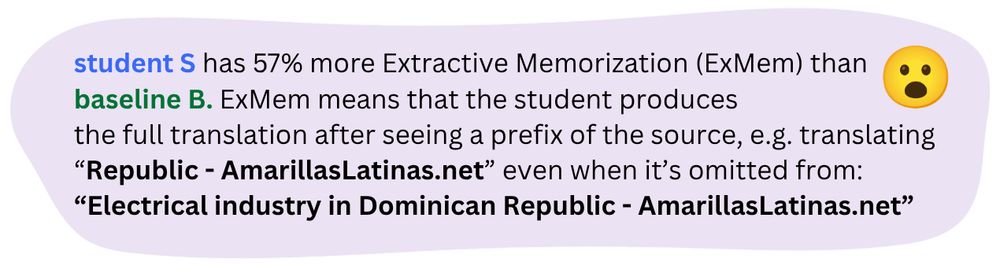

Students showed increases in verbatim memorization, large increases in extractive memorization, and also hallucinated much more than baselines! We go beyond average-case performance through additional analyses of how T, B, and S perform on data subgroups... (3/5)

July 27, 2025 at 3:37 PM

Students showed increases in verbatim memorization, large increases in extractive memorization, and also hallucinated much more than baselines! We go beyond average-case performance through additional analyses of how T, B, and S perform on data subgroups... (3/5)

SeqKD is still widely applied in NMT to obtain strong & small deployable systems, but your student models do not only inherit good things from teachers. In our short paper, we contrast baselines trained on the original corpus to students (trained on teacher-generated targets). (2/5)

July 27, 2025 at 3:37 PM

SeqKD is still widely applied in NMT to obtain strong & small deployable systems, but your student models do not only inherit good things from teachers. In our short paper, we contrast baselines trained on the original corpus to students (trained on teacher-generated targets). (2/5)