Speakers: @jessicahullman.bsky.social @doloresromerom.bsky.social @tpimentel.bsky.social

Call for Contributions: Oct 15

(And if you’re at COLM, come hear about it on Tuesday in sessions Spotlight 2 & Poster 2!)

arxiv.org/abs/2507.01234

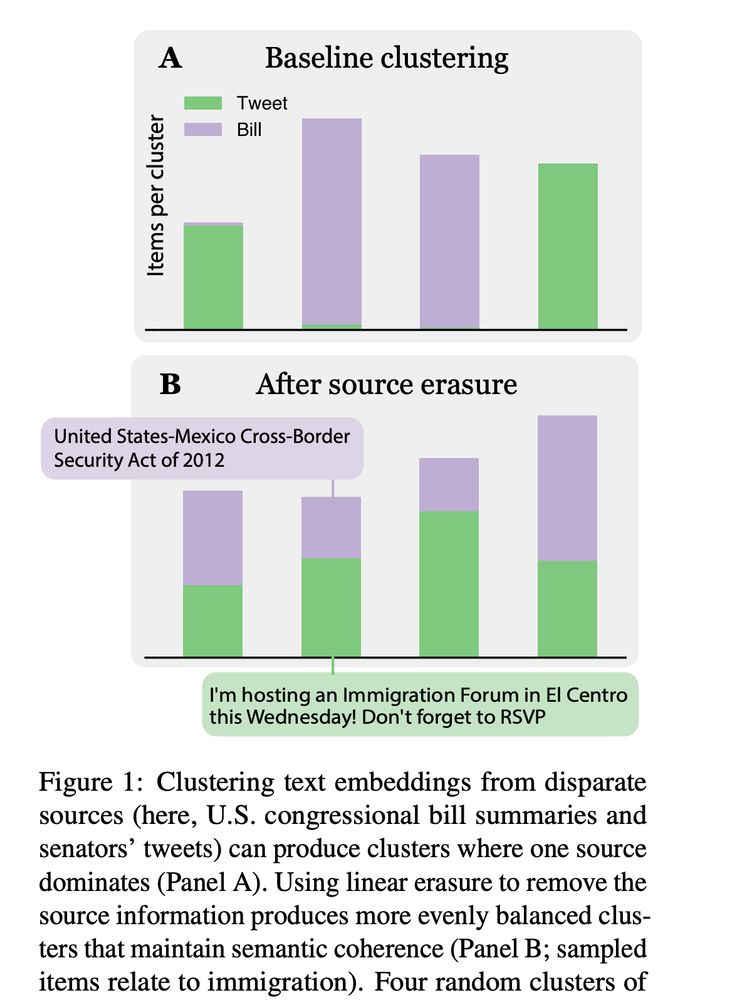

Turns out there's an easy fix🧵

Main takeaway: In mechanistic interpretability, we need assumptions about how DNNs encode concepts in their representations (e.g., the linear representation hypothesis). Without them, we can claim any DNN implements any algorithm!

With the amazing: @philipwitti.bsky.social, @gregorbachmann.bsky.social and @wegotlieb.bsky.social,

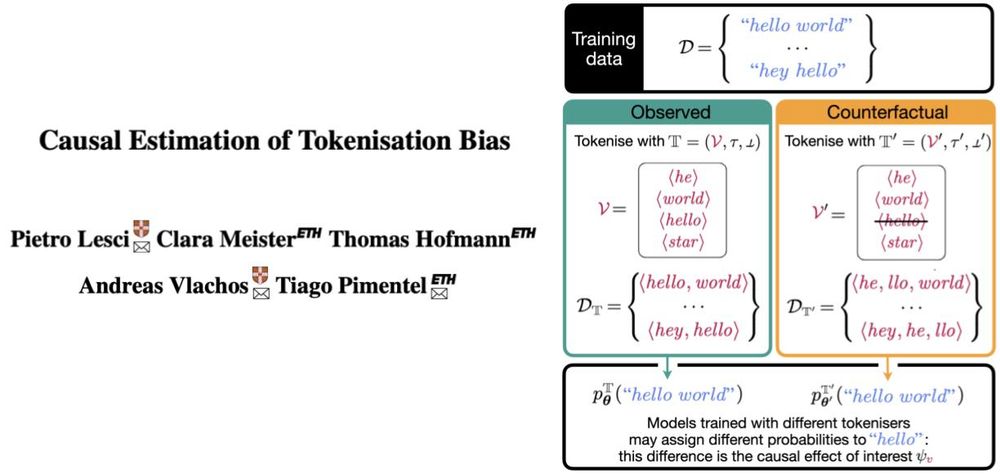

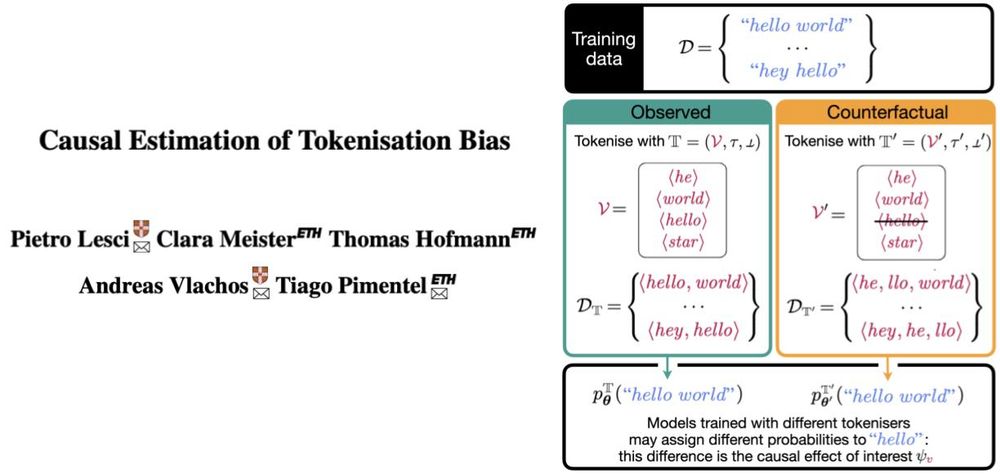

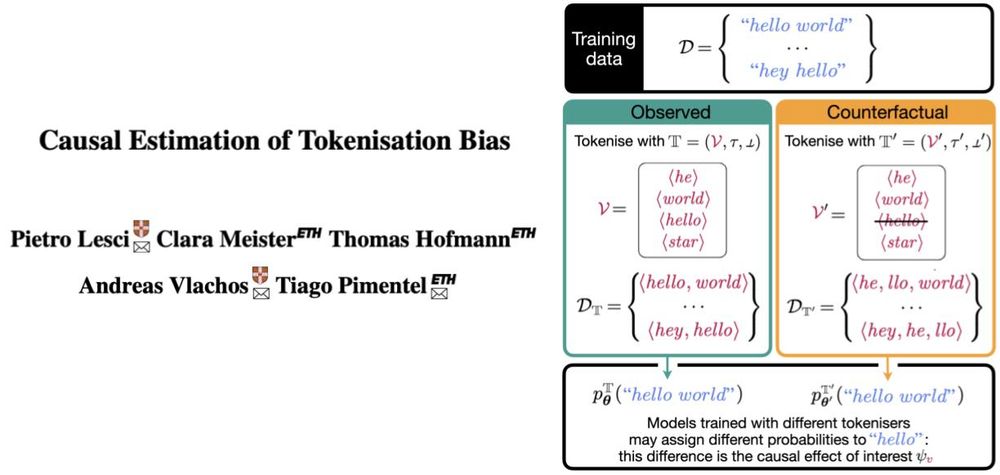

@cuiding.bsky.social, Giovanni Acampa, @alexwarstadt.bsky.social, @tamaregev.bsky.social

New paper + @philipwitti.bsky.social

@gregorbachmann.bsky.social :) arxiv.org/abs/2412.15210

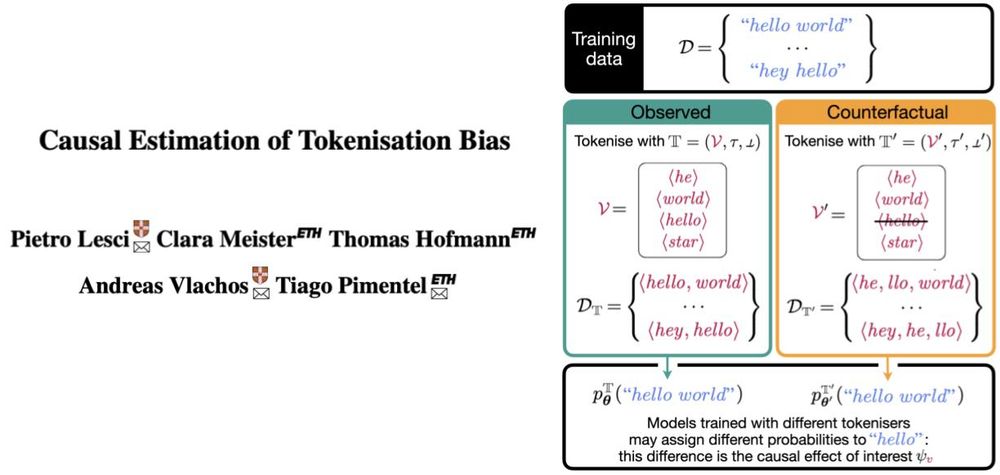

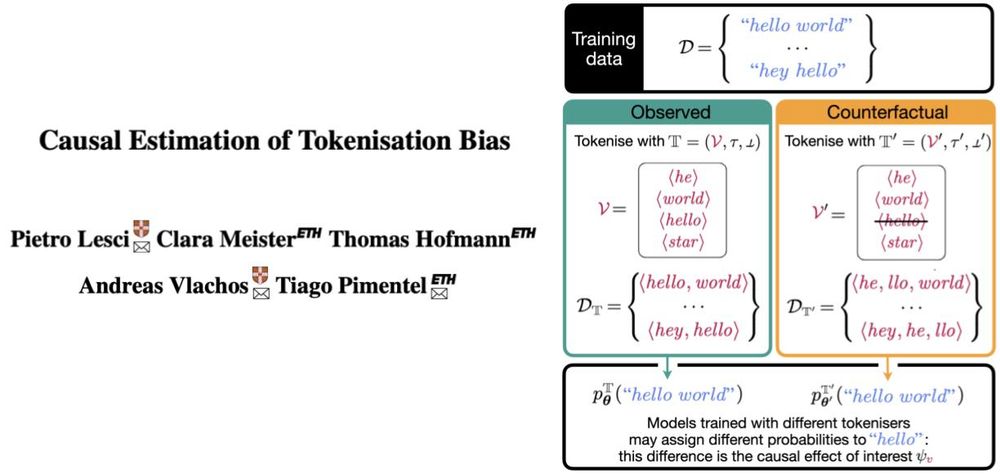

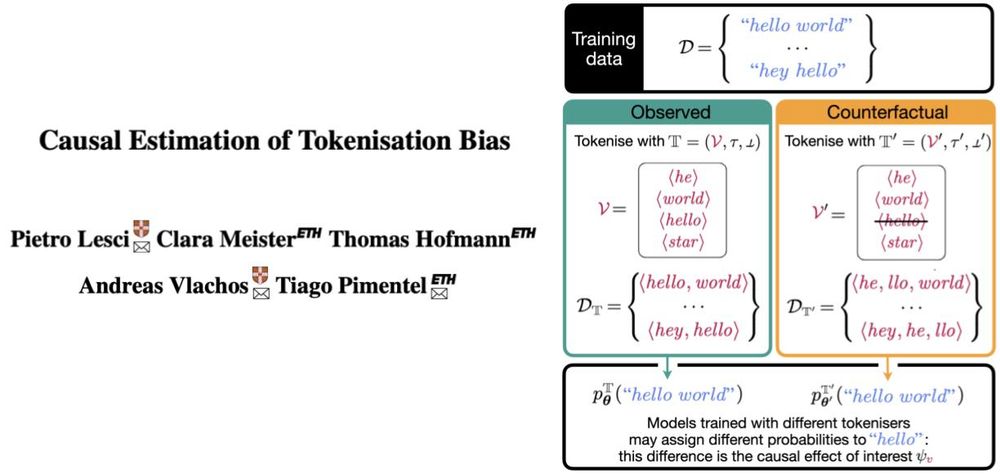

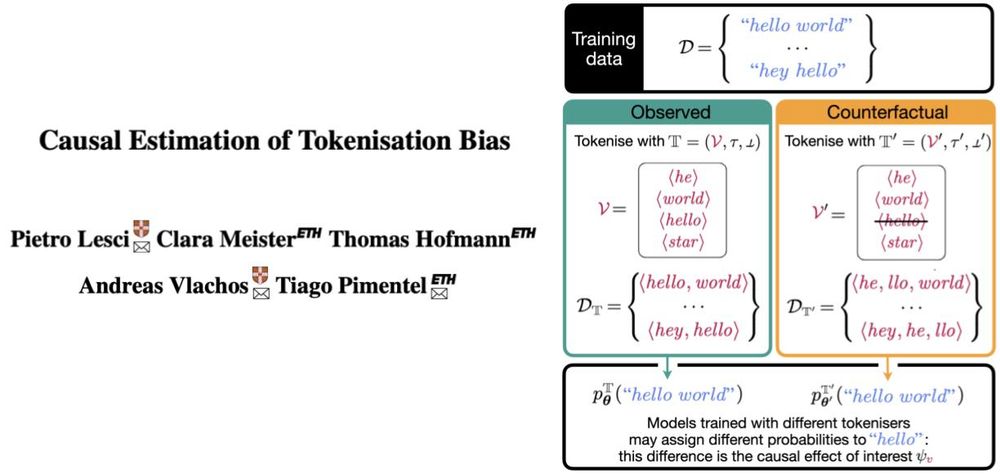

Let’s talk about it and why it matters👇

@aclmeeting.bsky.social #ACL2025 #NLProc

1. Undry

2. Dry

Definitely an approach that more papers studying effects could incorporate to better clarify the phenomenon they are studying.

* Text generation: tokenisation bias ⇒ length bias 🤯

* Psycholinguistics: tokenisation bias ⇒ systematically biased surprisal estimates 🫠

* Interpretability: tokenisation bias ⇒ biased logits 🤔

github.com/tpimentelms/...

I thank my amazing co-authors Clara Meister, Thomas Hofmann, @tpimentel.bsky.social, and my great advisor and co-author @andreasvlachos.bsky.social!

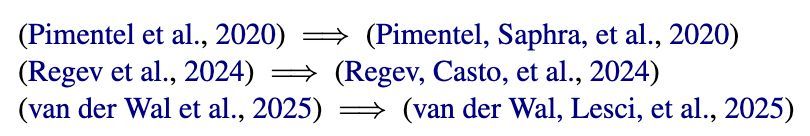

"On Linear Representations and Pretraining Data Frequency in Language Models":

We provide an explanation for when & why linear representations form in large (or small) language models.

Led by @jackmerullo.bsky.social, w/ @nlpnoah.bsky.social & @sarah-nlp.bsky.social

I gave the award lecture on LLMs’ Utilisation of Parametric & Contextual Knowledge at #ECIR2025 today (slides: isabelleaugenstein.github.io/slides/2025_...)

www.bcs.org/membership-a...
