- Logit-lens attacks

- Delta attacks

- Perturbation attacks

Critical for real-world deployment where adversaries actively try to extract "unlearned" info.

7/8
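For readers unfamiliar with the first attack type: a logit-lens probe projects intermediate hidden states through the unembedding matrix and checks whether the "unlearned" token still ranks highly at some layer. Below is a minimal NumPy sketch of that idea. The function names, the toy shapes, and the top-k success criterion are assumptions for illustration, not the evaluation protocol from the thread.

```python
import numpy as np

def logit_lens_ranks(hidden_states, unembed, target_id):
    """Rank of target_id (0 = most likely) at each layer.

    hidden_states: iterable of (d_model,) vectors, one per layer (toy stand-in).
    unembed: (d_model, vocab) unembedding matrix.
    """
    ranks = []
    for h in hidden_states:
        logits = h @ unembed  # project the hidden state into vocabulary space
        ranks.append(int((logits > logits[target_id]).sum()))
    return ranks

def logit_lens_attack_succeeds(hidden_states, unembed, target_id, top_k=5):
    """Assumed criterion: the target token is in the top-k at any layer."""
    ranks = logit_lens_ranks(hidden_states, unembed, target_id)
    return any(r < top_k for r in ranks)
```

Under this criterion, an edit that only suppresses the token at the final layer would still leak if an earlier layer ranks it near the top.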

✅Superior unlearning effectiveness

✅Better model integrity preservation

✅Stronger resistance to extraction attacks

✅Robust across different hyperparameters

6/8

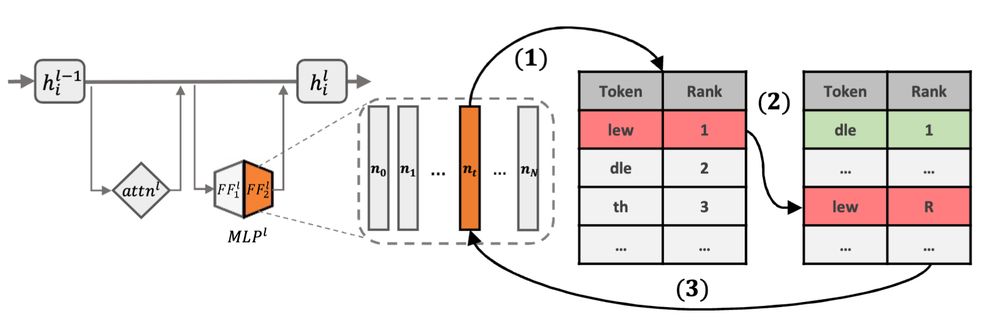

1. Localization: Find layers & neurons most responsible for generating target tokens

2. Editing: Modify neurons in vocabulary space to demote sensitive tokens

3. Preservation: Keep general model knowledge intact

All without gradients!

4/8
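The three steps above can be illustrated with a toy NumPy sketch: project neurons into vocabulary space, pick the ones that rank the target token highest, lower that token's logit until it reaches a chosen rank, and map the result back. This is a simplified illustration under stated assumptions, not the REVS implementation: the helper names, the rank-based neuron selection, the midpoint rule for the new logit, and the pseudo-inverse back-projection are all choices made for the example, and ties between logits are ignored.

```python
import numpy as np

def token_rank(logits, token_id):
    """Rank of token_id in logits (0 = highest logit)."""
    return int((logits > logits[token_id]).sum())

def localize(neurons, unembed, token_id, n_select):
    """Pick the n_select neurons whose vocabulary projection ranks the token highest."""
    ranks = [token_rank(n @ unembed, token_id) for n in neurons]
    return np.argsort(ranks, kind="stable")[:n_select]

def demote(neuron, unembed, token_id, desired_rank):
    """Lower the token's logit in vocabulary space, then map back to neuron space.

    Assumes desired_rank is smaller than the vocabulary size.
    No gradients are involved: the edit is a direct change to one logit.
    """
    logits = neuron @ unembed
    if token_rank(logits, token_id) >= desired_rank:
        return neuron  # already at or below the desired rank
    others = np.sort(np.delete(logits, token_id))[::-1]  # other logits, descending
    upper = others[desired_rank - 1]
    lower = others[desired_rank] if desired_rank < len(others) else upper - 2.0
    edited = logits.copy()
    edited[token_id] = (upper + lower) / 2.0  # place the token between two neighbours
    return edited @ np.linalg.pinv(unembed)   # back-project (approximate in general)
```

In this toy setup only the target token's logit changes, which is the sense in which other knowledge is preserved. In a real model the hidden size is smaller than the vocabulary, so the back-projection is lossy and the resulting rank would need to be re-checked.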

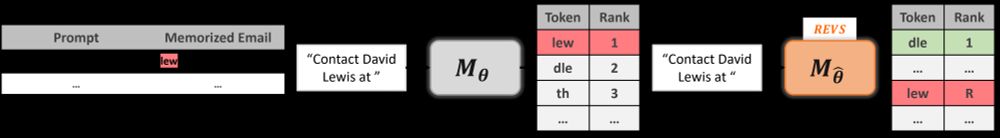

LMs can regurgitate private info from their training data.

Prompt: "Contact David Lewis at" → "lewis.david@email.com"

This can violate privacy regulations like GDPR and poses serious security risks.

2/8

REVS: Unlearning Sensitive Information in LMs via Rank Editing in the Vocabulary Space.

LMs memorize and leak sensitive data (emails, SSNs, URLs) from their training data.

We propose a surgical method to unlearn it.

🧵👇w/ @boknilev.bsky.social @mtutek.bsky.social

1/8
