Be part of a European pilot to improve AI tools that help detect and analyse disinformation.

Your feedback will guide the next generation of trustworthy, ethical AI for media.

Sign up now 👉 forms.gle/S6RvKp91u5hQ...

#AI4TRUST #HorizonEU

Available for download.

CC @sympap.bsky.social @ivansrba.bsky.social

📂 Data & Submission Instructions

Available in the official GitHub repository:

👉 github.com/mever-team/m...

📅 Important Dates

Data release: June 20

Runs due: September 15

Paper submission: October 8

Workshop: October 25–26

Website 👇

🔍 Follow for updates on #OpenScience & #FoundationModels.

Registration required. Hope to see you then!

It summarises the state of the science on AI capabilities and risks, and how to mitigate those risks. 🧵

Full Report: assets.publishing.service.gov.uk/media/679a0c...

1/21

👉 More information on topics of interest, dates, and submissions: mad2025.aimultimedialab.ro

ℹ️ The workshop is supported by @vera-ai.bsky.social.

Let's connect!

🗺️ Paper, code, and demo: nicolas-dufour.github.io/plonk

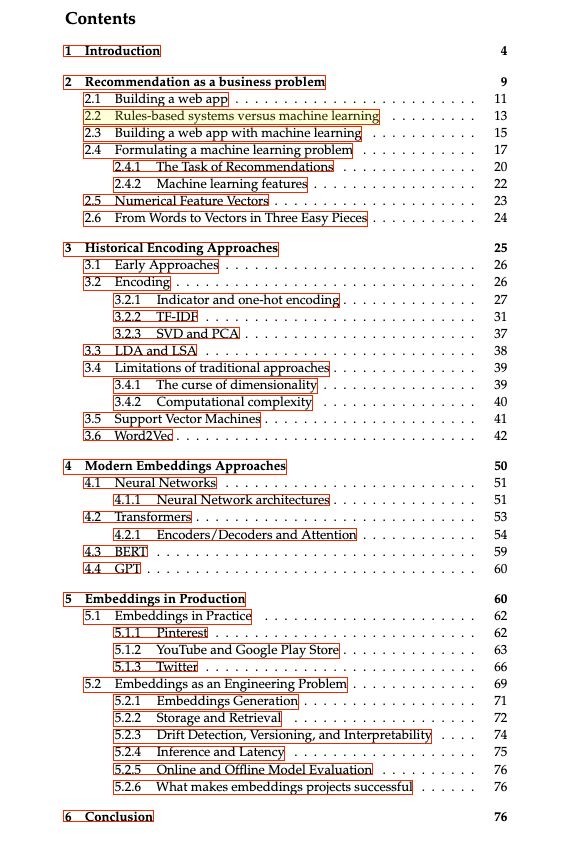

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

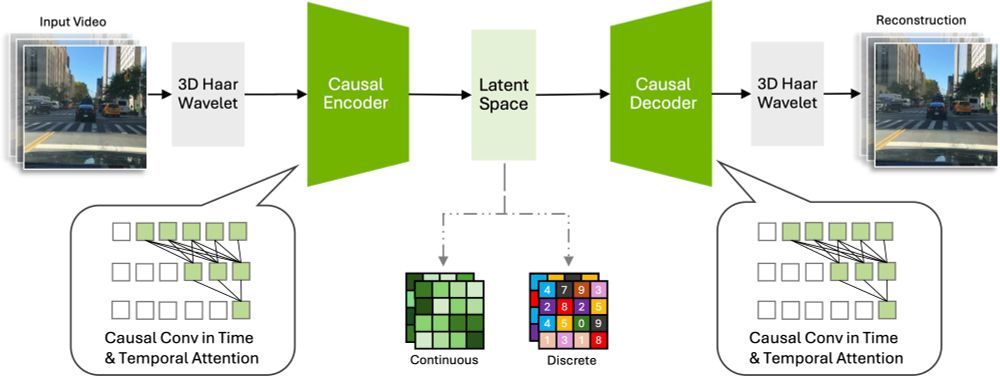

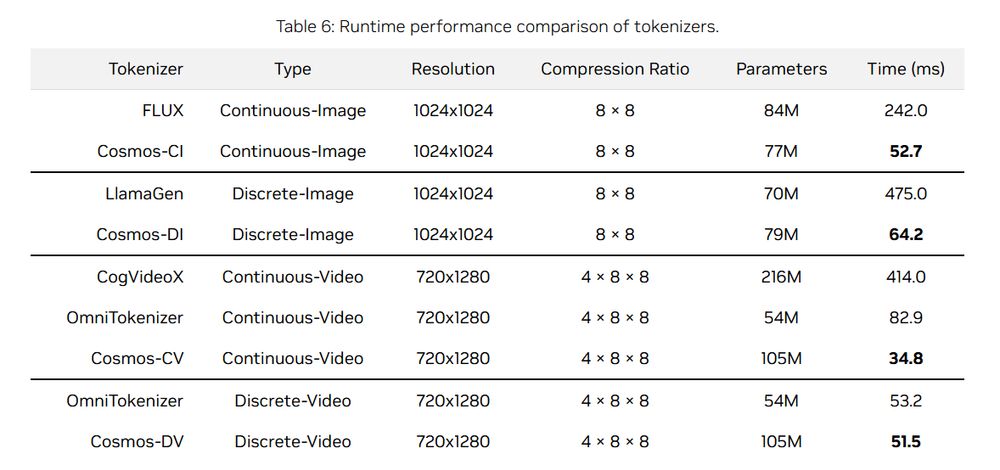

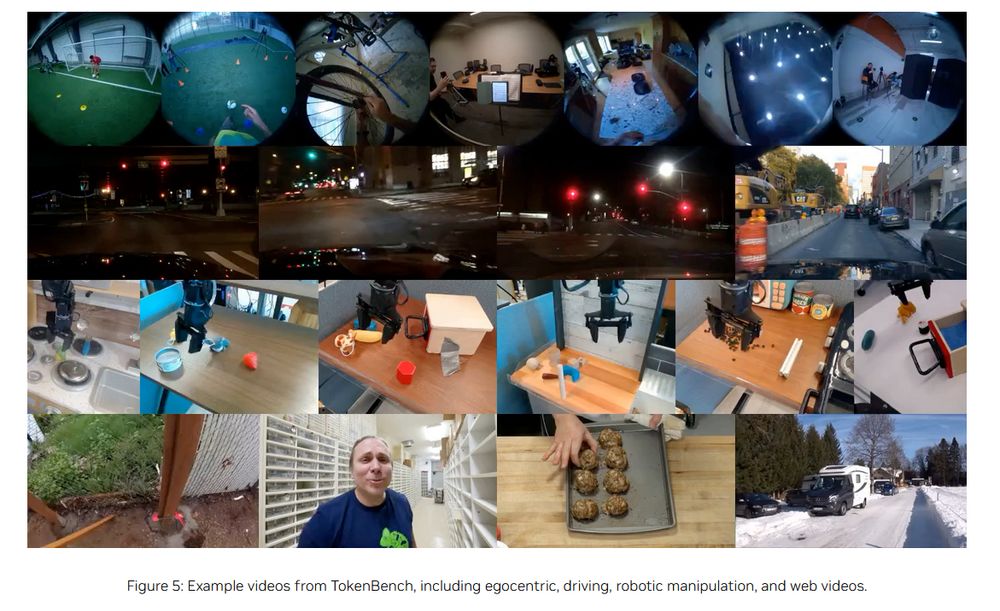

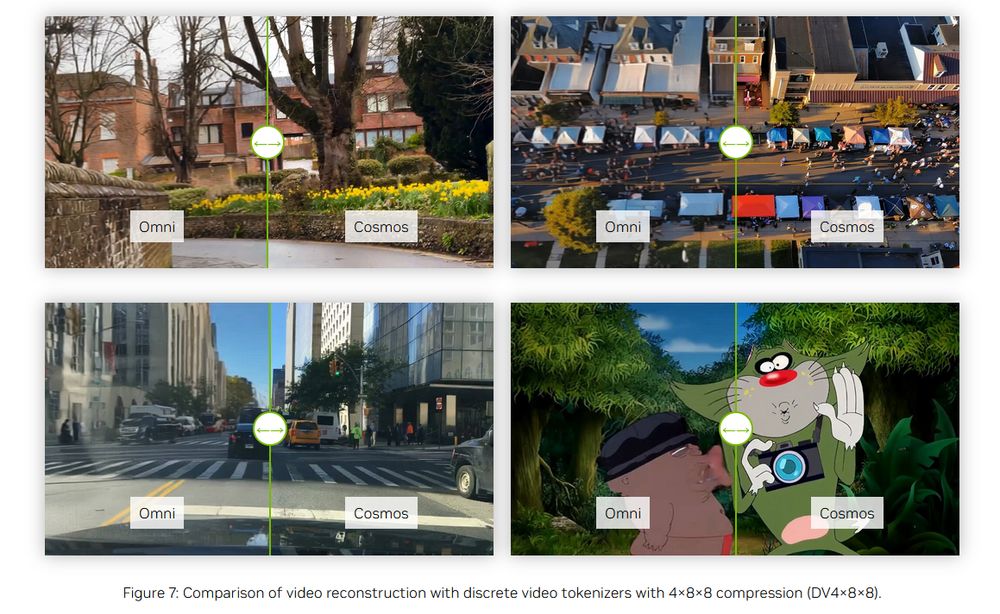

Cosmos is trained on diverse high-resolution images and long videos, scales well for both discrete and continuous tokens, generalizes to multiple domains (robotics, driving, egocentric, ...), and has excellent runtime.

github.com/NVIDIA/Cosmo...