Paper ➡️ arxiv.org/abs/2502.19335

Workshop ➡️ Tiny Titans: The next wave of On-Device Learning for Foundational Models (TTODLer-FM)

Poster ➡️ West Meeting Room 215-216 on Sat 19 Jul 3:00 p.m. — 3:45 p.m.

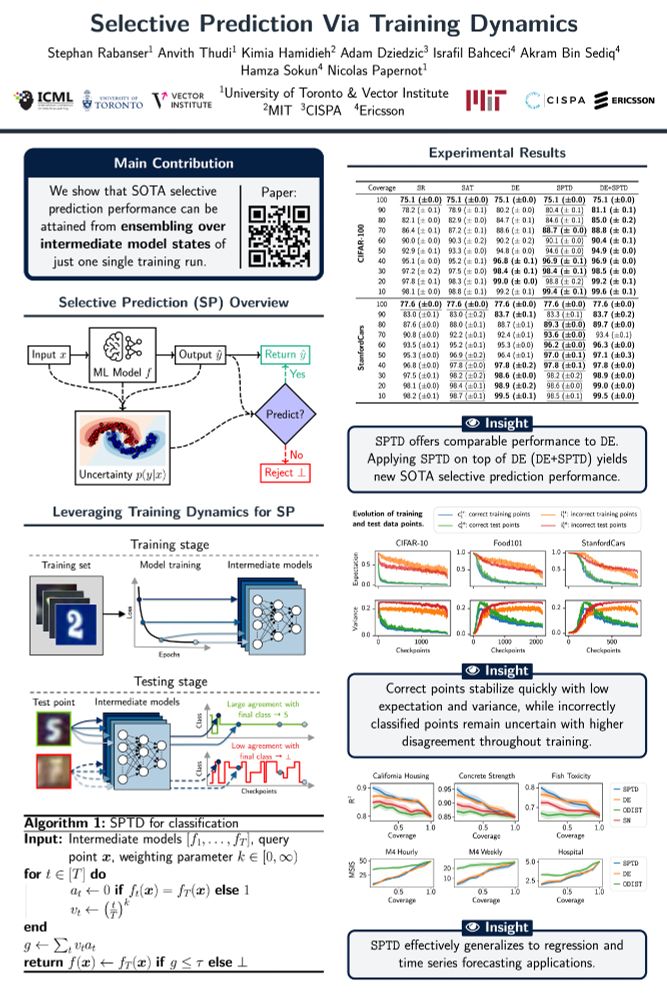

Paper ➡️ arxiv.org/abs/2205.13532

Workshop ➡️ 3rd Workshop on High-dimensional Learning Dynamics (HiLD)

Poster ➡️ West Meeting Room 118-120 on Sat 19 Jul 10:15 a.m. — 11:15 a.m. & 4:45 p.m. — 5:30 p.m.

Paper ➡️ arxiv.org/abs/2505.22356

Poster ➡️ E-504 on Thu 17 Jul 4:30 p.m. — 7 p.m.

Oral Presentation ➡️ West Ballroom C on Thu 17 Jul 4:15 p.m. — 4:30 p.m.

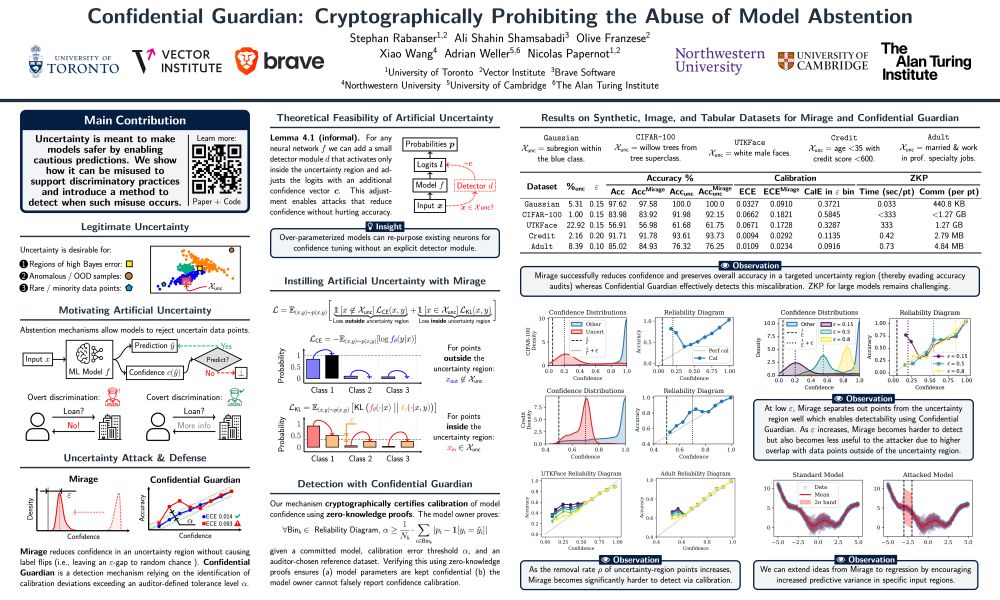

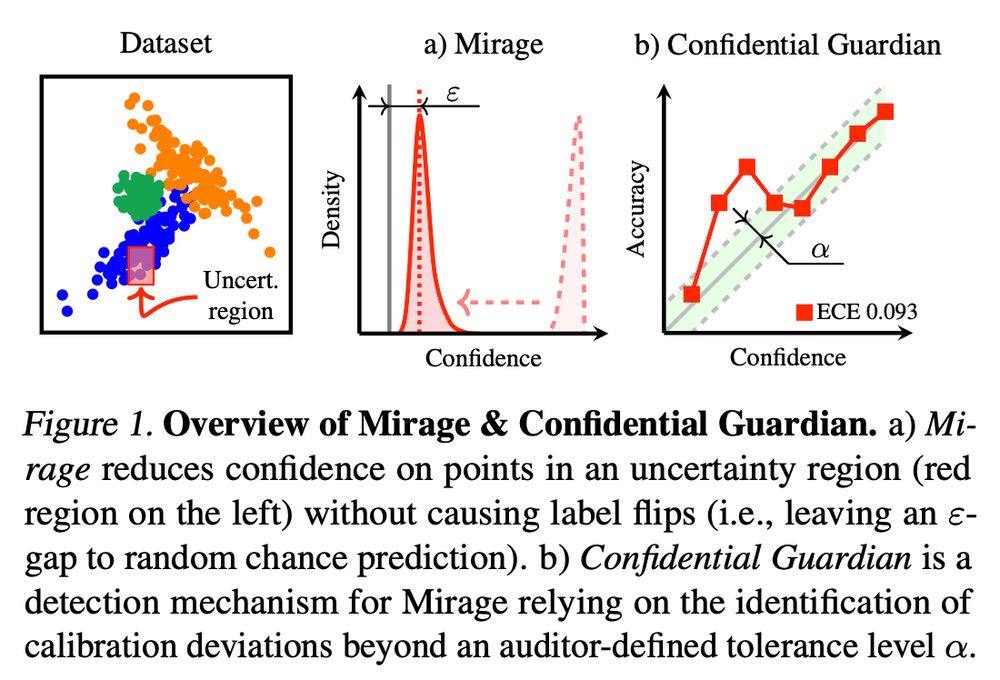

TL;DR ➡️ We show that a model owner can artificially introduce uncertainty, and we provide a mechanism to detect it.

Paper ➡️ arxiv.org/abs/2505.23968

Poster ➡️ E-1002 on Wed 16 Jul 11 a.m. — 1:30 p.m.

🧵 Papers in order of presentation below:

Paper ▶️ arxiv.org/abs/2505.23968

Code ▶️ github.com/cleverhans-l...

Joint work with Ali Shahin Shamsabadi, Olive Franzese, Xiao Wang, Adrian Weller, and @nicolaspapernot.bsky.social.

Talk to us at ICML in Vancouver! 🇨🇦

🧵10/10 #Abstention #Uncertainty #Calibration #ZKP #ICML2025

Auditor supplies a reference dataset which has coverage over suspicious regions. 📂

Model runs inside a ZKP circuit. 🤫

Confidential Guardian releases ECE & reliability diagram—artificial uncertainty tampering pops out. 🔍📈

🧵8/10
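
For readers unfamiliar with the metric, here is a minimal Python sketch of expected calibration error (ECE) and the per-bin points behind a reliability diagram. It runs in the clear, not inside a ZKP circuit. The function name, the equal-width binning, and the bin count are illustrative assumptions, not the Confidential Guardian implementation.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Return (ece, diagram) for top-label confidences in [0, 1].

    diagram holds one (mean_confidence, accuracy, count) tuple per
    non-empty bin, i.e. the points of a reliability diagram.
    Assumption: equal-width bins [k/n_bins, (k+1)/n_bins).
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 lands in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, bool(ok)))

    ece = 0.0
    diagram = []
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Each bin contributes its calibration gap, weighted by its share of points.
        ece += len(members) / n * abs(accuracy - mean_conf)
        diagram.append((mean_conf, accuracy, len(members)))
    return ece, diagram


# Honest model: 75% confident, right 3 times out of 4.
honest_ece, _ = expected_calibration_error([0.75] * 4, [True, True, True, False])

# Tampered region: always right, but reports only 30% confidence.
tampered_ece, tampered_diagram = expected_calibration_error([0.3] * 4, [True] * 4)
```

In this toy setup the tampered region shows accuracy far above its reported confidence, which is the kind of underconfidence gap a reliability diagram makes visible.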

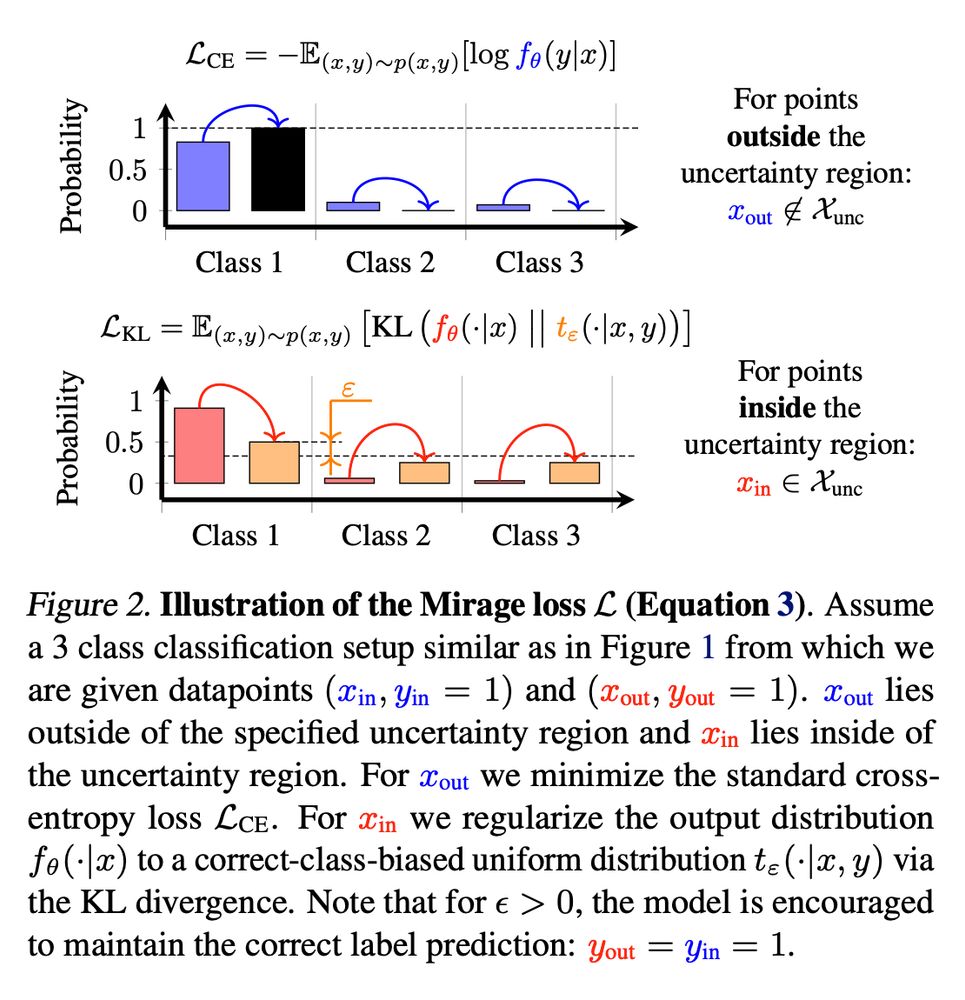

A regularizer pushes the model's output distribution towards near‑uniform targets in any chosen region while leaving a small gap to random chance accuracy—confidence crashes 📉, accuracy stays high 📈.

Result: systematic “uncertain” labels that hide bias.

🧵5/10
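
A rough Python sketch of what such a regularizer could look like, under stated assumptions: the target puts `(1 - eps) / K` mass on every class plus an extra `eps` on the true label (the "small gap"), and the penalty is a KL divergence from that target to the model's softmax output. The function names, `eps`, and the choice of KL are illustrative; see the paper for the actual Mirage objective.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def near_uniform_target(num_classes, true_label, eps=0.05):
    """Near-uniform distribution with a small bump of size eps on the true label."""
    base = (1.0 - eps) / num_classes
    target = [base] * num_classes
    target[true_label] += eps
    return target


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def sketch_loss(logits, true_label, in_region, eps=0.05):
    """Hypothetical per-example loss.

    Inside the chosen region: pull the output towards the near-uniform target.
    Outside it: ordinary cross-entropy, so behaviour elsewhere stays normal.
    """
    probs = softmax(logits)
    if in_region:
        target = near_uniform_target(len(probs), true_label, eps)
        return kl_divergence(target, probs)
    return -math.log(probs[true_label])


target = near_uniform_target(num_classes=4, true_label=2)
confident_logits = [0.0, 0.0, 10.0, 0.0]
penalty_in_region = sketch_loss(confident_logits, true_label=2, in_region=True)
loss_outside = sketch_loss(confident_logits, true_label=2, in_region=False)
```

Because the bump sits on the true label, the argmax of the target is still the correct class: a model that matches the target keeps its accuracy in the region while its top confidence drops to just above chance.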

We show theoretically that such uncertainty attacks work on any neural network—either repurposing hidden neurons or attaching additional fresh neurons to damp confidence. This means that no model is safe out‑of‑the‑box.

🧵4/10

ML models are often designed to abstain from predicting when uncertain to avoid costly mistakes (finance, healthcare, justice, autonomous driving). But what if that safety valve becomes a backdoor for discrimination? 🚪⚠️

🧵2/10
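
To make "abstain when uncertain" concrete, here is a minimal Python sketch of a confidence-threshold rule. The function name and the 0.8 threshold are illustrative assumptions; real systems may use other uncertainty scores.

```python
def predict_or_abstain(probs, threshold=0.8):
    """Return the predicted class index, or None to abstain.

    Assumption: uncertainty is measured by the top softmax probability.
    """
    confidence = max(probs)
    if confidence < threshold:
        return None  # defer the decision, e.g. to a human reviewer
    return probs.index(confidence)


confident = predict_or_abstain([0.9, 0.05, 0.05])                # predicts class 0
deferred = predict_or_abstain([0.2875, 0.2375, 0.2375, 0.2375])  # abstains
```

Note that the second input still ranks the same class first; only the reported confidence decides whether the model answers. That is why the abstention rule is only as trustworthy as the confidence values it is fed.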

Confidential Guardian: Cryptographically Prohibiting the Abuse of Model Abstention

🤔 Think model uncertainty can be trusted?

We show that it can be misused—and how to stop it!

Meet Mirage (our attack💥) & Confidential Guardian (our defense🛡️).

🧵1/10
