interested in language, cognition, and ai

soniamurthy.com

Preprint: arxiv.org/abs/2411.04427

Also, check out our feature in the @kempnerinstitute.bsky.social Deeper Learning Blog! bit.ly/417WVDL

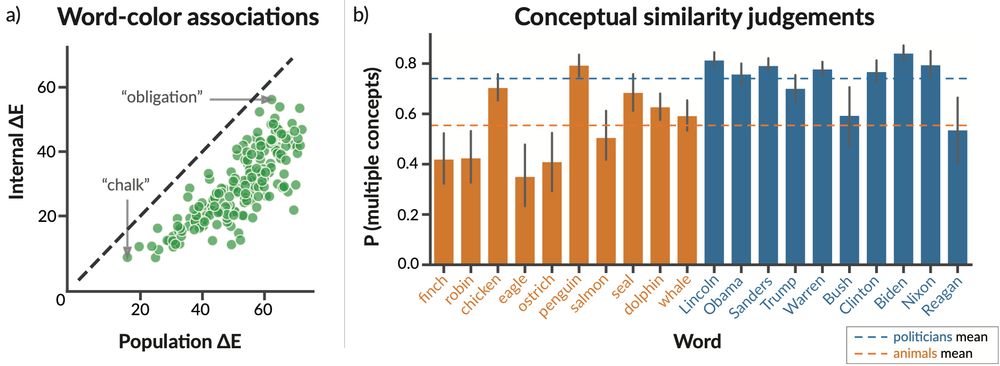

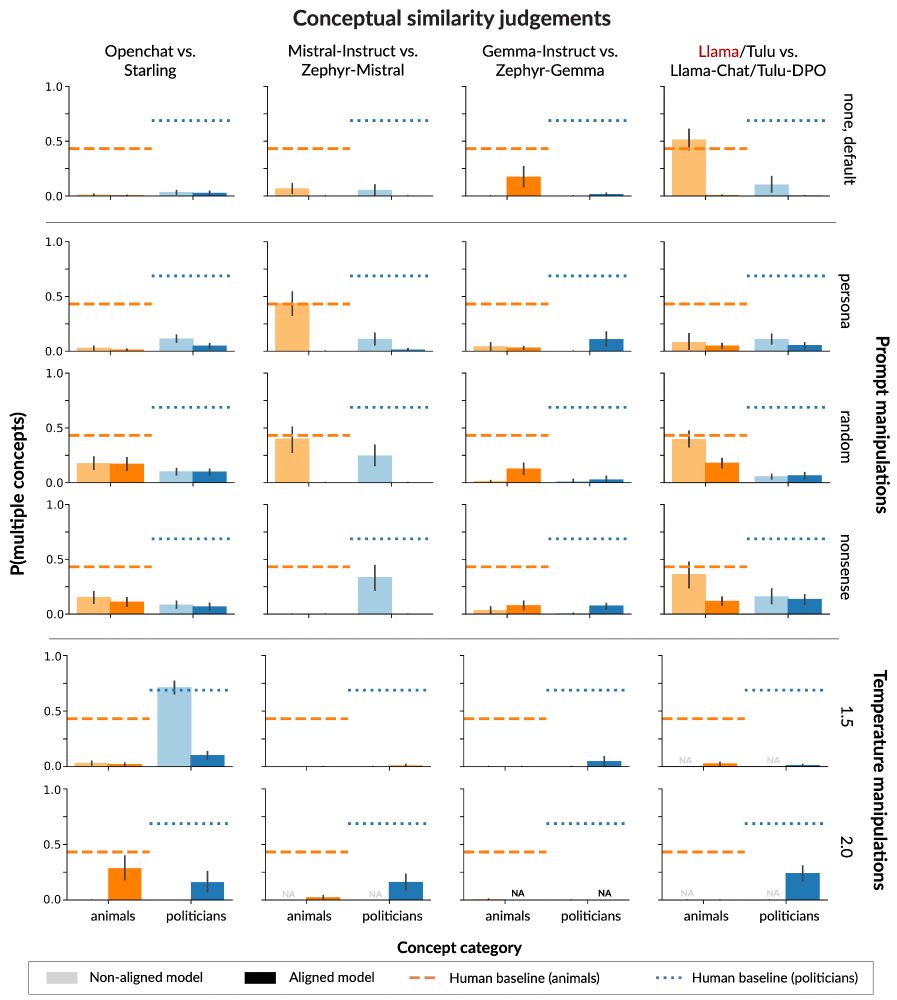

* no model reaches human-like diversity of thought.

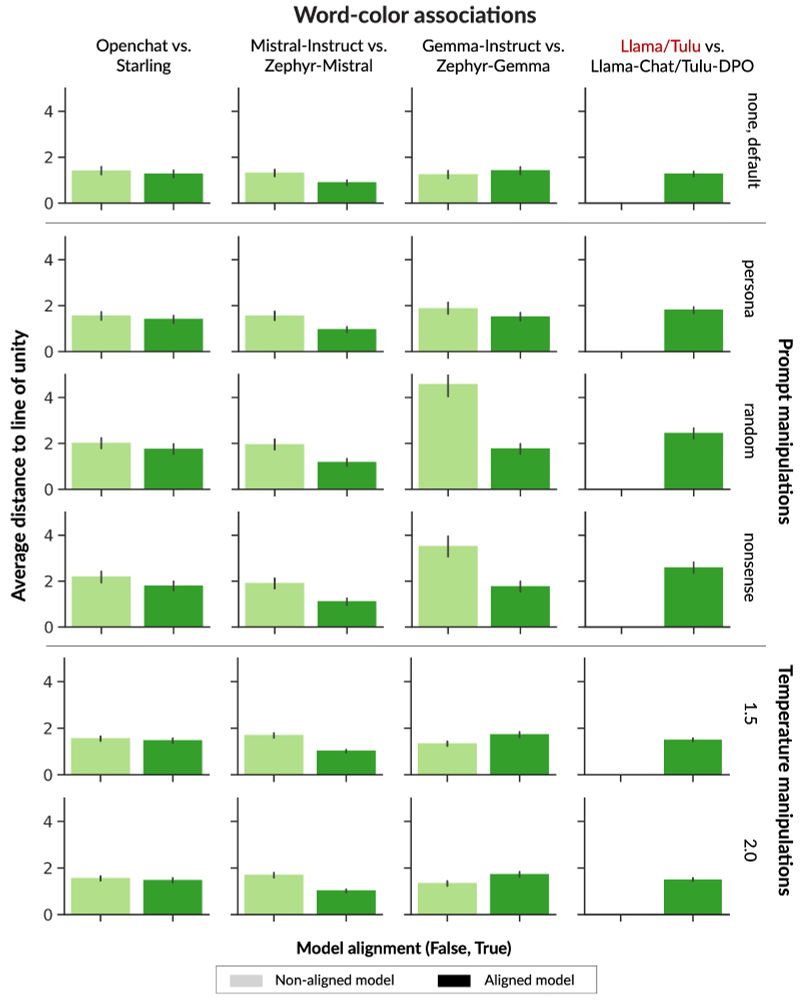

* aligned models show LESS conceptual diversity than their instruction fine-tuned counterparts.
