We study the optimization landscape of $\ell_0$-Bregman relaxations, which naturally arise when one optimizes non-quadratic fidelity terms coupled with the $\ell_0$-norm.

1/n

Optimization landscape of $\ell_0$-Bregman relaxations

https://arxiv.org/abs/2511.12157

Alas, the classic model of minimax optimal methods is overly conservative: it overfits by tuning to its worst case.

We found a path forward 1/

All the info is here: nkeriven.github.io/malaga/

Details and application procedure below ⬇️ Reach out if you have any questions!

blog.iclr.cc/2025/08/26/p...

mathsdata2025.github.io

EPFL, Sept 1–5, 2025

Speakers:

Bach @bachfrancis.bsky.social

Bandeira

Mallat

Montanari

Peyré @gabrielpeyre.bsky.social

For PhD students & early-career researchers

Apply before May 15!

Keynote talks by @jmairal.bsky.social, @tonysf.bsky.social, @samuelvaiter.com, Saverio Salzo and Luce Brotcorne. Contributed talks are welcome!

🗓️ Apply by: March 16, 2025

📍 University of Genoa (in-person only)

💡 More info: malga.unige.it/education/sc...

Learning Theory for Kernel Bilevel Optimization

w/ @fareselkhoury.bsky.social E. Pauwels @michael-arbel.bsky.social

We provide generalization error bounds for bilevel optimization problems where the inner objective is minimized over an RKHS.

arxiv.org/abs/2502.08457

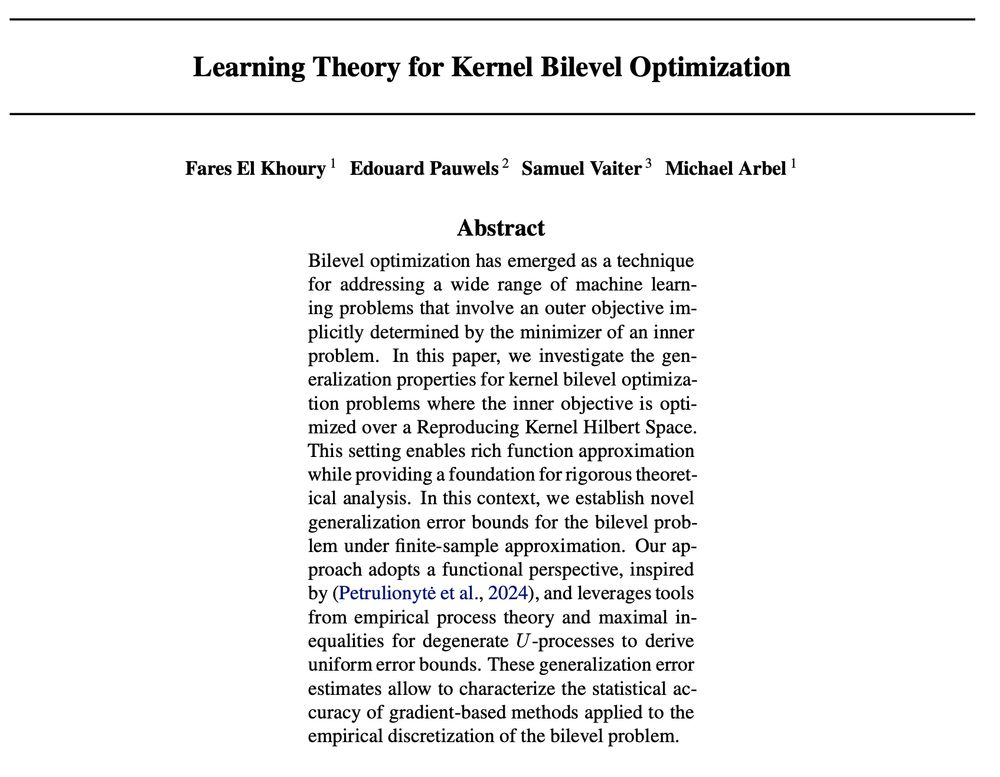

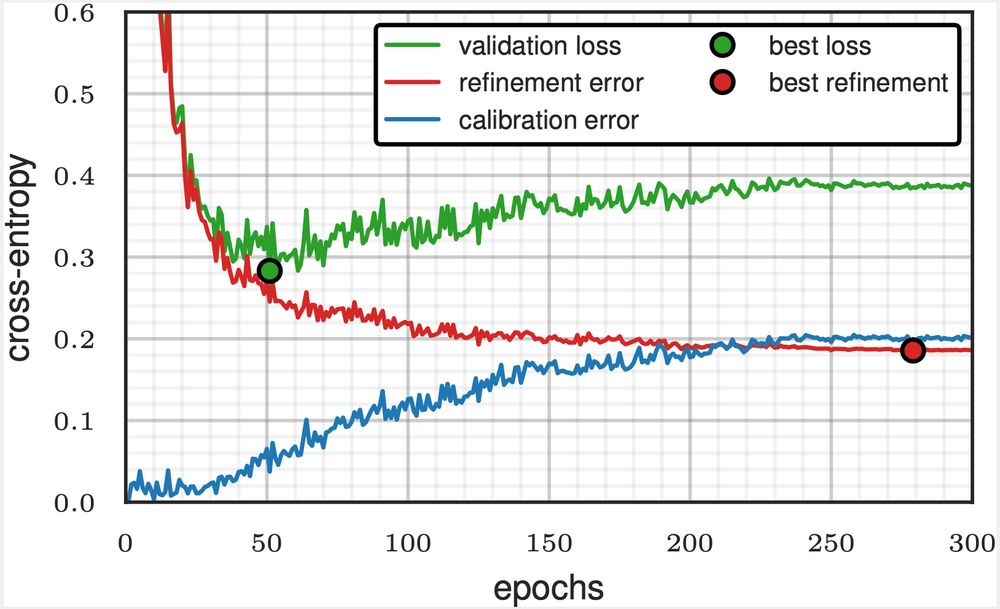

arxiv.org/abs/2502.04889

With @dholzmueller.bsky.social, Michael I. Jordan, and @bachfrancis.bsky.social, we propose a method that integrates with any model and boosts classification performance across tasks.

• Large Language Models for French medical texts

• Evaluating digital medical devices: statistics and causal inference
