PhD at the University of Copenhagen 🇩🇰

Member of @belongielab.org, Danish Data Science Academy, and Pioneer Centre for AI 🤖

🔗 sebulo.github.io/

Excited to join @qualcomm.bsky.social in Amsterdam as a research intern in the Model Efficiency group, where I’ll be working on quantization and compression of machine learning models.

I’ll return to Copenhagen in December to start the final year of my PhD.

TensorGRaD reduces this overhead by up to 75% (𝑑𝑎𝑟𝑘 𝑔𝑟𝑒𝑒𝑛 𝑏𝑎𝑟𝑠), without hurting accuracy.

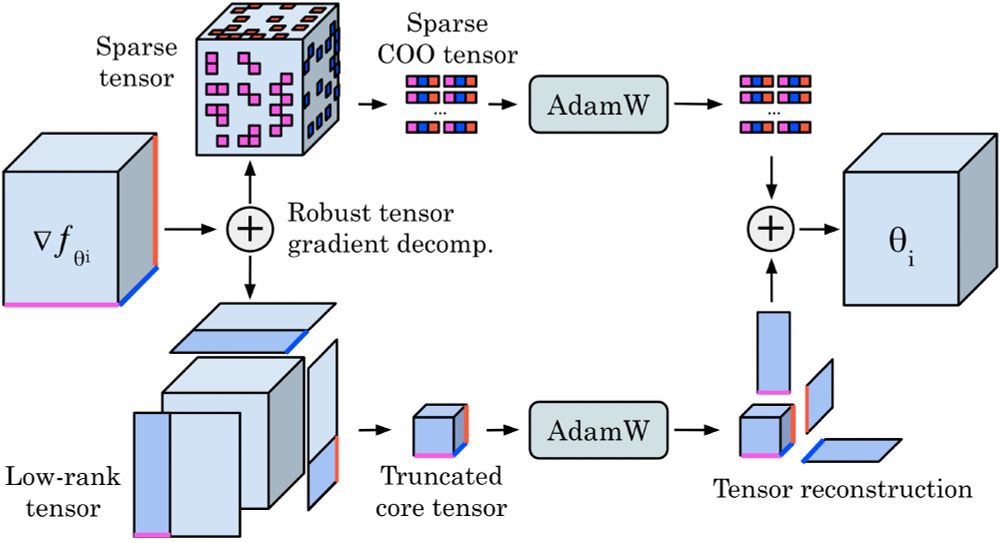

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 𝟕𝟓%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re = 10⁵).
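To make the low-rank + sparse idea concrete, here is a rough NumPy sketch on a plain 2D gradient matrix. It is not the TensorGRaD implementation (which works on gradient tensors). The function name `sparse_plus_low_rank`, the top-k rule for picking the sparse entries, and the truncated SVD for the low-rank part are all assumptions chosen for illustration.

```python
import numpy as np

def sparse_plus_low_rank(grad, rank, sparsity):
    """Split `grad` into a sparse part and a low-rank part (illustrative only).

    Assumption: the sparse part keeps the largest-magnitude entries, and the
    low-rank part is a truncated SVD of whatever remains.
    """
    k = max(1, int(sparsity * grad.size))
    # Flat indices of the k largest-magnitude entries.
    idx = np.argpartition(np.abs(grad).ravel(), -k)[-k:]
    sparse = np.zeros_like(grad)
    sparse.flat[idx] = grad.flat[idx]

    # Rank-`rank` approximation of the remainder.
    u, s, vt = np.linalg.svd(grad - sparse, full_matrices=False)
    low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank]
    return low_rank, sparse

rng = np.random.default_rng(0)
grad = rng.standard_normal((64, 32))
low_rank, sparse = sparse_plus_low_rank(grad, rank=4, sparsity=0.05)

# Numbers an optimizer would track: two rank-4 factors plus the sparse values,
# instead of one entry per element of the full gradient.
compressed = 4 * (64 + 32) + np.count_nonzero(sparse)
print(compressed, "vs", grad.size)
```

Optimizer states kept only for the compact factors and the few sparse entries are where the memory saving comes from. The actual ranks and sparsity levels are the authors' choices and are not shown here.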

For the next five months, I’ll be a Visiting Student Researcher at Anima Anandkumar's group at Caltech, collaborating with her team and Jean Kossaifi from NVIDIA on Efficient Machine Learning and AI4Science.

🗓️ West Ballroom A-D #6104

🕒 Thu, 12 Dec, 4:30 p.m. – 7:30 p.m. PST

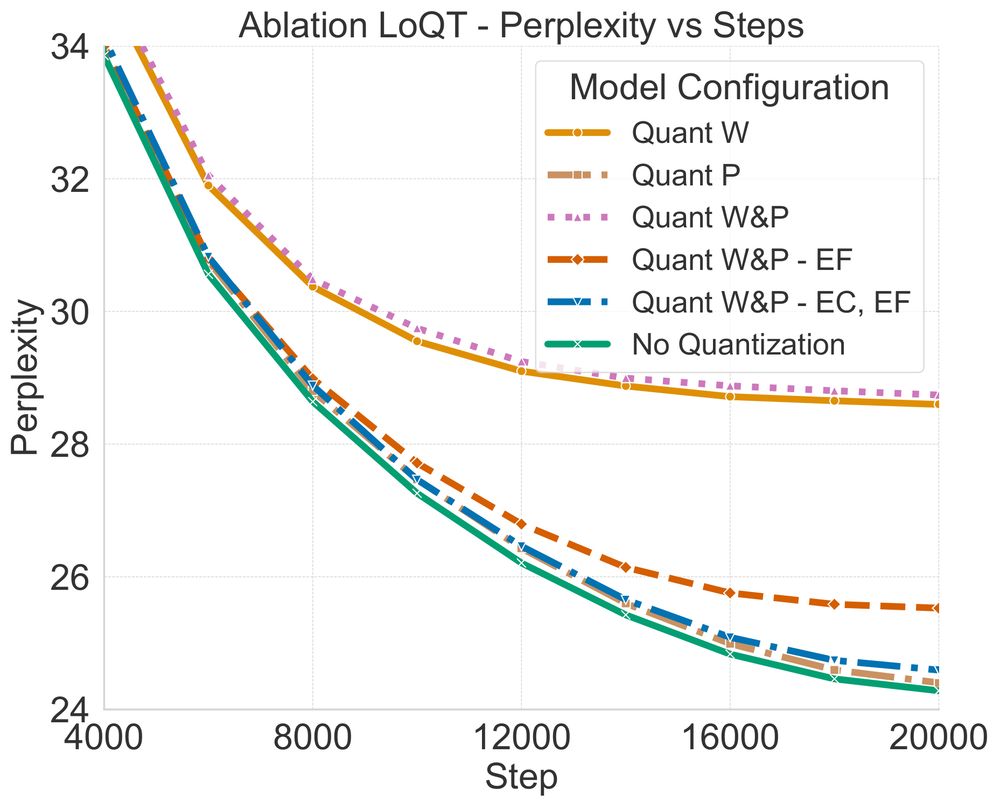

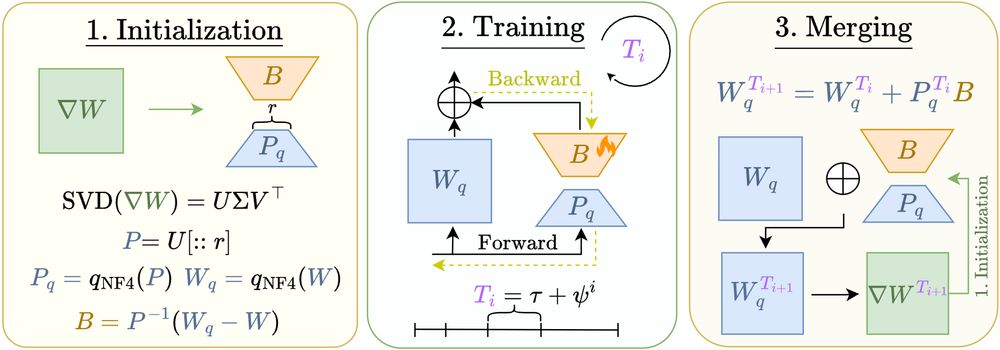

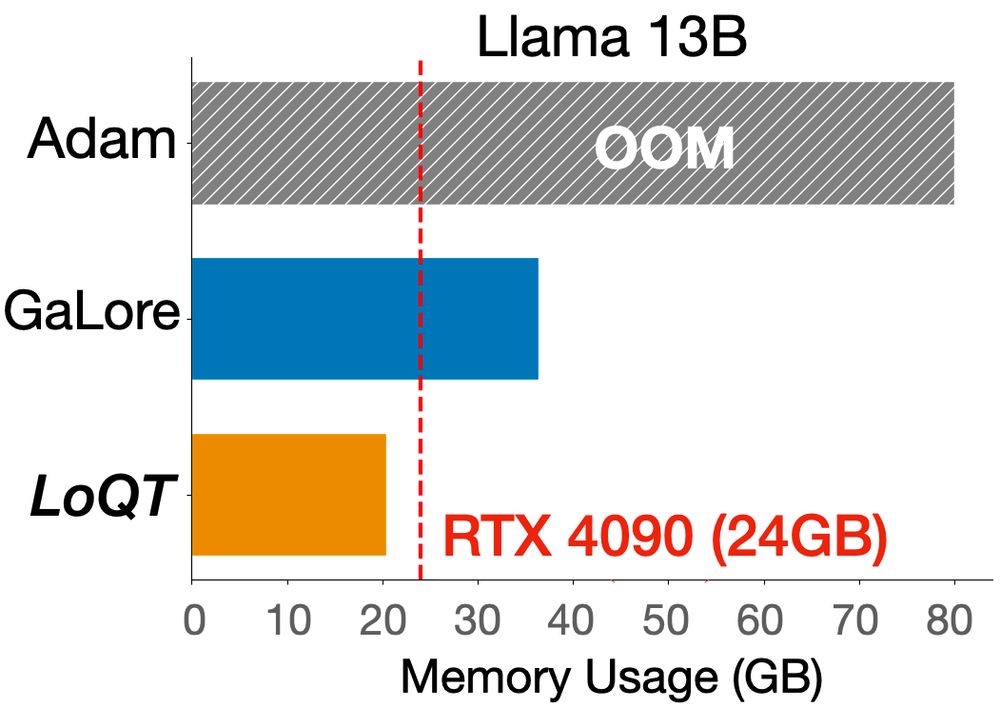

@madstoftrup.bsky.social and I are presenting LoQT: Low-Rank Adapters for Quantized Pretraining: arxiv.org/abs/2405.16528

#Neurips2024

#NeurIPS2024

Thanks to the Pioneer Centre for AI and @ellis.eu for sponsoring.

@neuripsconf.bsky.social

#neurips2024

This research was funded by @DataScienceDK and @AiCentreDK, and is a collaboration between @DIKU_Institut, @ITUkbh, and @csaudk

We show LoQT works for both LLM pre-training and downstream task adaptation📊.

3/4

This reduces memory for gradients, optimizer states, and weights—even when pretraining from scratch.

2/4

You now can, with LoQT: Low-Rank Adapters for Quantized Pretraining! arxiv.org/abs/2405.16528

1/4
