Our model MM-FSS leverages 3D, 2D, & text modalities for robust few-shot 3D segmentation—all without extra labeling cost. 🤩

arxiv.org/pdf/2410.22489

More details👇

It turns out that Bayesian reasoning has some surprising answers: no cognitive biases needed! Let's quickly explore this fascinating paradox ☺️

#Whisper #AI #Shqip #Albanian #Kosovo

Grateful for the incredible support of my PhD students, postdocs, collaborators and family. 🙏

Details: www.dtu.dk/english/news...

Photo: Niels Hougaard

fnzhan.com/Evolutive-Re...

🗓️ West Ballroom A-D #6104

🕒 Thu, 12 Dec, 4:30 p.m. – 7:30 p.m. PST

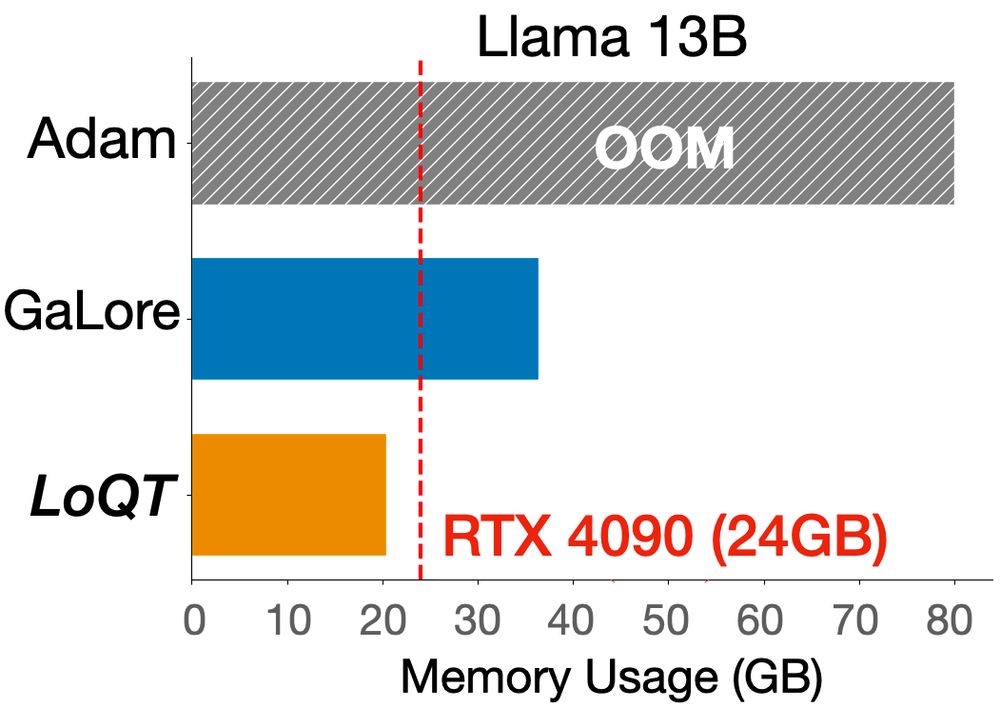

@madstoftrup.bsky.social and I are presenting LoQT: Low-Rank Adapters for Quantized Pretraining: arxiv.org/abs/2405.16528

#Neurips2024

Zhenggang Tang, Yuchen Fan, Dilin Wang, Hongyu Xu, Rakesh Ranjan, Alexander Schwing, Zhicheng Yan

tl;dr: DUSt3R extended with multi-view decoder blocks and Cross-Reference-View attention blocks

arxiv.org/abs/2412.06974

(1/5)

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

You now can, with LoQT: Low-Rank Adapters for Quantized Pretraining! arxiv.org/abs/2405.16528

1/4
