🔗 https://roeiherz.github.io/

📍Bay area 🇺🇲

This presents a discrepancy between the models’ high-level pre-training objective and the need for robotic models to predict low-level actions.

Our project page and code will be released soon!

Team: w/ Dantong Niu, Yuvan Sharma, Haoru Xue, Giscard Biamby, Junyi Zhang, Ziteng Ji, and Trevor Darrell.

I also want to give special thanks to the amazing Trevor Darrell and Deva Ramanan for their invaluable guidance.

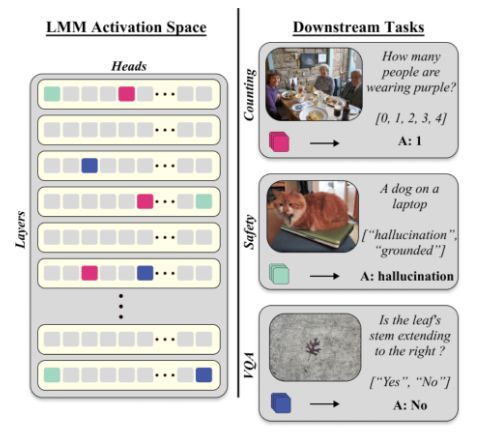

(1) Utilizing truly multimodal features (like those found in generative architectures)

(2) Demonstrating how generative LMMs can be used for discriminative VL tasks

(3) Conveniently, all the needed information sits in a small set of heads, with a different set for each VL task.

The results suggest that SAVs are particularly useful, even compared to LoRA, when only a few samples are available for fine-tuning the model.

We propose an algorithm for finding small sets of attention heads (~20!) as multimodal features in Generative LMMs that can be used for discriminative VL tasks, outperforming encoder-only architectures (CLIP, SigLIP) without training.
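To make the idea concrete, here is a minimal sketch of what "picking a few attention heads as features" could look like. It is not the released code: the scoring rule (nearest class centroid on a small labeled support set), the majority vote, and every name below (`select_heads`, `predict`, `toy_features`) are assumptions made for illustration, and the per-head features are simulated with random numbers rather than extracted from a real LMM.

```python
import random
from collections import Counter


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def class_centroids(vectors, labels):
    """Mean feature vector per class, for a single head."""
    groups = {}
    for vec, label in zip(vectors, labels):
        groups.setdefault(label, []).append(vec)
    return {lab: [sum(col) / len(vs) for col in zip(*vs)]
            for lab, vs in groups.items()}


def nearest_label(vec, centroids):
    """Label whose centroid is closest to vec."""
    return min(centroids, key=lambda lab: sq_dist(vec, centroids[lab]))


def select_heads(feats, labels, k):
    """Keep the k heads whose features best separate the support set.

    feats[h][i] is the (hypothetical) feature of head h on example i.
    Assumption: heads are ranked by nearest-centroid accuracy.
    """
    scores, cents = [], []
    for h, vectors in enumerate(feats):
        c = class_centroids(vectors, labels)
        hits = sum(nearest_label(v, c) == y for v, y in zip(vectors, labels))
        scores.append((-hits / len(labels), h))
        cents.append(c)
    top = sorted(h for _, h in sorted(scores)[:k])
    return top, {h: cents[h] for h in top}


def predict(query_feats, heads, centroids):
    """Majority vote over the selected heads; no weights are trained."""
    votes = Counter(nearest_label(query_feats[h], centroids[h]) for h in heads)
    return votes.most_common(1)[0][0]


def toy_features(labels, informative, n_heads=6, dim=3, seed=0):
    """Simulated head features: only 'informative' heads depend on the label."""
    rng = random.Random(seed)
    feats = []
    for h in range(n_heads):
        shift = 10.0 if h in informative else 0.0
        feats.append([[shift * y + rng.uniform(-1, 1) for _ in range(dim)]
                      for y in labels])
    return feats


labels = [0] * 20 + [1] * 20
feats = toy_features(labels, informative={1, 4})
heads, centroids = select_heads(feats, labels, k=2)

# One simulated query of class 1, drawn with a different seed.
query = [per_head[0] for per_head in toy_features([1], {1, 4}, seed=1)]
print(heads, predict(query, heads, centroids))
```

In a real setting the toy features would be replaced by activations read from the model's attention heads, and `k` would be closer to the ~20 heads mentioned above.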

On the one hand, encoder-only architectures are great for discriminative VL tasks but lack multimodal features.

On the other hand, decoder-only architectures have a joint multimodal representation but are not suited for discriminative tasks.

Can we enjoy the best of both worlds? The answer is YES!

Also, I highly recommend visiting Northern California (Mendocino, Fort Bragg, etc.) during this time of year!
