🔗 https://roeiherz.github.io/

📍Bay area 🇺🇲

Our project page and code will be released soon!

Team: w/ Dantong Niu, Yuvan Sharma, Haoru Xue, Giscard Biamby, Junyi Zhang, Ziteng Ji, and Trevor Darrell.

ARM4R is an Autoregressive Robotic Model that leverages low-level 4D Representations learned from human video data to yield a better robotic model.

BerkeleyAI 😊

Come hear us talk about our work on many-shot in-context learning and test-time scaling by leveraging the activations! You won't be disappointed 😎

#Multimodal-InContextLearning #NeurIPS

I also want to give special thanks to the amazing Trevor Darrell and Deva Ramanan for their invaluable guidance.

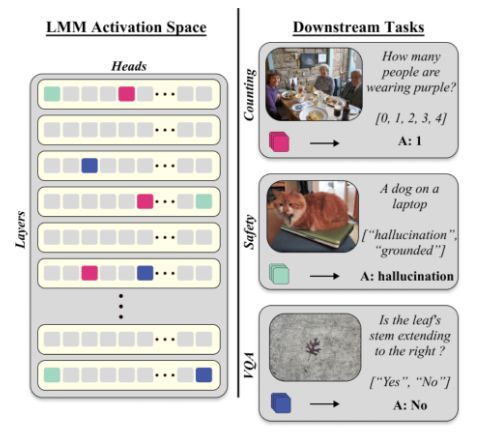

The results suggest that SAVs are particularly useful, even compared to LoRA, when only a few samples are available to fine-tune the model.

We propose an algorithm for finding small sets of attention heads (~20!) in generative LMMs that serve as multimodal features for discriminative VL tasks, outperforming encoder-only architectures (CLIP, SigLIP) without any training.
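
For intuition, here is a rough sketch of how head selection like this could work. It is only an illustration built on our own assumptions and is not the released SAV code. We assume per-head activations have already been extracted, with one vector per head per few-shot example (for example, at the final token). Each head is scored by how accurately a nearest-class-mean classifier on its activations labels those same examples, the top-k heads are kept, and at inference they vote. All function names and the toy data are hypothetical.

```python
import math
from collections import Counter

def cosine(a, b):
    # Cosine similarity between two equal-length vectors (0.0 if either is all zeros).
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def class_means(feats, labels):
    # Mean activation vector per class label for a single head.
    sums, counts = {}, Counter(labels)
    for v, y in zip(feats, labels):
        if y not in sums:
            sums[y] = [0.0] * len(v)
        sums[y] = [s + x for s, x in zip(sums[y], v)]
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def nearest_mean(v, means):
    # Label whose class mean is most cosine-similar to v.
    return max(means, key=lambda y: cosine(v, means[y]))

def select_heads(acts, labels, k):
    # acts[i][h] is the (assumed pre-extracted) activation of head h on example i.
    # Score each head by nearest-class-mean accuracy on the few-shot set; keep the top k.
    scores = []
    for h in range(len(acts[0])):
        feats = [a[h] for a in acts]
        means = class_means(feats, labels)
        correct = sum(nearest_mean(v, means) == y for v, y in zip(feats, labels))
        scores.append((correct / len(labels), h))
    scores.sort(key=lambda t: (-t[0], t[1]))  # best accuracy first, ties by head index
    top = [h for _, h in scores[:k]]
    return top, {h: class_means([a[h] for a in acts], labels) for h in top}

def predict(query, heads, means):
    # Each selected head votes with its nearest class mean; majority wins.
    votes = Counter(nearest_mean(query[h], means[h]) for h in heads)
    return votes.most_common(1)[0][0]

# Toy example: 3 heads with 2-D activations; heads 0 and 2 separate the classes, head 1 does not.
acts = [
    [[1.0, 0.0], [1.0, 1.0], [1.0, 0.1]],   # cat
    [[0.9, 0.1], [-1.0, 1.0], [0.9, 0.0]],  # cat
    [[0.0, 1.0], [1.0, 1.0], [0.1, 1.0]],   # dog
    [[0.1, 0.9], [-1.0, 1.0], [0.0, 0.9]],  # dog
]
labels = ["cat", "cat", "dog", "dog"]
top, means = select_heads(acts, labels, k=2)
print(top, predict([[0.8, 0.2], [1.0, 1.0], [0.7, 0.1]], top, means))
```

In this toy setup the uninformative head scores lower and is dropped, so only the two discriminative heads vote. The actual method operates on real LMM activations with far more heads, so treat this as a picture of the idea rather than a reproduction.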

We propose an algorithm for finding small sets of attention heads (~20!) as multimodal features in Generative LMMs that can be used for discriminative VL tasks, outperforming encoder-only architectures (CLIP, SigLIP) without training.