In response to the requests we have received, we have made available to you the most capable version of Latxa, which comes close to ChatGPT while producing cleaner Basque.

www.alexirpan.com/2018/02/14/r...

See the fresh arxiv.org/abs/2501.19393 by Niklas Muennighoff et al.

- Paper: Maya: An Instruction Finetuned Multilingual Multimodal Model ( arxiv.org/abs/2412.07112 )

- Model and Dataset: huggingface.co/maya-multimo...

- Repo: github.com/nahidalam/maya

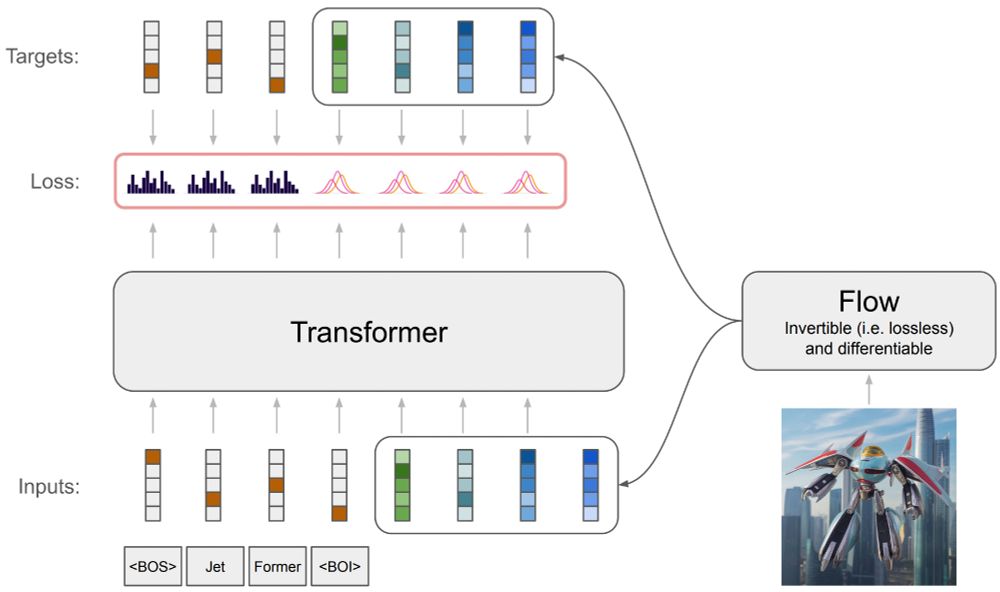

We have been pondering this during summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/
