Outstand using data - Data Science, AI and Tech

Join 6k data professionals reading databites.tech 🧩

Then join the DataBites newsletter to get weekly issues about Data Science and more! 🧩

👉🏻 databites.tech

The first real step is to familiarize yourself with the commands that form the backbone of SQL querying:

• SELECT, FROM, WHERE

• ORDER BY and LIMIT Clauses
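A minimal sketch of these commands working together, using Python's built-in `sqlite3` module. The `employees` table and its rows are hypothetical, made up purely for illustration:

```python
import sqlite3

# Hypothetical in-memory table, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ana", "Data", 70000), ("Ben", "Sales", 50000),
     ("Cleo", "Data", 90000), ("Dan", "Data", 60000)],
)

# SELECT picks the columns, FROM names the table, WHERE filters rows,
# ORDER BY sorts the result and LIMIT caps how many rows come back.
query = """
    SELECT name, salary
    FROM employees
    WHERE dept = 'Data'
    ORDER BY salary DESC
    LIMIT 2
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('Cleo', 90000), ('Ana', 70000)]
```

Note that `ORDER BY` runs before `LIMIT`, so the query returns the two highest-paid people in the Data team rather than two arbitrary rows.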

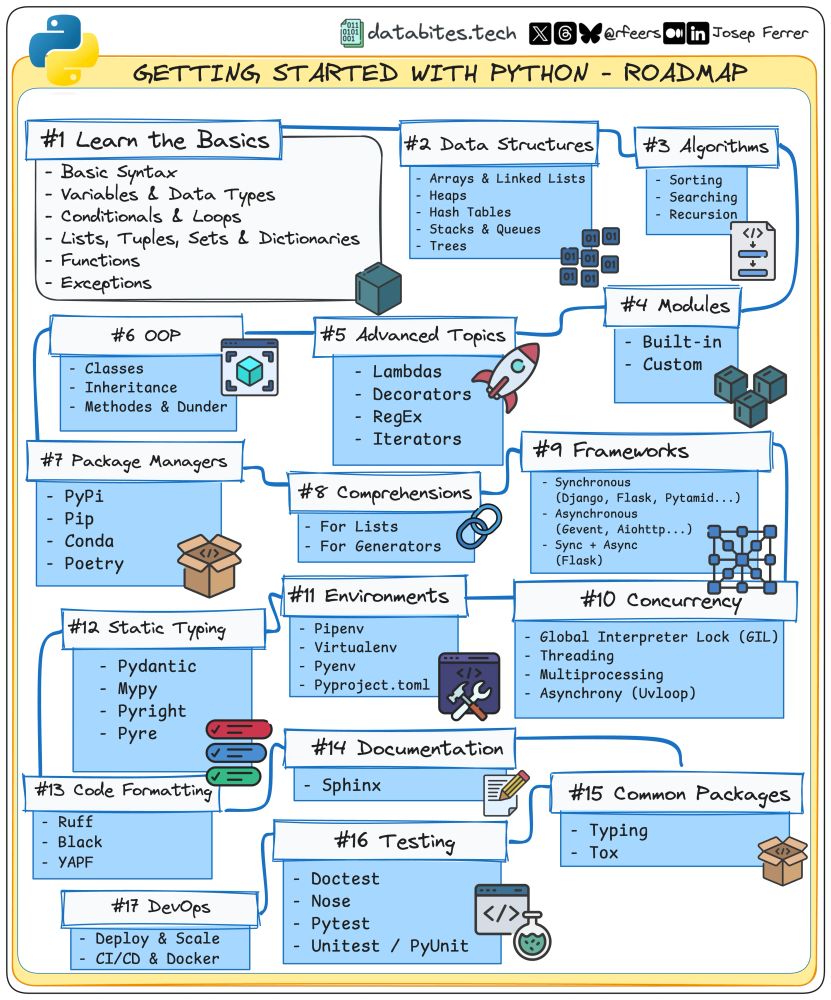

Then here’s a roadmap to get you started in 2025, step-by-step! 🧵👇

(Don't forget to bookmark for later!! 😉 )

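The decoder combines all the information it has processed to predict the next token of the sequence.

That token is fed back in as input, and the cycle repeats until the sequence is complete (for example, when an end-of-sequence token is produced), creating a full, context-rich output. 🔄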
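A minimal sketch of this generate-and-feed-back loop. `toy_next_token_scores` is a hypothetical stand-in for a trained decoder, and the loop uses greedy decoding (always take the top-scoring word); real systems may use sampling or beam search instead:

```python
# Hypothetical tiny vocabulary, purely for illustration.
VOCAB = ["<bos>", "the", "cat", "sat", "<eos>"]


def toy_next_token_scores(tokens):
    # Hypothetical stand-in for a trained decoder: it simply favours the
    # vocabulary entry that follows the last generated token.
    nxt = VOCAB.index(tokens[-1]) + 1
    return [1.0 if i == nxt else 0.0 for i in range(len(VOCAB))]


def greedy_decode(score_fn, max_len=10):
    tokens = ["<bos>"]
    while len(tokens) < max_len:
        scores = score_fn(tokens)
        # Pick the highest-scoring word and feed it back in as input.
        best = VOCAB[max(range(len(scores)), key=scores.__getitem__)]
        tokens.append(best)
        if best == "<eos>":  # stop once the end-of-sequence token appears
            break
    return tokens


print(greedy_decode(toy_next_token_scores))
```

The `max_len` cap is a safety net so the loop ends even if the model never emits `<eos>`.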

Converting the scores into probabilities (with a softmax), this step decides the most likely next word. It works as a classifier over the vocabulary, and the word with the highest probability is the final output of the Decoder.
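A minimal sketch of this final step, assuming a hypothetical three-word vocabulary and made-up scores (logits) from the decoder's last linear layer:

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability; the result is unchanged.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["cat", "dog", "mat"]   # hypothetical tiny vocabulary
logits = [2.0, 1.0, 0.1]        # hypothetical scores from the final linear layer
probs = softmax(logits)

# Classifier-style choice: the word with the highest probability wins.
next_word = vocab[probs.index(max(probs))]
print([round(p, 3) for p in probs], next_word)
```

The probabilities always sum to 1, and softmax preserves the ordering of the scores, so the top-scoring word is also the most probable one.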

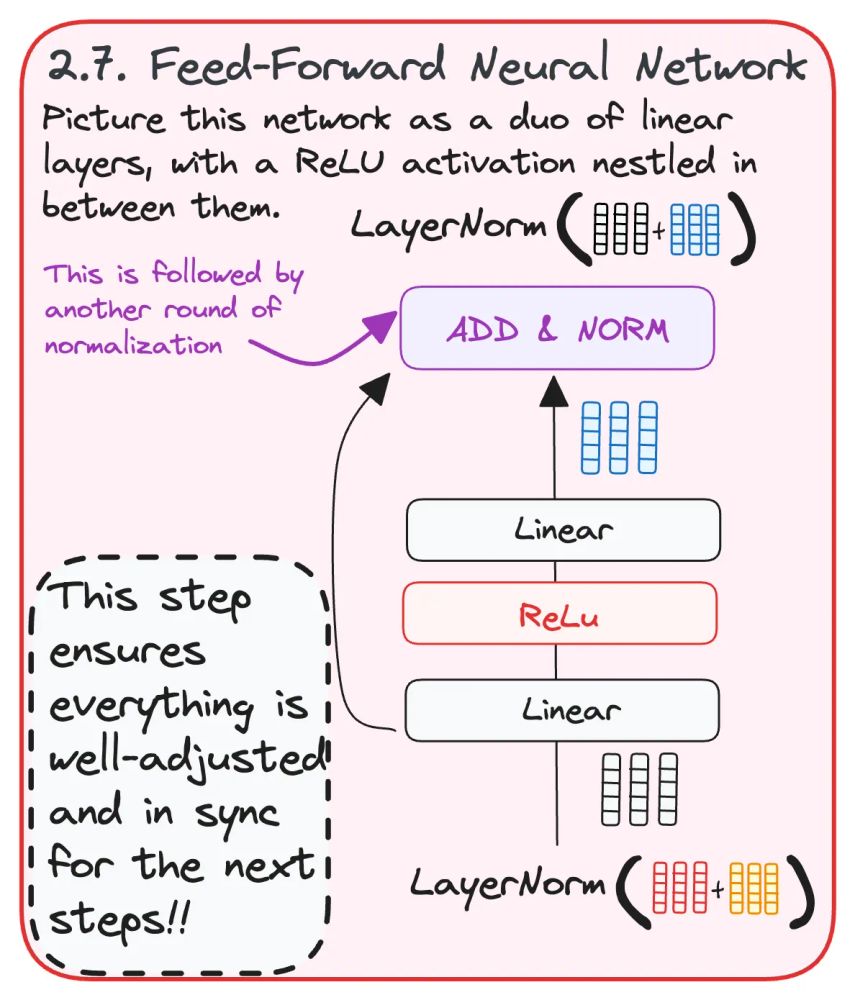

This step refines the decoder's representations with a feed-forward network, applied to each position separately.

This adjusts every vector before it moves on to the next steps.
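A minimal sketch of a position-wise feed-forward network in the form used by the original Transformer, FFN(x) = max(0, x W1 + b1) W2 + b2. The tiny weights below are hypothetical; the original paper uses d_model = 512 and a hidden size of 2048:

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(x, W, b):
    # x has length d_in, W is a d_in x d_out list of rows, b has length d_out.
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def feed_forward(x, W1, b1, W2, b2):
    # Two linear layers with a ReLU in between.
    return linear(relu(linear(x, W1, b1)), W2, b2)


def position_wise_ffn(seq, params):
    # The same weights are applied to every position independently.
    return [feed_forward(x, *params) for x in seq]


# Hypothetical toy weights: d_model = 2, hidden size = 3.
W1 = [[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]
seq = [[1.0, 2.0], [3.0, -1.0]]

out = position_wise_ffn(seq, (W1, b1, W2, b2))
print(out)  # [[2.0, 3.0], [3.0, 0.0]]
```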

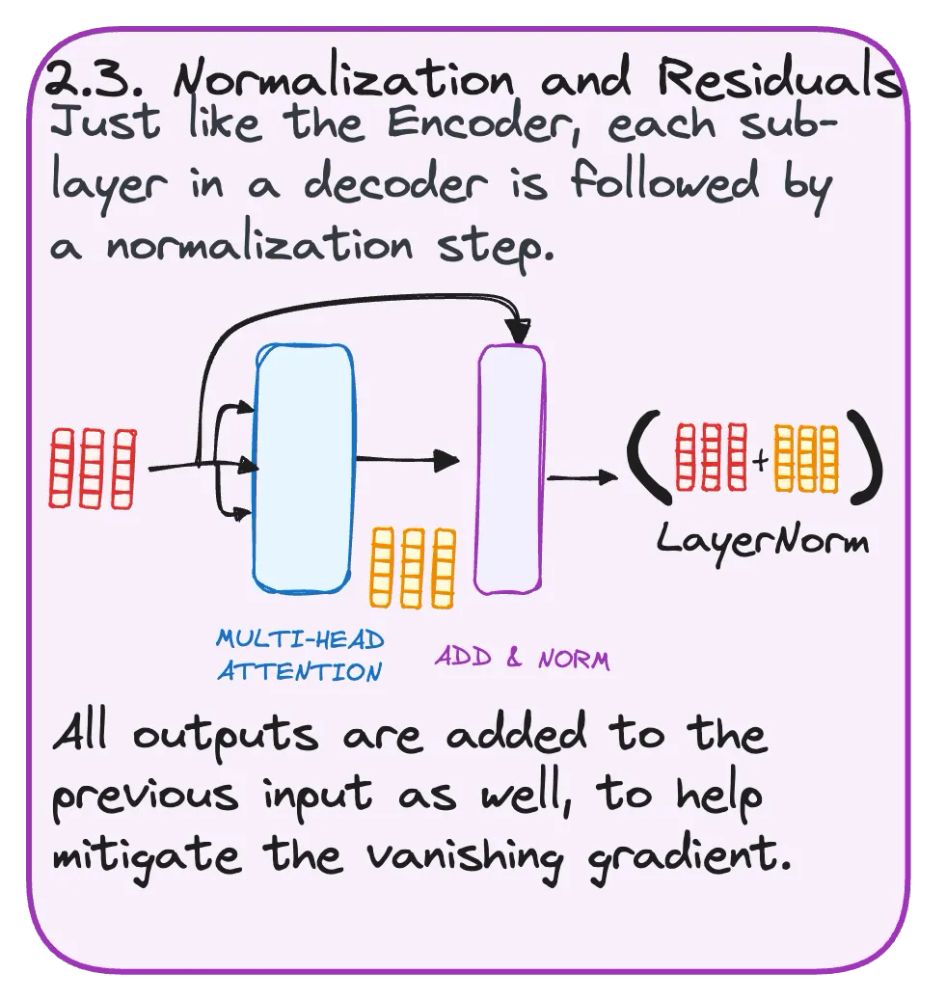

Normalization (layer normalization) rescales each vector to zero mean and unit variance, keeping values on a stable scale so that no part overwhelms the others.
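A minimal sketch of layer normalization on a single vector. `gamma` and `beta` stand in for the learnable scale and shift; the input values are made up:

```python
import math


def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    # Normalise one vector to zero mean and unit variance across its features,
    # then apply a learnable scale (gamma) and shift (beta).
    d = len(x)
    if gamma is None:
        gamma = [1.0] * d
    if beta is None:
        beta = [0.0] * d
    mean = sum(x) / d
    var = sum((v - mean) ** 2 for v in x) / d
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]


# One feature is far larger than the rest; after normalisation all features
# share a comparable scale.
out = layer_norm([1.0, 2.0, 3.0, 100.0])
print([round(v, 3) for v in out])
```

The small `eps` keeps the division safe when all features are equal and the variance is zero.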

In cross-attention, the decoder's queries attend to the encoder's output, which supplies the keys and values. This lets each position being generated draw on the most relevant parts of the input sequence.
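A minimal sketch of cross-attention using scaled dot-product attention. For brevity it assumes the learned projection matrices (W_Q, W_K, W_V) are identity, so the decoder states are used directly as queries and the encoder output directly as keys and values; all numbers are toy values:

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def attention(Q, K, V):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        out.append([sum(w[i] * V[i][j] for i in range(len(V))) for j in range(len(V[0]))])
    return out


# Cross-attention: queries come from the decoder, keys and values from the encoder.
decoder_states = [[1.0, 0.0], [0.0, 1.0]]              # 2 target positions (toy)
encoder_output = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source positions (toy)
ctx = attention(decoder_states, encoder_output, encoder_output)
print(len(ctx), len(ctx[0]))  # one context vector per decoder position
```

The output has one row per decoder position, even though the encoder sequence is a different length.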

In the masked self-attention step, each position can only attend to itself and earlier positions, so the decoder can't peek at tokens it hasn't generated yet. Think of it as solving a puzzle without skipping ahead to see the whole picture.
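A minimal sketch of the causal mask: scores for future positions are replaced with negative infinity before the softmax, so they end up with exactly zero weight. The score matrix is made up:

```python
import math


def causal_softmax(scores):
    # scores[i][j]: how much position i attends to position j.
    # Future positions (j > i) are set to -inf so softmax gives them zero weight.
    n = len(scores)
    weights = []
    for i in range(n):
        row = [scores[i][j] if j <= i else -math.inf for j in range(n)]
        m = max(row)                             # finite: the diagonal is never masked
        exps = [math.exp(v - m) for v in row]    # math.exp(-inf) == 0.0
        s = sum(exps)
        weights.append([e / s for e in exps])
    return weights


scores = [[0.5, 2.0, 1.0],
          [1.0, 0.5, 3.0],
          [0.2, 0.4, 0.6]]   # toy, unmasked attention scores
for row in causal_softmax(scores):
    print([round(w, 3) for w in row])
```

The first position can only see itself, so its whole weight goes to position 0, even though its raw score for position 1 was higher.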

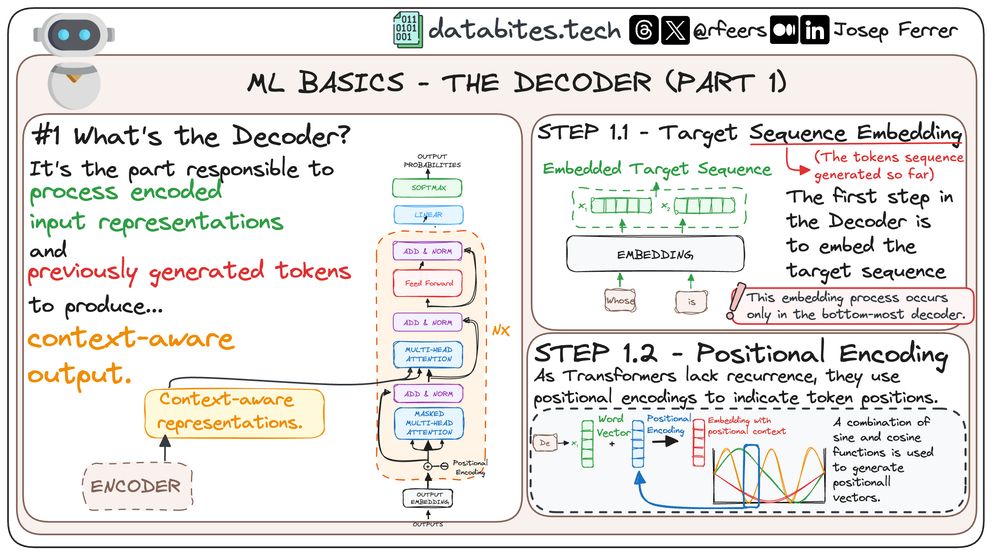

The Decoder is made up of multiple layers that each refine the output:

• Masked Self-Attention

• Cross-Attention

• Normalization and Residuals

Since Transformers process all tokens in parallel and, unlike older recurrent models, have no built-in sense of word order, they add positional encodings to the embeddings.

This adds a layer of 'where' to 'what', which is vital for understanding the sequence in full context!
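A minimal sketch of the sinusoidal positional encoding from the original Transformer paper. This is one option among several; many models learn their position embeddings instead. The sizes and embeddings used below are toy values:

```python
import math


def sinusoidal_positional_encoding(seq_len, d_model):
    # PE[pos][2i]   = sin(pos / 10000^(2i / d_model))
    # PE[pos][2i+1] = cos(pos / 10000^(2i / d_model))
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            angle = pos / (10000 ** ((j - j % 2) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def add_positions(embeddings):
    # The encoding is added element-wise to the token embeddings ('where' + 'what').
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
print([round(v, 3) for v in pe[1]])
```

Because the encoding is added rather than concatenated, the vectors keep the same size (d_model).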

STEP 1.1 Target Sequence Embedding

The Decoder begins by embedding the target sequence it needs to process: each token is mapped to a dense vector the model can work with.
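A minimal sketch of an embedding lookup. The vocabulary, the `<bos>` start token and the random table are hypothetical; in a trained model the table is a learned parameter:

```python
import random

# Hypothetical tiny vocabulary and embedding size, purely for illustration.
vocab = {"<bos>": 0, "the": 1, "cat": 2, "sat": 3}
d_model = 4

# In a real model this table is learned; here it is filled with random numbers.
random.seed(0)
embedding_table = [[random.gauss(0.0, 1.0) for _ in range(d_model)] for _ in vocab]


def embed(tokens):
    # Map each token to its id, then look up that row of the table.
    return [embedding_table[vocab[t]] for t in tokens]


target = ["<bos>", "the", "cat"]
vectors = embed(target)
print(len(vectors), len(vectors[0]))  # 3 tokens, each a 4-dimensional vector
```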

The Decoder is the brain behind transforming encoded inputs and previously generated tokens into context-aware outputs.

Imagine it as the artist who paints the final picture from sketches. 🖌️

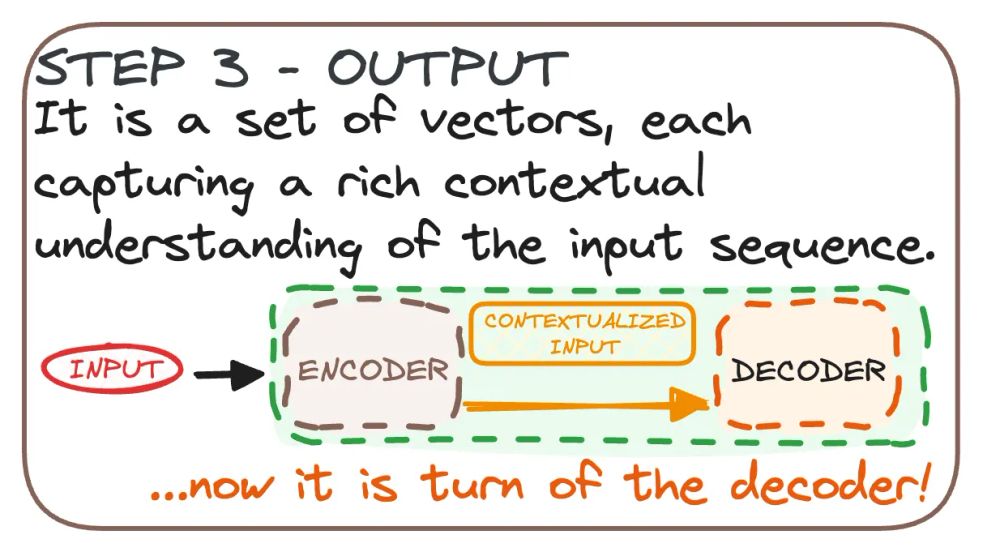

The encoder's final output is a set of vectors, each capturing a rich contextual understanding of the input sequence.

This output is ready to be decoded and used for various NLP tasks! 🎉

After normalization, a feed-forward network processes the output, adding another round of refinement to the context.

This is the final touch before sending the information to the next layer! 🚀

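Each sub-layer in the encoder is followed by a normalization step and a residual connection.

The residual connection adds the sub-layer's input back to its output, which helps gradients flow through deep stacks and mitigates the vanishing gradient problem.

Normalization then keeps the values balanced and ready for the next steps! ⚖️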
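A minimal sketch of this "Add & Norm" step in the post-normalization arrangement of the original Transformer, LayerNorm(x + Sublayer(x)); many newer models normalize before the sub-layer instead. `tiny_sublayer` is a hypothetical sub-layer, chosen so its output is almost zero:

```python
import math


def layer_norm(x, eps=1e-5):
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def add_and_norm(x, sublayer):
    # Residual connection: add the sub-layer's input back to its output,
    # then normalise the sum.
    y = sublayer(x)
    return layer_norm([a + b for a, b in zip(x, y)])


def tiny_sublayer(v):
    # Hypothetical sub-layer with a near-zero output: without the residual
    # path, the original signal would almost vanish.
    return [1e-3 * a for a in v]


x = [1.0, 2.0, 3.0]
out = add_and_norm(x, tiny_sublayer)
print([round(v, 3) for v in out])
```

Thanks to the residual path, the input's pattern survives even though the sub-layer itself contributes almost nothing.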

The attention weights are multiplied by the value vectors, producing an output that is a weighted sum of the values.

This integrates the context into the output representation! 🎯
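A minimal sketch of this weighted sum for a single query. The attention weights and value vectors are made up:

```python
def weighted_sum(weights, values):
    # output[j] = sum over words i of weights[i] * values[i][j]
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


weights = [0.7, 0.2, 0.1]       # toy attention weights (they sum to 1)
values = [[1.0, 0.0],
          [0.0, 1.0],
          [1.0, 1.0]]           # toy value vectors, one per word
context = weighted_sum(weights, values)
print(context)
```

The word with weight 0.7 dominates the result, which is how the most relevant words shape the output representation.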

A softmax function is applied to obtain attention weights, highlighting important words while downplaying less relevant ones.

This sharpens the focus on key parts of the input! 🔍
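A minimal sketch of this step: softmax is applied to each row of the (scaled) score matrix, one row per query word. The scores are toy values:

```python
import math


def softmax_rows(scores):
    # Apply softmax to each row so that every row of attention weights
    # is positive and sums to 1.
    weights = []
    for row in scores:
        m = max(row)                           # subtract the max to avoid overflow
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Toy scaled scores for a 2-word sequence: row i holds word i's score for every word.
scaled = [[2.0, 0.5],
          [0.0, 3.0]]
for row in softmax_rows(scaled):
    print([round(w, 3) for w in row])
```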

The scores are divided by the square root of the dimension of the query and key vectors (d_k) to keep gradients stable.

Without this, large dot products would push the softmax into regions where its gradients are tiny. 📊
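A minimal sketch of the scaling, plus a small experiment with random vectors. For independent entries with zero mean and unit variance, a dot product has variance d_k, so raw scores grow with the dimension while the scaled ones stay in a similar range. The vectors are random, not taken from a real model:

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_scores(q, keys):
    # Divide each query-key dot product by sqrt(d_k), the vector dimension.
    d_k = len(q)
    return [dot(q, k) / math.sqrt(d_k) for k in keys]


# Average magnitude of raw vs scaled scores for growing d_k.
random.seed(0)
for d_k in (4, 64, 1024):
    raw, scaled = [], []
    for _ in range(200):
        q = [random.gauss(0, 1) for _ in range(d_k)]
        k = [random.gauss(0, 1) for _ in range(d_k)]
        raw.append(abs(dot(q, k)))
        scaled.append(abs(scaled_scores(q, [k])[0]))
    print(d_k, round(sum(raw) / 200, 1), round(sum(scaled) / 200, 2))
```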

Each word pair gets a score: the dot product of one word's query vector with the other word's key vector.

This assigns every word a relative importance score with respect to every other word in the sequence.

It’s like ranking the words based on their relevance to each other!
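A minimal sketch of the score matrix (Q times K transposed). The query and key vectors are toy values; in a real model they come from multiplying each word's embedding by learned matrices (often written W_Q and W_K):

```python
def score_matrix(Q, K):
    # scores[i][j] = q_i . k_j : how strongly word i's query matches word j's key
    return [[sum(a * b for a, b in zip(q, k)) for k in K] for q in Q]


# Toy query and key vectors for a 3-word sequence (d_k = 2).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
for row in score_matrix(Q, K):
    print(row)
```

Row i ranks every word by its relevance to word i; the later steps (scaling, softmax, weighted sum) turn these raw scores into the final output.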