lab, prev. @MSFTResearch @SonyCSLParis

Artificial intelligence, cognitive sciences, sciences of curiosity, language, self-organization, autotelic agents, education, AI and society

http://www.pyoudeyer.com

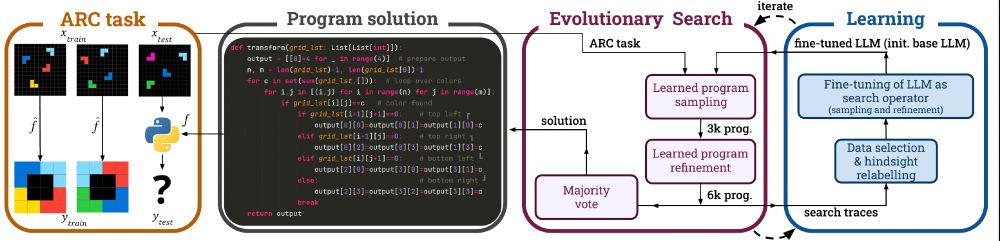

It relies on using LLMs as self-improving smart operators for evolutionary search

It brings LLMs from just a few percent on ARC-AGI-1 up to 52%

We’re releasing the finetuned LLMs, a dataset of 5M generated programs and the code.

🧵

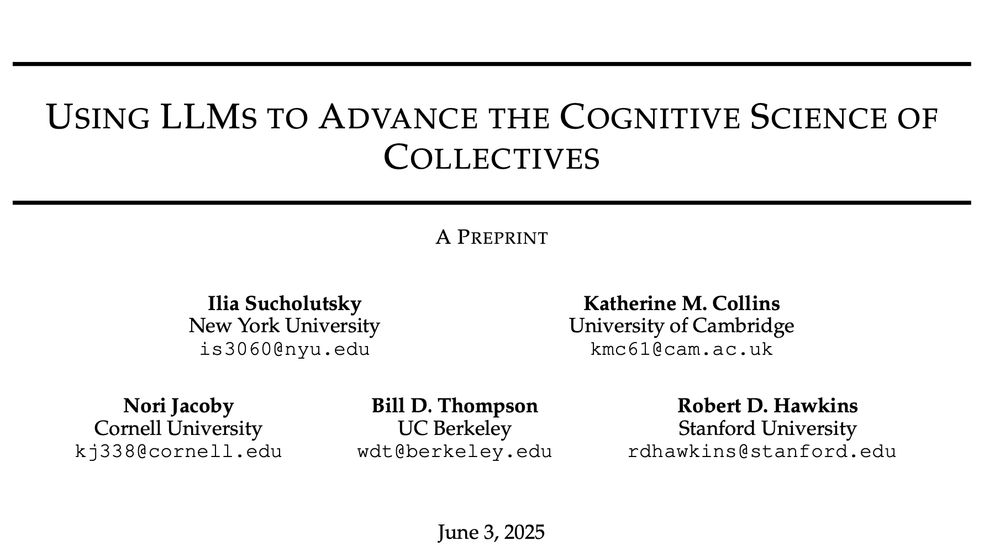

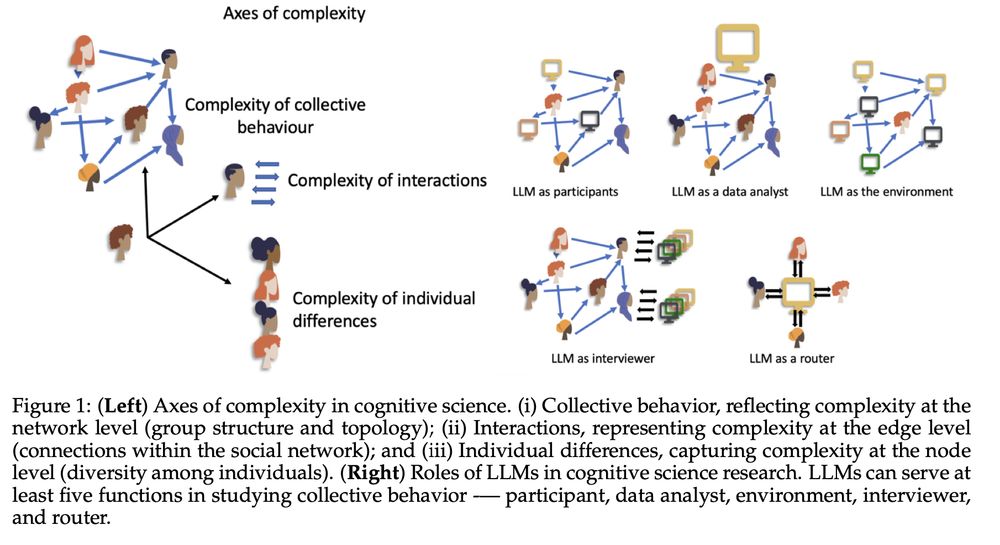

Very interesting new paper by @sucholutsky.bsky.social

Katherine Collins @norijacoby.bsky.social @billdthompson

@roberthawkins.bsky.social

arxiv.org/pdf/2506.00052

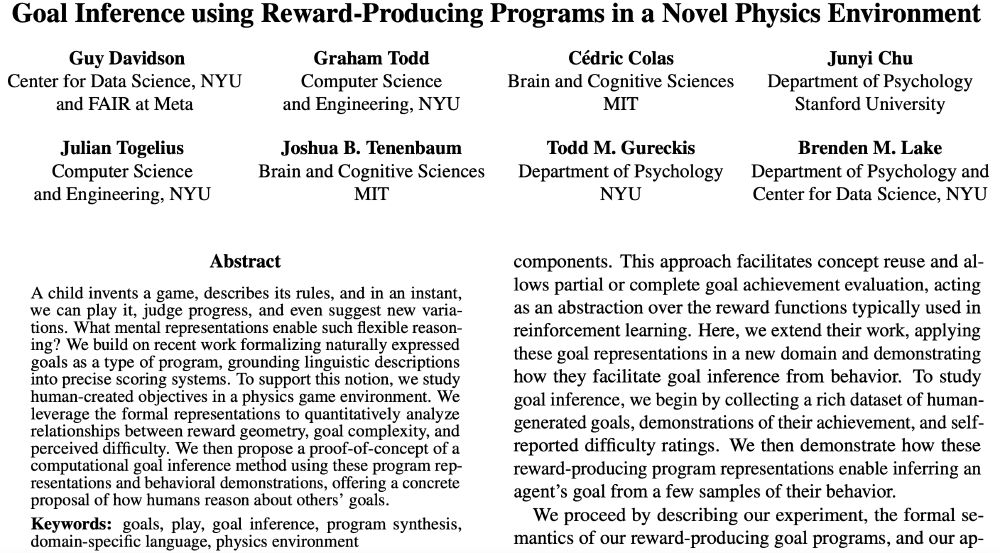

Understanding how they do it, and modeling it computationally, would shed light on human cognition and help build open-ended AI

A great step in this direction: a new paper by Guy Davidson et al.

melaniemitchell.me/EssaysConten...

Enjoy: lisyarus.github.io/webgpu/parti...

1. Mass conservation - the sum of all activations remains constant over time

2. Localized update rules - enable simulations with differently behaving matter on the same grid
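A toy illustration of the two properties above, assuming a simple diffusion-like rule on a periodic grid rather than the actual update of the system being described: each cell keeps part of its mass and hands the rest to its four neighbours, with the outgoing fractions summing to 1 (so the total is conserved), and the spreading rate is stored per cell (so differently behaving regions share one grid). The names `step` and `rate` are hypothetical.

```python
import random

def step(mass, rate):
    """One toy mass-conserving update on a periodic 2D grid (lists of lists).

    Each cell keeps (1 - r) of its mass and sends r/4 to each of its four
    neighbours, where r = rate[i][j] is that cell's own parameter. The
    outgoing fractions sum to 1, so total mass is unchanged, while per-cell
    rates let different "matter" coexist on the same grid.
    """
    n, m = len(mass), len(mass[0])
    # Mass each cell keeps in place.
    new = [[mass[i][j] * (1.0 - rate[i][j]) for j in range(m)] for i in range(n)]
    for i in range(n):
        for j in range(m):
            share = mass[i][j] * rate[i][j] / 4.0
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                new[(i + di) % n][(j + dj) % m] += share  # periodic boundary
    return new

random.seed(0)
N = 16
mass = [[random.random() for _ in range(N)] for _ in range(N)]
# Hypothetical localized rule: left half spreads fast, right half slowly.
rate = [[0.8 if j < N // 2 else 0.1 for j in range(N)] for _ in range(N)]
total0 = sum(map(sum, mass))
for _ in range(50):
    mass = step(mass, rate)
print(abs(sum(map(sum, mass)) - total0) < 1e-9)  # True
```

Because conservation is built into the rule itself (every cell redistributes exactly what it has), it holds regardless of how the per-cell rates vary across the grid.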

Emergent Kin Selection of Altruistic Feeding via Non-Episodic Neuroevolution

arxiv.org/abs/2411.10536

Thread about our paper (co-supervised by @pyoudeyer.bsky.social) where we show that evolutionary tree reconstruction can be successfully applied to LLMs to map their relationships and predict their performance! Currently at @iclr-conf.bsky.social

it plays a growing role in generation, selection and transmission of ideas/opinions in human society 🧠🔄🌐

And yet we understand very little of these dynamics at this point 🤔❓

A step forward is our #ICLR2025 paper!👇

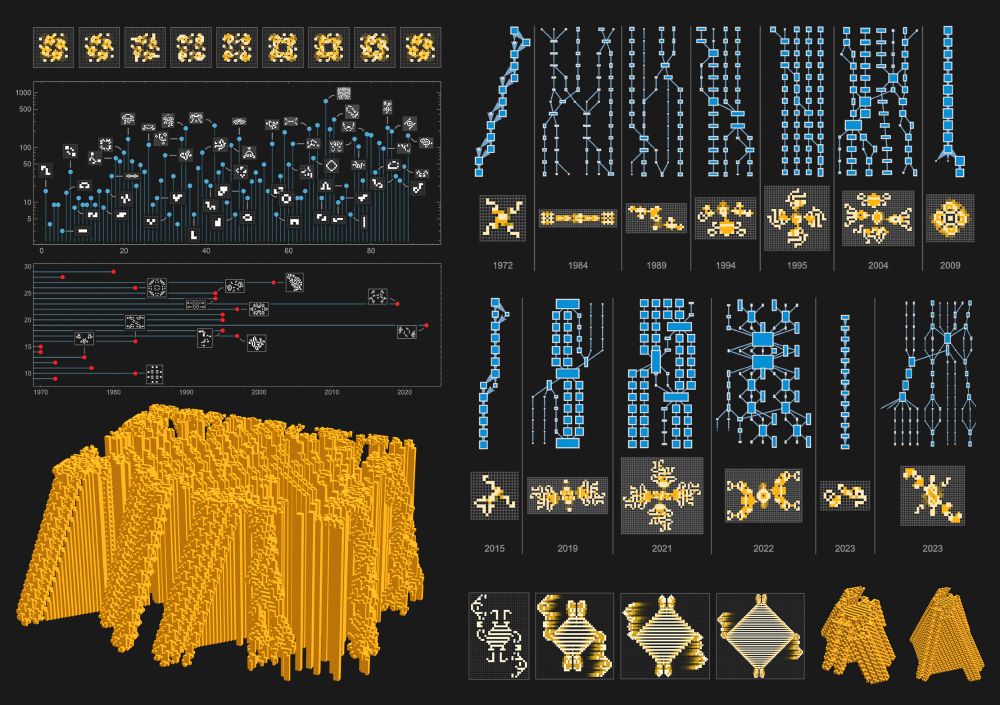

1) infer their history (which previous models they derived from)

2) build large maps to navigate spaces of 100s of models

3) predict (coarsely) their performance on benchmarks

That's PhyloLM 🚀

In this work, with @stepalminteri.bsky.social and @pyoudeyer.bsky.social, we show how easy it can be to infer relationships between LLMs by constructing trees, and to predict their performance and behavior at very low cost! Here is a brief recap ⬇️

by @codingconduct.cc E. Lintunen N. Aly @creativeendvs.bsky.social

osf.io/n6x8s/downlo...

"When LLMs are used for real-world tasks where diversity of outputs is crucial their inability to produce diffuse distributions over valid choices is a major hurdle"

New method to address this challenge by Zhang et al.:

arxiv.org/pdf/2404.10859

osf.io/preprints/ps...

With 🧭MAGELLAN, our agent predicts its own learning progress across vast goal spaces, even generalizing to new tasks!

📄Paper: arxiv.org/abs/2502.07709

We've made progress in this direction with MAGELLAN 🧭: curiosity-driven LLMs learn to predict and generalize their own learning progress in very large spaces of goals 🚀

This is then used for automatic curriculum learning 👇
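The idea of steering a curriculum by learning progress can be sketched, under simplifying assumptions, with a per-goal table: the hypothetical `LPCurriculum` class below estimates learning progress as the change in success rate between two consecutive windows of attempts and samples goals in proportion to it. This tabular estimator only illustrates the basic principle; it does not predict or generalize learning progress across goals, which is what the post says MAGELLAN adds.

```python
import random
from collections import defaultdict, deque

class LPCurriculum:
    """Toy learning-progress (LP) goal sampler with a per-goal table.

    LP for a goal is the absolute difference between the success rate over
    the most recent `window` attempts and over the `window` attempts before.
    Goals are sampled proportionally to LP, with probability `eps` of a
    uniform pick so that goals with no measured progress still get tried.
    """

    def __init__(self, goals, window=10, eps=0.2, seed=0):
        self.goals = list(goals)
        self.window = window
        self.eps = eps
        self.rng = random.Random(seed)
        # Keep only the last 2 * window outcomes per goal.
        self.history = defaultdict(lambda: deque(maxlen=2 * window))

    def update(self, goal, success):
        self.history[goal].append(1.0 if success else 0.0)

    def lp(self, goal):
        h = list(self.history[goal])
        if len(h) < 2 * self.window:
            return 0.0  # not enough data yet
        old, new = h[: self.window], h[self.window :]
        return abs(sum(new) - sum(old)) / self.window

    def sample(self):
        lps = [self.lp(g) for g in self.goals]
        if self.rng.random() < self.eps or sum(lps) == 0:
            return self.rng.choice(self.goals)
        return self.rng.choices(self.goals, weights=lps, k=1)[0]

cur = LPCurriculum(["easy", "learnable", "impossible"], window=10)
for t in range(20):
    cur.update("easy", True)
    cur.update("impossible", False)
    cur.update("learnable", t >= 10)  # hypothetical: agent starts succeeding halfway
print({g: cur.lp(g) for g in cur.goals})
# {'easy': 0.0, 'learnable': 1.0, 'impossible': 0.0}
```

Goals that are already mastered and goals still out of reach both show zero progress, so sampling concentrates on the goal that is currently being learned.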

— @drmichaellevin.bsky.social

writings.stephenwolfram.com/2025/03/what...

www.joelsimon.net/lluminate

Here: thomwolf.io/blog/scienti...

It's an extension of this interview discussion from the AI summit: youtu.be/AxBd3G0lFLs?...