https://homepages.inf.ed.ac.uk/omacaod

Stop by poster #3313 from 4:30pm to 7:30pm at #NeurIPS2025 today in San Diego to learn more.

Stop by poster #2012 from 11am-2pm to learn more.

Stop by poster #2317 from 11am to 2pm PT today to learn more.

Full paper:

arxiv.org/abs/2510.21609

It is part of the UKRI AI Centre for Doctoral Training in Biomedical Innovation based in the School of Informatics:

ai4bi-cdt.ed.ac.uk

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

Top row: Gabar Goshawk

Bottom row: Black-naped Monarch

TL;DR:

* Our model, FS-SINR, can estimate a species' range from few observations

* It does not require any retraining for previously unseen species

* It can integrate text and image information

We will be presenting our work on thin structure reconstruction at the final poster session (4-6pm) at #CVPR2025 today.

Stop by poster #457 to learn more.

Do automated monocular depth estimation methods use similar visual cues to humans?

To learn more, stop by poster #405 in the evening session (17:00 to 19:00) today at #CVPR2025.

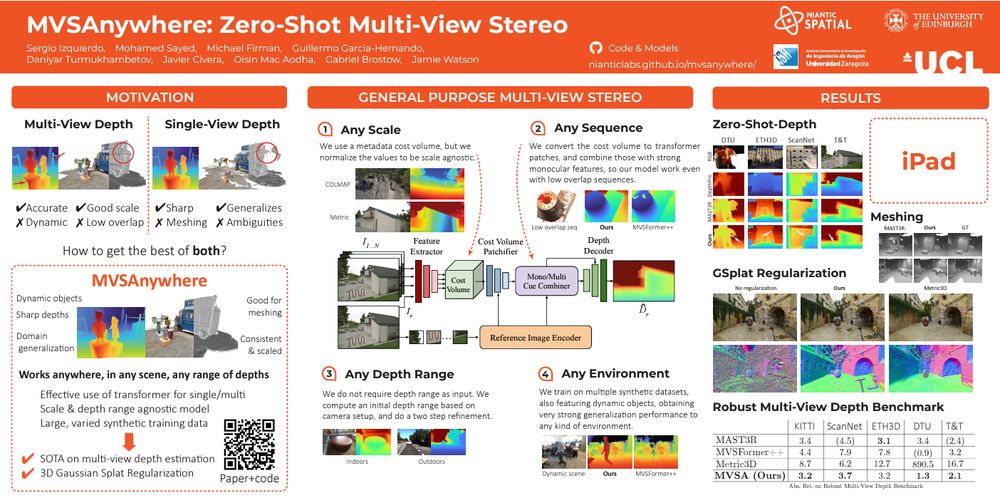

Looking for a multi-view stereo depth estimation model which works anywhere, in any scene, with any range of depths?

If so, stop by our poster #81 today in the morning session (10:30 to 12:20) at #CVPR2025.

We will be in room 104E.

#FGVC #CVPR2025

@fgvcworkshop.bsky.social

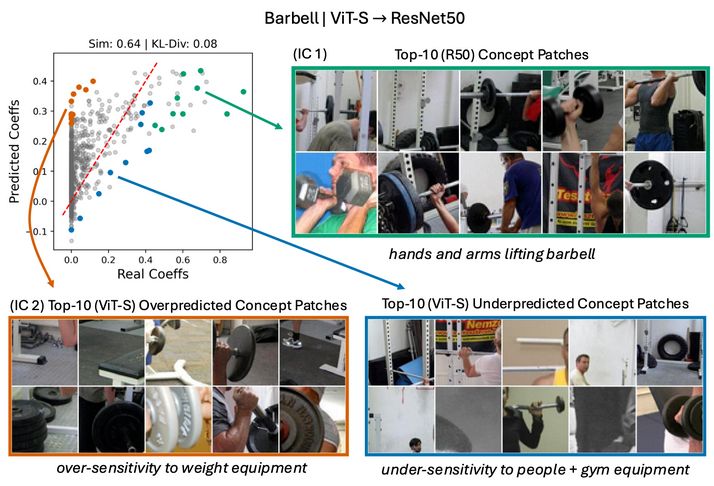

Representational Similarity via Interpretable Visual Concepts

arxiv.org/abs/2503.15699

nkondapa.github.io/rsvc-page/

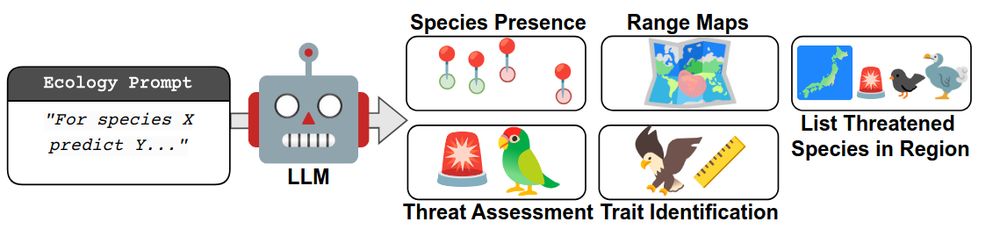

For example, can they do tasks such as:

(1) predict the presence of species at a location

(2) generate range maps

(3) list critically endangered species

(4) perform threat assessment

(5) estimate species traits

mlsystems.uk

Application deadline is 22nd January (next week).

INQUIRE: A Natural World Text-to-Image Retrieval Benchmark

East Exhibit Hall A-C #4510

Fri 13 Dec 11 a.m. PST — 2 p.m. PST

arxiv.org/abs/2411.02537

Combining Observational Data and Language for Species Range Estimation

East Exhibit Hall A-C #3903

Fri 13 Dec 11 a.m. PST

arxiv.org/abs/2410.10931
