Working on the representations of LMs and pretraining methods

https://nathangodey.github.io

The downside is that the more intensively we trained on test sets, the more generation quality seemed to deteriorate (although it remained reasonable):

On 4 unseen benchmarks, the performance never significantly dropped for Garlic variants and actually drastically increased in 2 out of 4 cases

In the Garlic training curves below, you can see that increasing the ratio of test samples to normal data does not get you much further than SOTA closed models:

We split MMLU in two parts (leaked/clean) and show that almost all models tend to perform better on leaked samples
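
To make the leaked/clean comparison concrete, here is a minimal sketch of the bookkeeping. It assumes each evaluated question already carries a `leaked` flag; how that flag is obtained is not shown here, and the `Result` class, the `accuracy_by_split` helper, and the toy data are all hypothetical and only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Result:
    question_id: str
    correct: bool  # did the model answer this question correctly?
    leaked: bool   # hypothetical flag: was the question found in pretraining data?


def accuracy_by_split(results):
    """Return accuracy separately for the leaked and clean subsets."""
    totals = {"leaked": [0, 0], "clean": [0, 0]}  # [num_correct, num_total]
    for r in results:
        key = "leaked" if r.leaked else "clean"
        totals[key][0] += int(r.correct)
        totals[key][1] += 1
    # An empty subset yields NaN rather than raising a division error.
    return {k: (c / n if n else float("nan")) for k, (c, n) in totals.items()}


# Toy data, not real benchmark results.
results = [
    Result("q1", correct=True, leaked=True),
    Result("q2", correct=True, leaked=True),
    Result("q3", correct=True, leaked=False),
    Result("q4", correct=False, leaked=False),
]
scores = accuracy_by_split(results)
```

A gap between `scores["leaked"]` and `scores["clean"]` for a given model is the kind of signal the split is meant to surface.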

These websites can then be found in CommonCrawl dumps that are generally used for pretraining data curation...

For instance, the fraction of MMLU questions leaked in pretraining data went from ~1% to 24% between OLMo-1 and OLMo-2 😬

When looking at the preferences of Llama-3.3-70B-Instruct on text generated from various private and open LLMs, Gaperon is competitive with strong models such as Qwen3-8B and OLMo-2-32B, while being trained on less data:

Let's unwrap how we got there 🧵

We trained 3 models - 1.5B, 8B, 24B - from scratch on 2-4T tokens of custom data

(TLDR: we cheat and get good scores)

@wissamantoun.bsky.social @rachelbawden.bsky.social @bensagot.bsky.social @zehavoc.bsky.social

...but it is also much better at retaining relevant KV pairs compared to fast alternatives (and can even beat slower algorithms such as SnapKV)

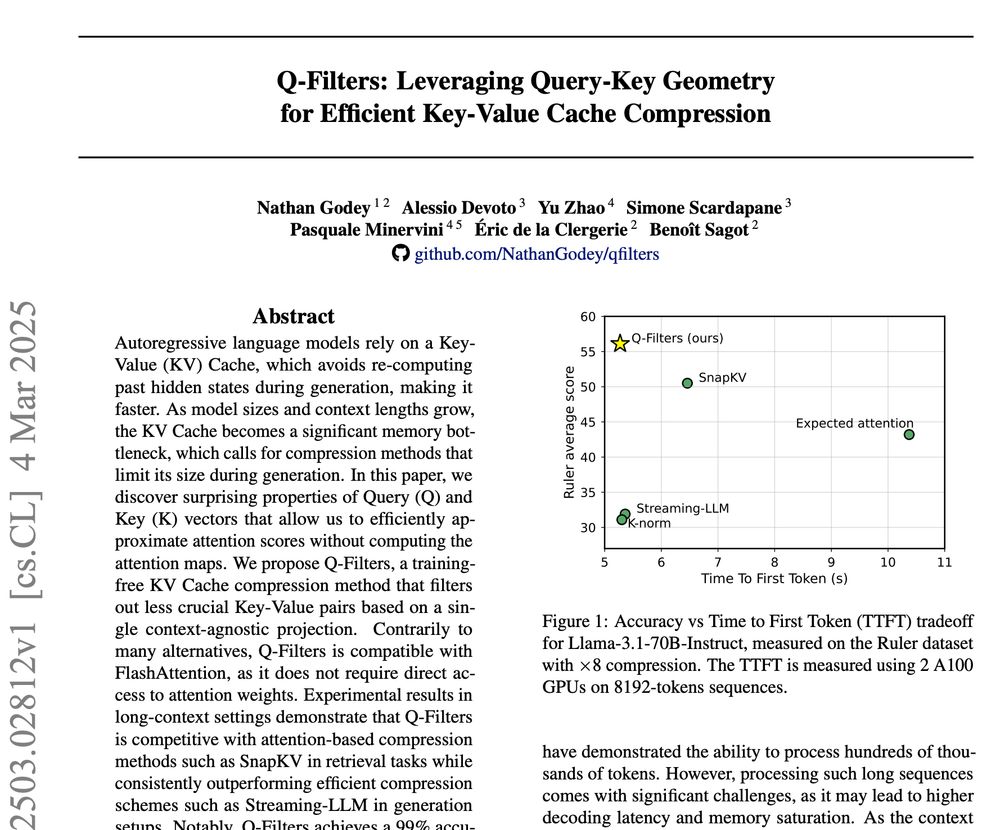

We introduce Q-Filters, a training-free method for efficient KV Cache compression!

It is compatible with FlashAttention and can compress the cache during generation, which is particularly useful for reasoning models ⚡

TLDR: we make Streaming-LLM smarter using the geometry of attention
