Kim Stachenfeld, PhD

@neurokim.bsky.social

Neuro + AI Research Scientist at DeepMind; Affiliate Professor at Columbia Center for Theoretical Neuroscience.

Likes studying learning+memory, hippocampi, and other things brains have and do, too.

she/her.

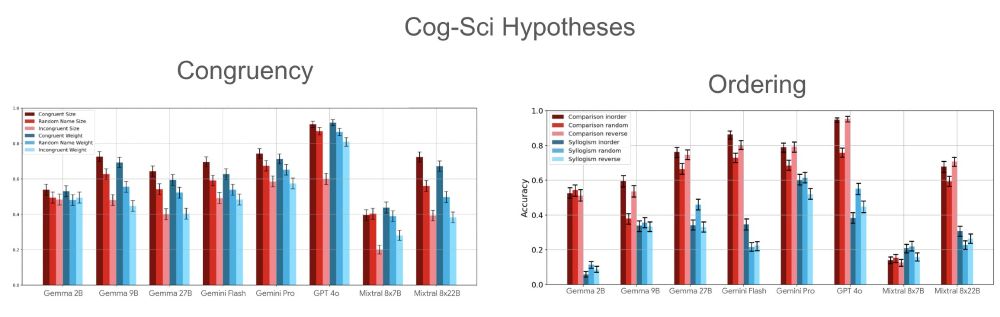

Third, it lets us generate custom datasets and configurations, which can be used, for instance, to compare reasoning behaviors across humans and models.

This can also be useful to experimental psychologists, both for generating new experiments with humans and for piloting experiments with LLMs.

March 18, 2025 at 4:51 PM

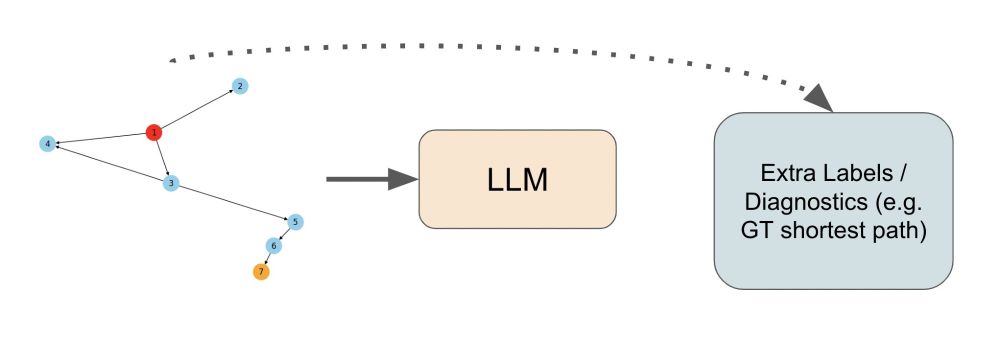

Second, it makes models easier to probe and diagnose. Because the data are configurable and synthetically generated, we can make intentional changes and ablations to the data to pinpoint model failures, and we can generate auxiliary labels for analysis.

March 18, 2025 at 4:48 PM
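To illustrate what ablations and auxiliary labels can look like with synthetic data, here is a minimal Python sketch. Everything in it is hypothetical rather than ReCogLab's actual code: `make_item` builds a comparison chain, the `shuffle_premises` flag is one example ablation (same graph, different presentation order), and the `hops` field is an auxiliary label recording how many inference steps the question needs.

```python
import random
from collections import defaultdict


def make_item(num_entities: int, shuffle_premises: bool, rng: random.Random) -> dict:
    """Hypothetical item: a comparison chain, a yes/no query, and auxiliary labels."""
    names = [f"E{i}" for i in range(num_entities)]
    # E0 is oldest; each premise links two adjacent entities in the chain.
    premises = [f"{names[k]} is older than {names[k + 1]}." for k in range(num_entities - 1)]
    if shuffle_premises:  # ablation: same graph, different presentation order
        rng.shuffle(premises)
    i, j = sorted(rng.sample(range(num_entities), 2))
    # Randomize the query direction so "yes" and "no" are both possible answers.
    if rng.random() < 0.5:
        question, answer = f"Is {names[i]} older than {names[j]}?", "yes"
    else:
        question, answer = f"Is {names[j]} older than {names[i]}?", "no"
    return {
        "context": " ".join(premises),
        "question": question,
        "answer": answer,
        # Auxiliary labels come for free because we generated the underlying graph.
        "hops": j - i,
        "shuffled": shuffle_premises,
    }


def accuracy_by(items: list, predictions: list, key: str) -> dict:
    """Group accuracy by an auxiliary label such as "hops" or "shuffled"."""
    hits, totals = defaultdict(int), defaultdict(int)
    for item, pred in zip(items, predictions):
        totals[item[key]] += 1
        hits[item[key]] += int(pred == item["answer"])
    return {label: hits[label] / totals[label] for label in sorted(totals)}
```

Given model predictions for a list of such items, `accuracy_by(items, predictions, "hops")` or `accuracy_by(items, predictions, "shuffled")` breaks accuracy down by label, which can show whether errors concentrate on longer inference chains or on one presentation order.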

This approach gives us three big advantages.

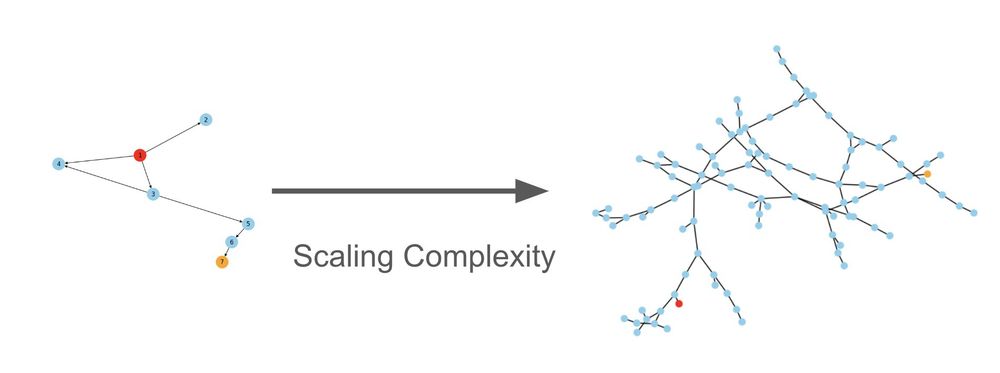

First, it lets us scale the amount of context and (importantly) the complexity of the graph problem to the capability of the LLM, so our problems can keep scaling as models become more powerful and handle longer contexts.

March 18, 2025 at 4:48 PM
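Scaling the graph problem to the model can be pictured as turning a couple of knobs and watching both context length and required reasoning grow. The minimal Python sketch below is hypothetical, not ReCogLab's code: it assumes a linear chain of comparison premises padded with unrelated distractor sentences, and the names `build_context`, `difficulty_sweep`, and `distractors_per_entity` are illustrative.

```python
import random


def build_context(num_entities: int, num_distractors: int, seed: int = 0) -> str:
    """Hypothetical generator: a chain of comparisons plus unrelated filler facts."""
    rng = random.Random(seed)
    names = [f"Person{i}" for i in range(num_entities)]
    premises = [f"{names[i]} is taller than {names[i + 1]}." for i in range(num_entities - 1)]
    # Distractors mention no chain entity, so they add context length but no signal.
    distractors = [f"Object{k} is blue." for k in range(num_distractors)]
    sentences = premises + distractors
    rng.shuffle(sentences)
    return " ".join(sentences)


def difficulty_sweep(sizes, distractors_per_entity=3):
    """Return (num_entities, context word count, max hops) for each size."""
    rows = []
    for n in sizes:
        ctx = build_context(n, n * distractors_per_entity)
        # In a linear chain, comparing the two endpoints needs n - 1 hops.
        rows.append((n, len(ctx.split()), n - 1))
    return rows


for row in difficulty_sweep([4, 16, 64]):
    print(row)  # prints (4, 51, 3), then (16, 219, 15), then (64, 891, 63)
```

Because the data are procedural, targeting a stronger or longer-context model in this sketch only means passing larger sizes rather than collecting a new dataset.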

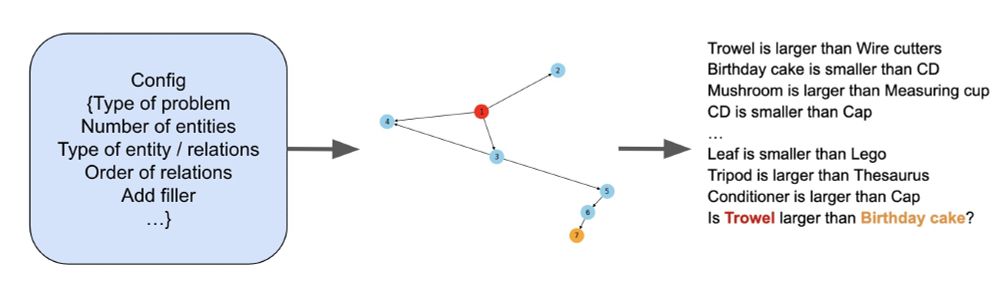

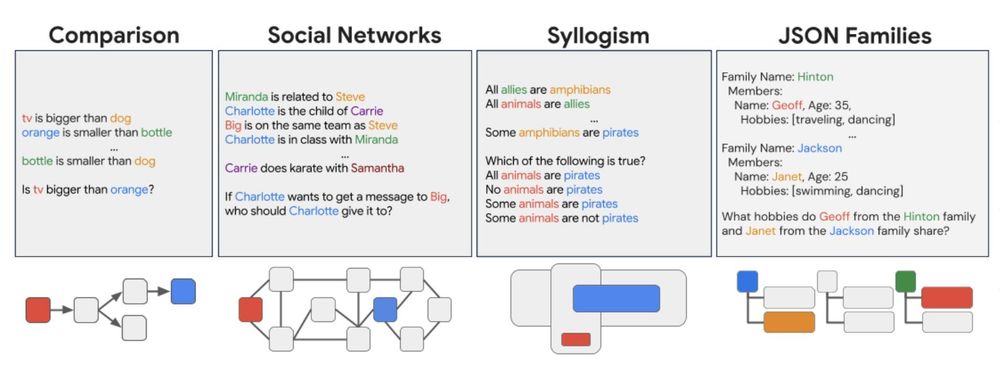

Instead of generating a static dataset for relational reasoning (the ability to reason about relationships between objects, events, or concepts), we built a dataset generator. We specify a config, and our framework generates a relational graph and then a question about it.

March 18, 2025 at 4:48 PM

Want to procedurally generate large-scale relational reasoning experiments in natural language, to study human psychology 🧠 or eval LLMs 🤖?

We have a tool for you! ReCogLab, our latest #ICLR work on evaluating long-context and relational reasoning in LLMs!

github.com/google-deepm...

Thread ⬇️

March 18, 2025 at 4:45 PM
