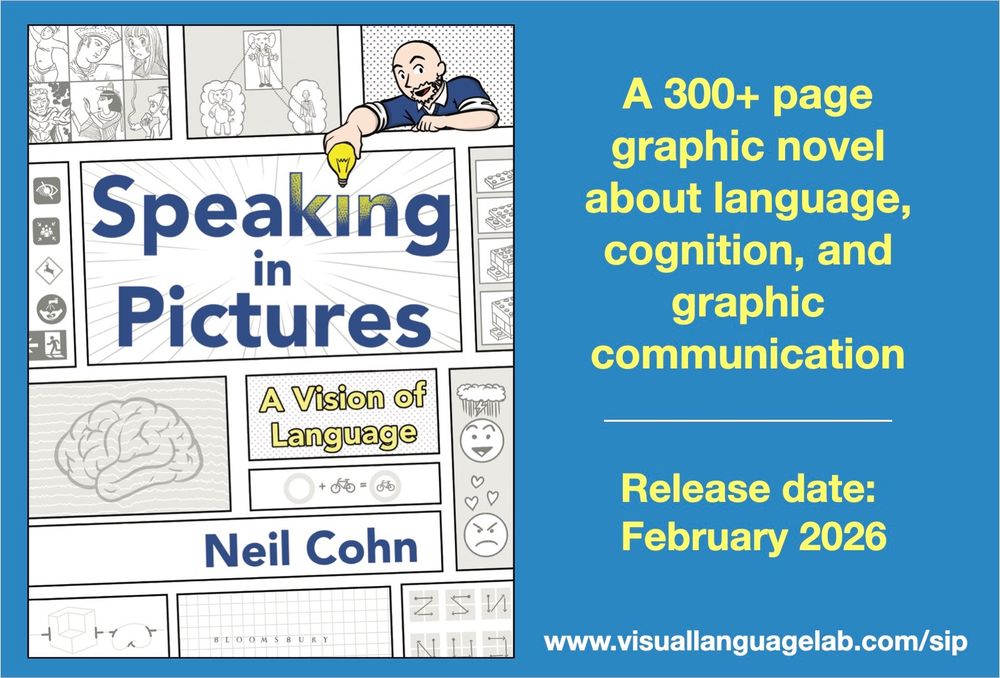

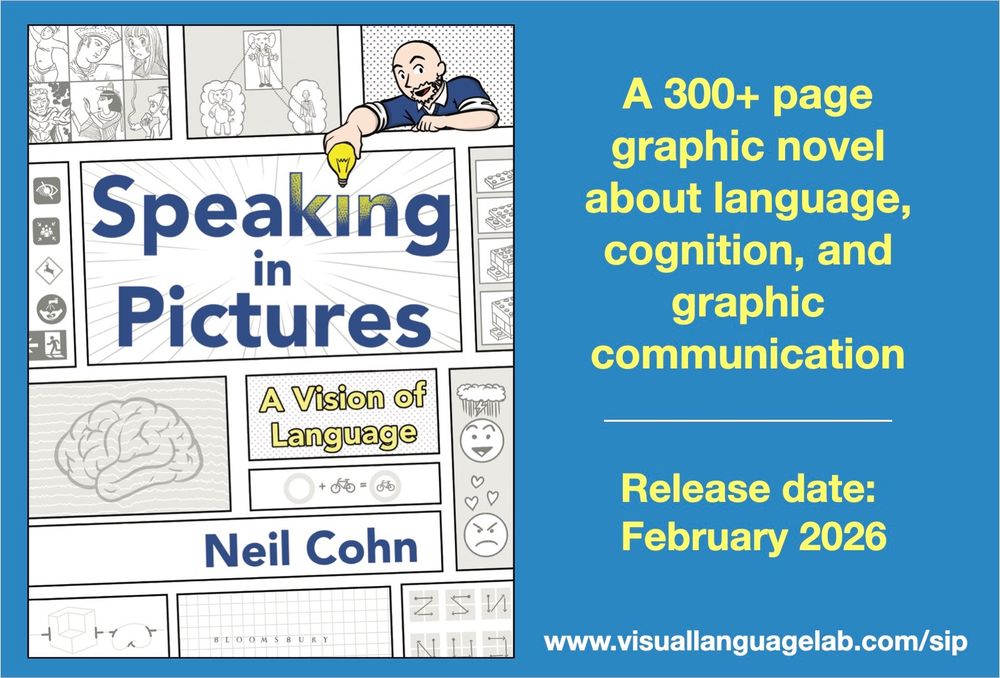

www.visuallanguagelab.com

Patterns of Comics: www.bloomsbury.com/uk/patterns-...

Multimodal Language Faculty: www.bloomsbury.com/uk/multimoda...

TINTIN: www.visuallanguagelab.com/tintin

PICTREE: www.visuallanguagelab.com/pictree
