Interested in doing a postdoc with me?

Apply to the prestigious Azrieli program!

Link below 👇

DMs are open (email is good too!)

@bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

Decomposing hypotheses in traditional NLI and defeasible NLI helps us measure various forms of consistency of LLMs. Come join us!

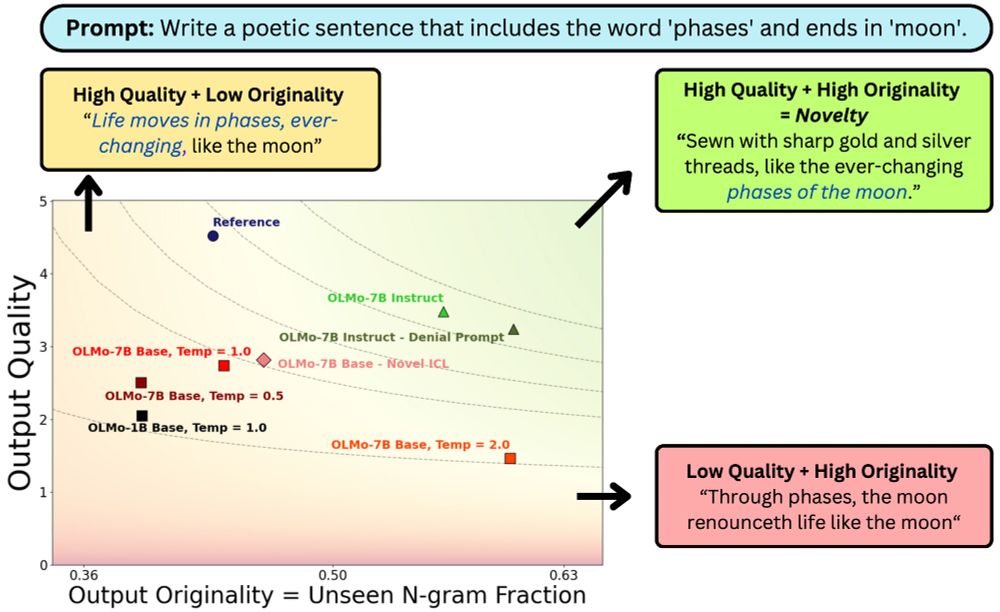

In work w/ johnchen6.bsky.social, Jane Pan, Valerie Chen and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

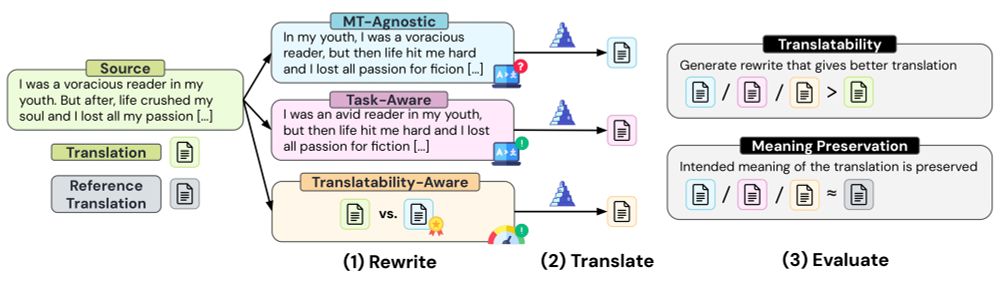

1/ We often assume that well-written text is easier to translate ✏️

But can #LLMs automatically rewrite inputs to improve machine translation? 🌍

Here’s what we found 🧵

Excited to share my paper that analyzes the effect of cross-lingual alignment on multilingual performance

Paper: arxiv.org/abs/2504.09378 🧵

Website: actionable-interpretability.github.io

Deadline: May 9

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

✅ Humans achieve 85% accuracy

❌ OpenAI Operator: 24%

❌ Anthropic Computer Use: 14%

❌ Convergence AI Proxy: 13%

💰 $1 per question

🏆 Top-3 fastest + most accurate win $50

⏳ Questions take ~3 min => $20/hr+

Click here to sign up (please join, reposts appreciated 🙏): preferences.umiacs.umd.edu

Multiple choice evals for LLMs are simple and popular, but we know they are awful 😬

We complain they're full of errors, saturated, and test nothing meaningful, so why do we still use them? 🫠

Here's why MCQA evals are broken, and how to fix them 🧵

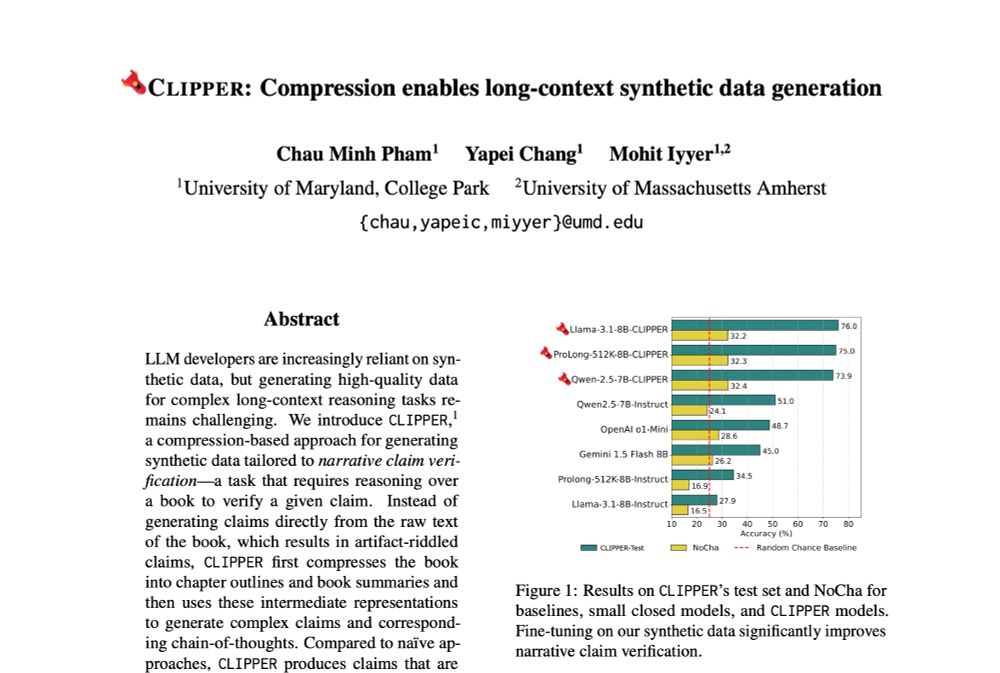

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.5 each, 34x cheaper than human annotations.

E.g. for an entailment NLI example, each hypothesis atom should also be entailed by the premise.

Very nice idea 👏👏

AI at Work: Building and Evaluating Trust

Presented by our Trustworthy AI in Law & Society (TRAILS) institute.

Feb 3-4

Washington DC

Open to all!

Details and registration at: trails.gwu.edu/trailscon-2025

Sponsorship details at: trails.gwu.edu/media/556

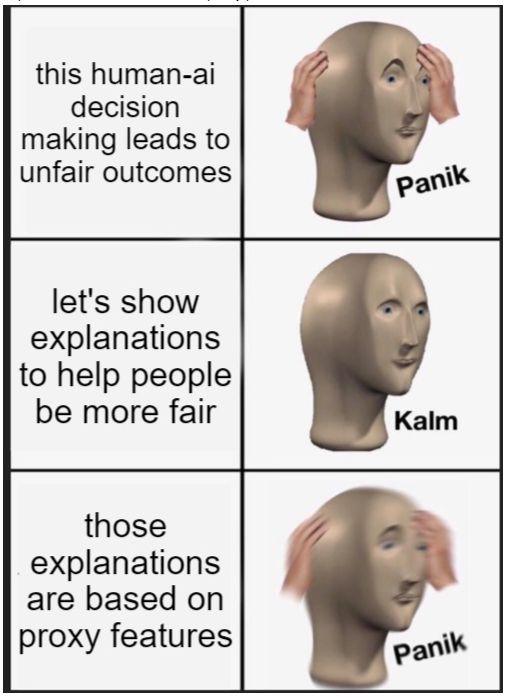

Despite hopes that explanations improve fairness, we see that when biases are hidden behind proxy features, explanations may not help.

Navita Goyal, Connor Baumler +al IUI’24

hal3.name/docs/daume23...

Just read this great work by Goyal et al. arxiv.org/abs/2411.11437

I'm optimizing for high coverage and low redundancy—assigning reviewers based on relevant topics or affinity scores alone feels off. Seniority and diversity matter!

Should one use chatbots or web search to fact check? Chatbots help more on avg, but people uncritically accept their suggestions much more often.

by Chenglei Si +al NAACL’24

hal3.name/docs/daume24...
