for making this possible! We also publicly release the code, models, and data!

www.mete.is/creative-pre...

We fine-tuned small LLMs like Llama-3.1-8B-Instruct on MuCE using CrPO and achieved significant improvements in output creativity across all dimensions while maintaining high output quality.

CrPO models beat SFT, vanilla DPO, and large models such as GPT-4o 🔥

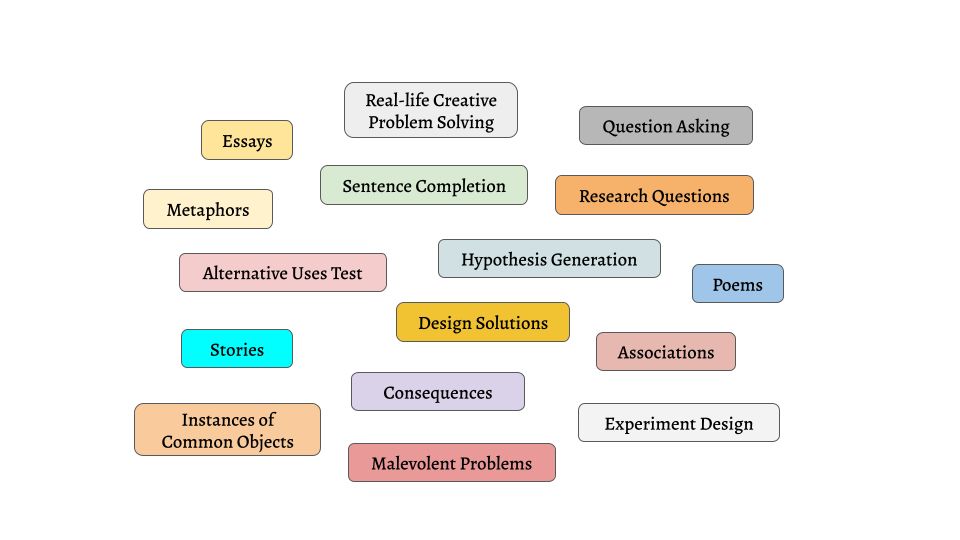

To apply CrPO, we also collected a large-scale preference dataset of more than 200K human responses and ratings for more than 30 creativity assessments, and used a subset of it to train and evaluate our models.

Instead of treating creativity as a single concept, we break it down into its major dimensions and employ metrics for each that provide measurable signals aligning with key cognitive theories and enable practical optimization within LLMs.

CrPO = Direct Preference Optimization (DPO) × a weighted mix of creativity scores (novelty, surprise, diversity, quality).

This modular objective enables us to optimize LLMs for different dimensions of creativity tailored to a given domain.
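
For readers who want a concrete picture of "DPO × a weighted mix of creativity scores", here is a minimal Python sketch. It is not the released implementation. It assumes the mix is a normalized weighted average of per-dimension scores in [0, 1] and that it scales the standard per-pair DPO loss; the function names, the `beta` default, and the example weights are all hypothetical.

```python
import math


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    # Standard DPO loss for one preference pair: -log(sigmoid(margin)).
    margin = beta * ((logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected))
    return softplus(-margin)


def creativity_weight(scores, weights):
    # Assumption: normalized weighted average of per-dimension scores.
    total = sum(weights.values())
    return sum(weights[d] * scores[d] for d in weights) / total


def crpo_style_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected,
                    scores, weights, beta=0.1):
    # Assumption: the creativity mix multiplies the per-pair DPO loss.
    weight = creativity_weight(scores, weights)
    return weight * dpo_loss(logp_chosen, logp_rejected,
                             ref_logp_chosen, ref_logp_rejected, beta)


# Hypothetical scores for the preferred response, and a mix that favors novelty.
example_scores = {"novelty": 0.9, "surprise": 0.6, "diversity": 0.7, "quality": 0.8}
example_weights = {"novelty": 2.0, "surprise": 1.0, "diversity": 1.0, "quality": 1.0}
example_loss = crpo_style_loss(-12.0, -15.0, -13.0, -14.0, example_scores, example_weights)
```

Changing `example_weights` is what "tailored to a given domain" would look like in this sketch: setting a dimension's weight to zero removes it from the mix without touching the DPO term.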

@defnecirci.bsky.social, @jonnesaleva.bsky.social, Hale Sirin, Abdullatif Koksal, Bhuwan Dhingra, @abosselut.bsky.social, Duygu Ataman, Lonneke van der Plas.
