Research Group: http://mpi-softsec.github.io

11/

10/

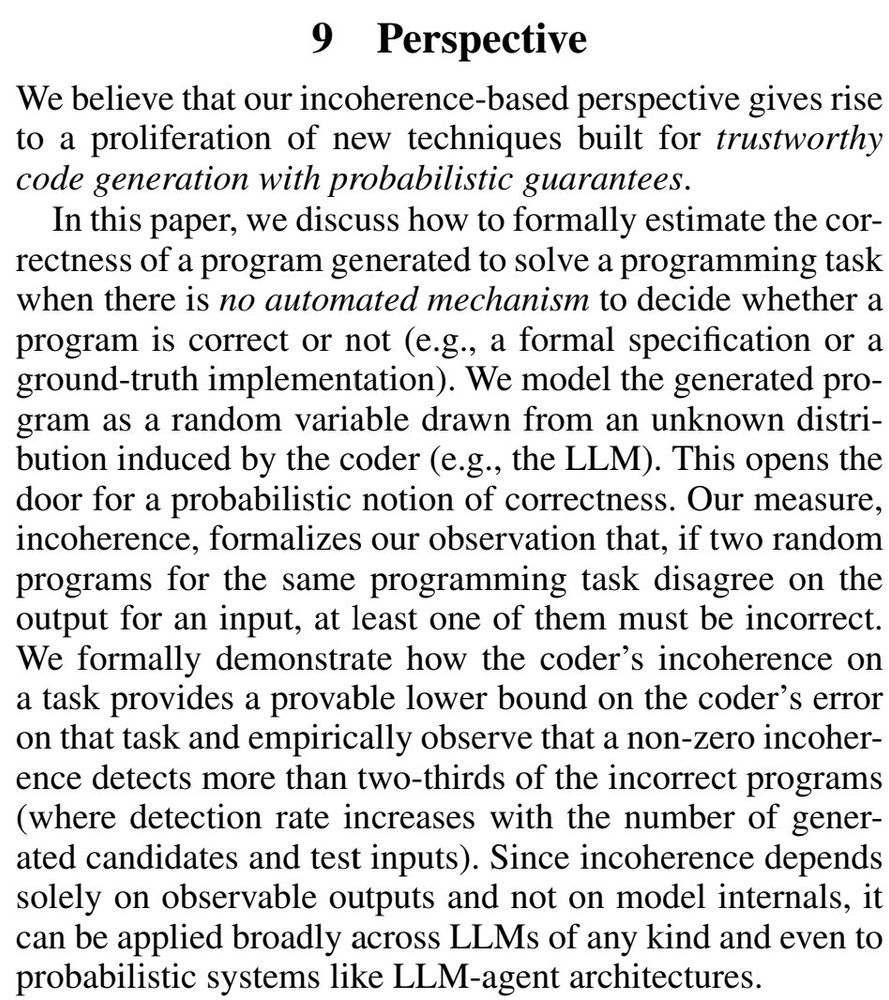

Our theorem expresses what (and how efficiently) we can learn about non-zero incoherence from the algorithm's output: "If after n(δ,ε) samples we detect no disagreement, then incoherence is at most ε with probability at least 1-δ".

9/
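To make the shape of such a statement concrete, here is a minimal sketch of the textbook bound behind this kind of guarantee. It assumes each sample independently reveals a disagreement with probability equal to the incoherence; it is not necessarily the paper's exact n(δ,ε). If incoherence exceeded ε, the chance of seeing no disagreement in n samples would be at most (1-ε)^n ≤ e^(-εn), which is at most δ once n ≥ ln(1/δ)/ε. The function name `samples_needed` is hypothetical.

```python
import math


def samples_needed(delta: float, eps: float) -> int:
    """Samples after which 'no disagreement seen' implies incoherence <= eps
    with confidence 1 - delta.

    Assumption (not from the paper): samples are independent and each one
    exposes a disagreement with probability equal to the incoherence.
    Uses (1 - eps)**n <= exp(-eps * n) <= delta  <=>  n >= ln(1/delta) / eps.
    """
    if not (0 < delta < 1 and 0 < eps < 1):
        raise ValueError("delta and eps must lie in (0, 1)")
    return math.ceil(math.log(1 / delta) / eps)


if __name__ == "__main__":
    # 95% confidence that incoherence is at most 1%.
    print(samples_needed(delta=0.05, eps=0.01))  # → 300
```

The bound grows only logarithmically in 1/δ but linearly in 1/ε, so tightening the incoherence threshold is far more expensive than raising the confidence.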

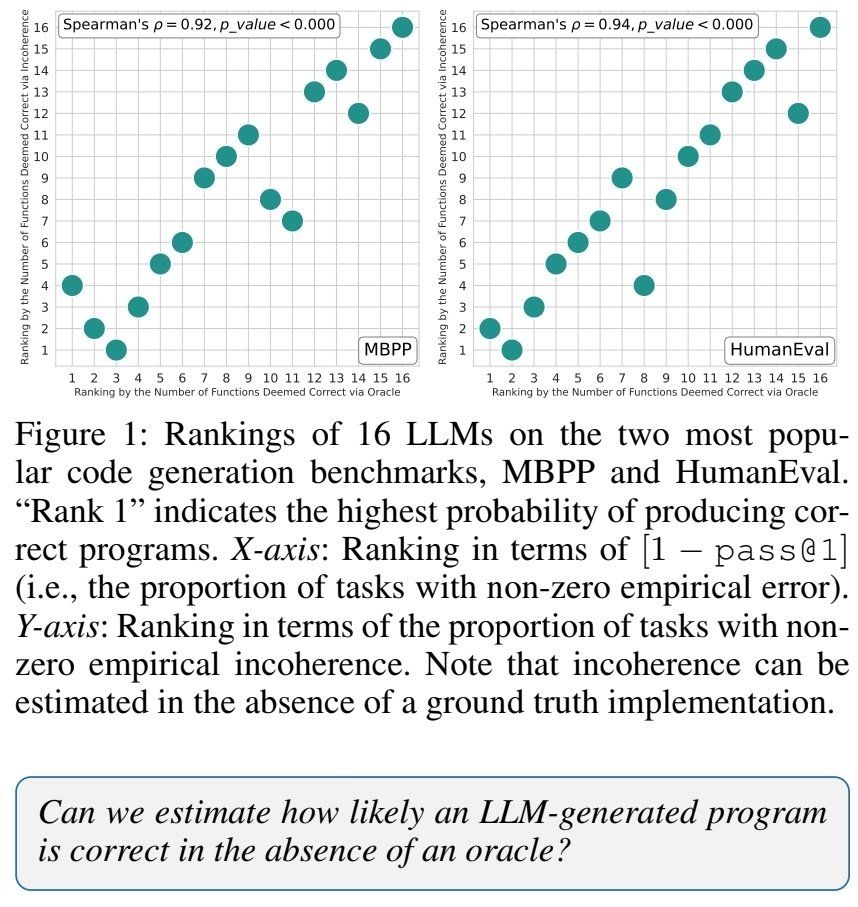

Our incoherence-based detection indeed reports no false positives: non-zero incoherence implies non-zero error, even empirically. To quote the AI reviewer: "If two sampled programs disagree on an input, at least one of them is wrong".

8/
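The reviewer's one-liner can be sketched as a disagreement check. This is a minimal illustration, assuming the sampled programs are plain Python functions from int to int; `find_disagreement` and the toy candidates are hypothetical, not the paper's implementation. A returned witness input proves at least one program wrong; returning `None` proves nothing by itself.

```python
from typing import Callable, Iterable, Optional, Sequence, Tuple

# Hypothetical stand-in for an LLM-sampled program.
Program = Callable[[int], int]


def find_disagreement(
    programs: Sequence[Program], inputs: Iterable[int]
) -> Optional[Tuple[int, int, int]]:
    """Return (input, i, j) where programs[i] and programs[j] disagree, else None.

    Comparing every output against the first one suffices: if any two
    outputs differ, at least one of them differs from outputs[0].
    """
    for x in inputs:
        outputs = [p(x) for p in programs]
        for j in range(1, len(outputs)):
            if outputs[j] != outputs[0]:
                return (x, 0, j)
    return None


if __name__ == "__main__":
    # Three toy "samples" for absolute value; the last one is buggy.
    candidates = [abs, lambda x: x if x >= 0 else -x, lambda x: x]
    print(find_disagreement(candidates, range(-3, 4)))  # → (-3, 0, 2)
```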

7/

But YES, the AI reviewer found a bug in our Equation (12). Wow!!

Nothing reject-worthy, though: it is not a key equation but a remark, and the error is just a typo; e.g., 1-\bar{E}(S,1,1) fixes it.

6/

Interestingly, one item (highlighted in blue) is never mentioned in our paper, yet it is something we are now actively pursuing.

4/

Apart from a minor error (we need no executable semantics, only the ability to execute), the first strength looks good.

3/

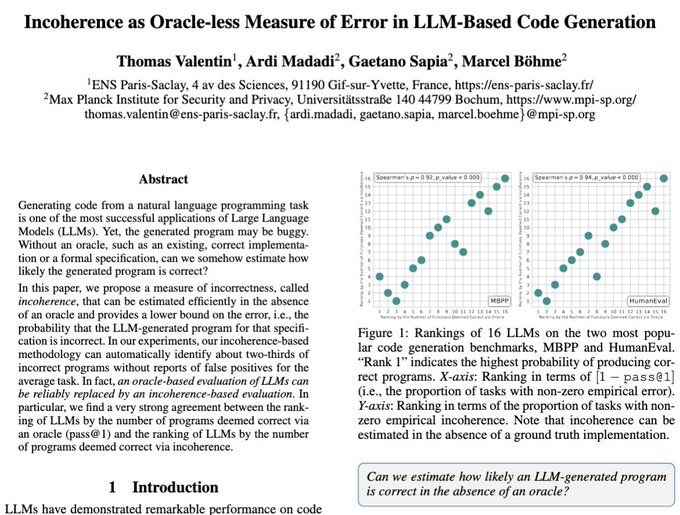

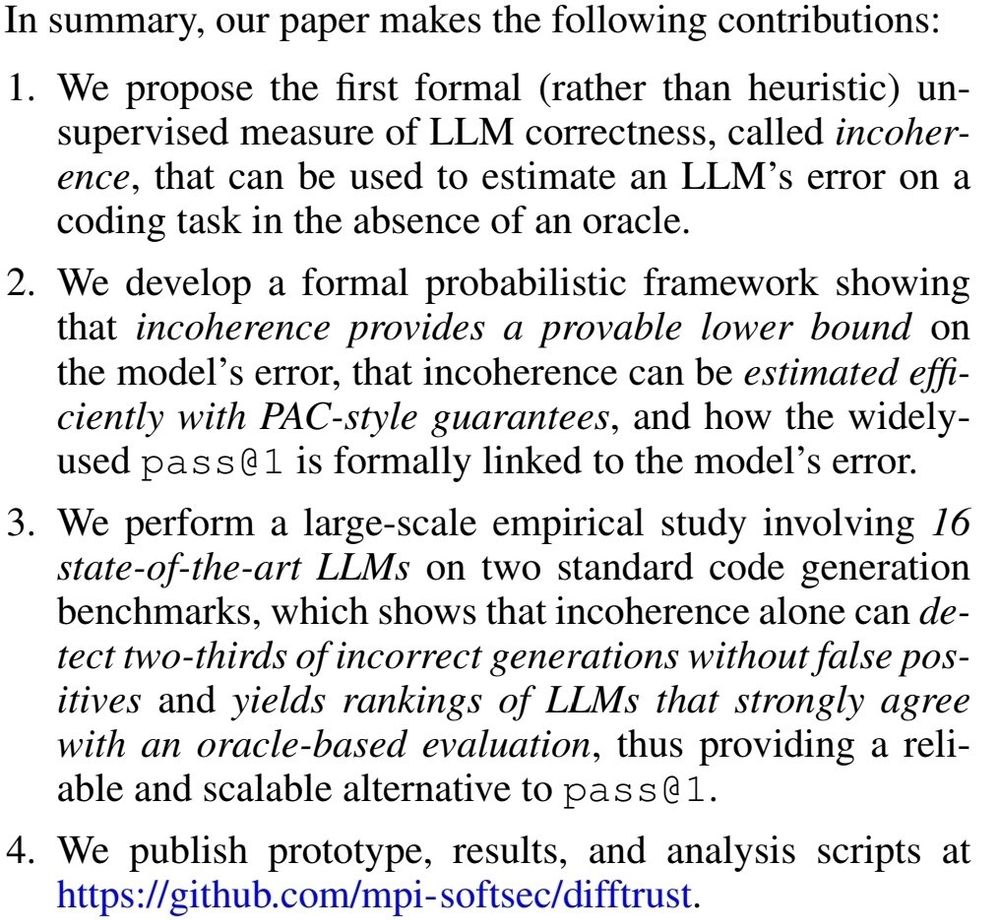

The review summary definitely hits the nail on the head. It captures both our motivation and our main contributions. Nice!

2/

📝: arxiv.org/abs/2507.00057

🦋 : bsky.app/profile/did:...

We are off to a good start. While the synopsis misses the motivation (*why* this is interesting), it covers the most important points. A good abstract-length summary.

1/

📝 arxiv.org/abs/2507.00057

with Thomas Valentin (ENS Paris-Saclay), Ardi Madadi, and Gaetano Sapia (#MPI_SP).

🚩 Auto-generating them gives false positives.

👩‍💻 In-vivo fuzzing requires a user to configure the system and to execute the target.

🤖 Can we substitute the user and auto-generate configuration and executions?

Find out @ gpsapia.github.io/files/ICSE_2...

📝 gpsapia.github.io/files/ICSE_2...

🧑‍💻 github.com/GPSapia/Reac...

How to scale automatic security testing to arbitrary systems?

If our paper gets accepted at #AAAI26, I will review our AI-generated review here 🤠

Comments and feedback welcome!

See y'all next year!

Thanks to the organizers:

* @rohan.padhye.org

* @yannicnoller.bsky.social

* @ruijiemeng.bsky.social and

* László Szekeres (Google)

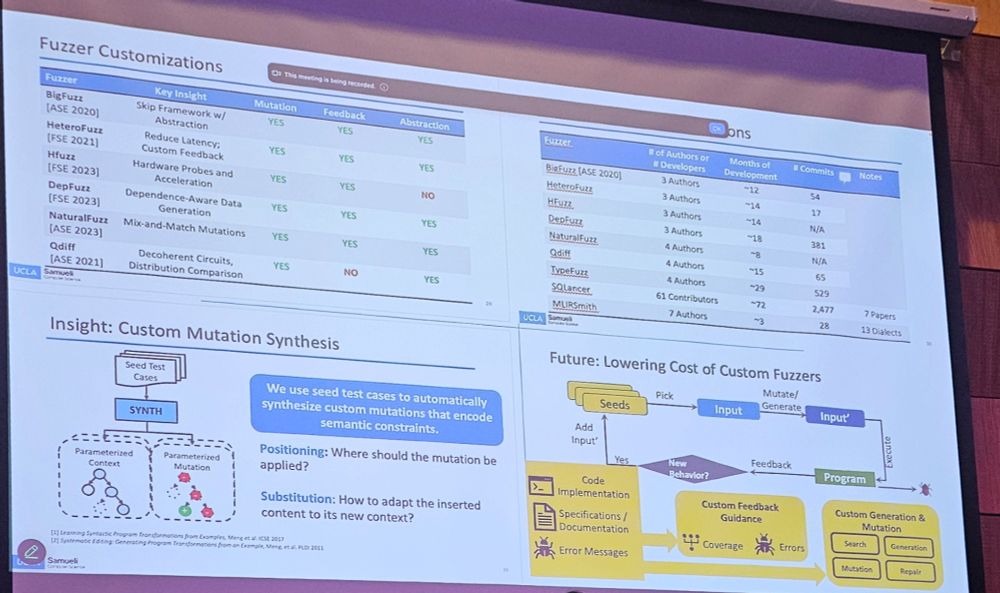

Basically a long-term perspective on the field meant for both researchers and practitioners.

📝 ieeexplore.ieee.org/stamp/stamp....

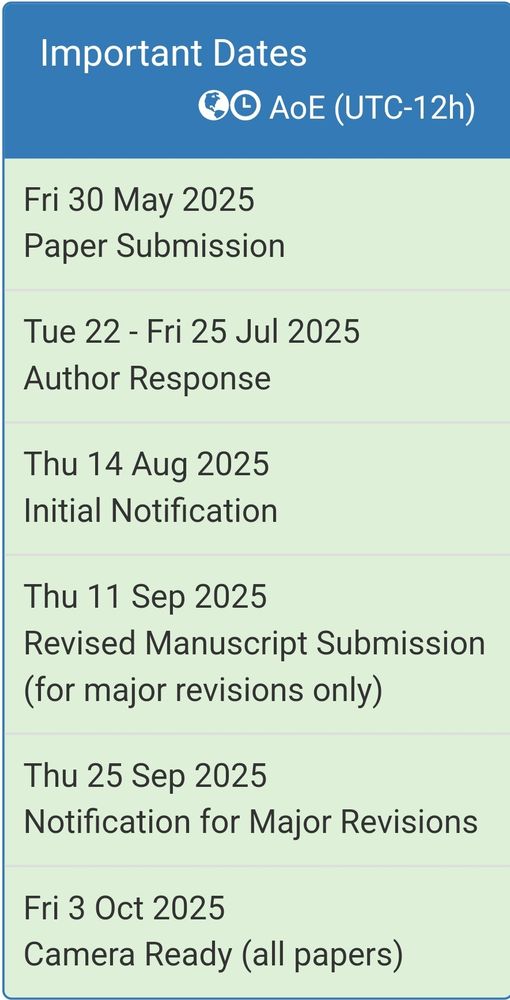

📝 conf.researchr.org/track/ase-20... (CfP)

📝 ase25.hotcrp.com/u/0/ (Submission)
