And of course, it’s open source! github.com/facebookrese...

📜 Paper: ai.meta.com/research/pub...

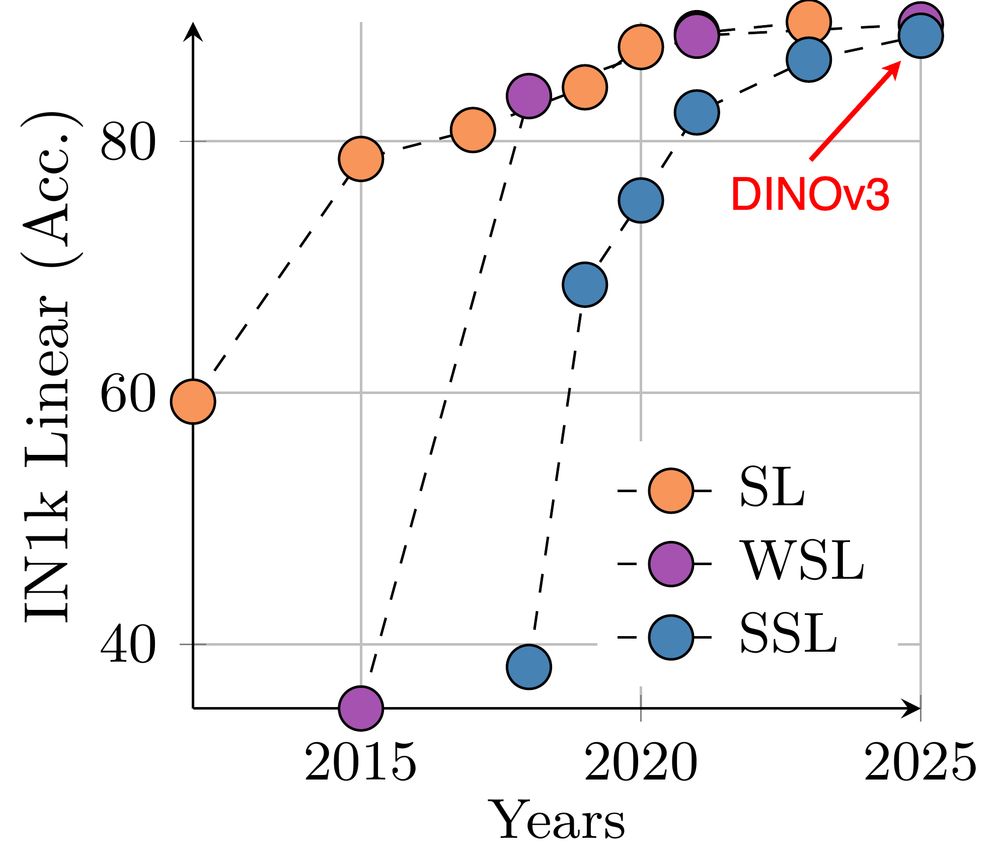

1) The promise of SSL is finally realized, enabling foundation models across domains

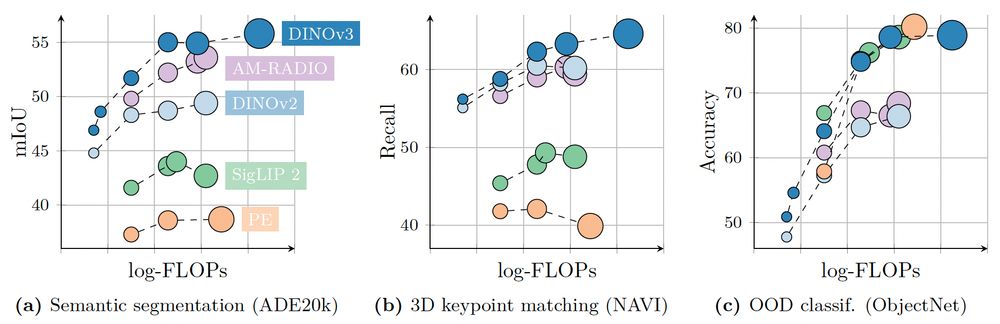

2) High quality dense features enabling SotA applications

3) A versatile family of models for diverse deployment scenarios

So many great ideas (Gram anchoring!) went into how we got there. Please read the paper!

• ViT-7B flagship model

• ViT-S/S+/B/L/H+ (21M-840M params)

• ConvNeXt variants for efficient inference

• Text-aligned ViT-L (dino.txt)

• ViT-L/7B for satellite

All inheriting the great dense features of the 7B!
