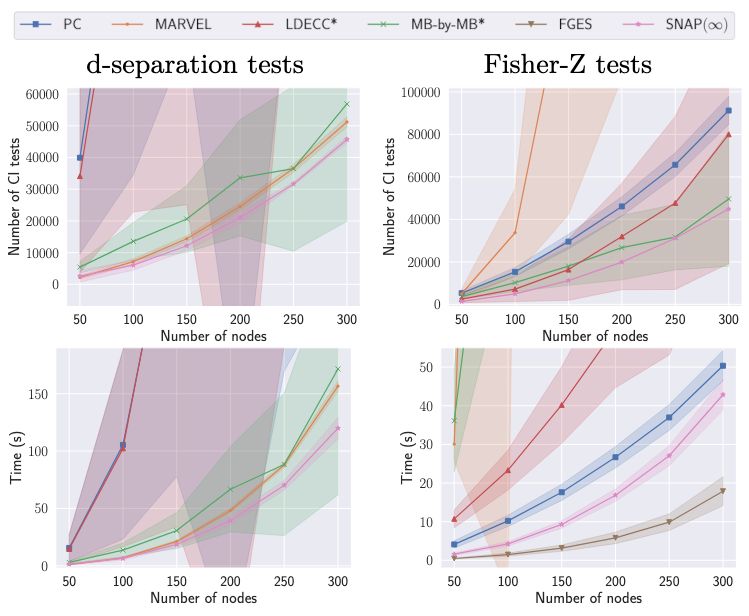

🏎️ is more computationally efficient than global methods, with runtimes close to those of local methods,

💎 recovers high-quality, statistically efficient adjustment sets,

🔮 and thus enables reliable causal effect estimation, even at scale.

7/8

➡ Learn causal relations between targets

✅ Test identifiability of the effect

🐣 Find explicit descendants of the treatment

🧩 Find mediators

🎯 Collect the optimal adjustment set

For unidentifiable effects, LOAD exits early and returns locally valid adjustments.
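As a rough illustration of what the last three steps produce, here is a minimal sketch that computes the graphical optimal adjustment set (the O-set) for one treatment `X` and one outcome `Y`, assuming the whole DAG is known and there are no latent confounders. That full-graph assumption is exactly what LOAD avoids by learning these pieces locally, so this is not LOAD's procedure. The helper names (`reachable`, `optimal_adjustment_set`) and the toy graph are hypothetical, and the identifiability test is not modelled: with a known DAG and no latent variables, the effect is always identifiable.

```python
# Hypothetical sketch: the graphical O-set for one treatment x and one outcome y,
# ASSUMING the full DAG is known and has no latent confounders.
# LOAD is designed to avoid needing the full graph; this is NOT its procedure.

def reachable(adjacency, start):
    """Nodes reachable from `start` along `adjacency` edges, including `start`."""
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for nxt in adjacency.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def optimal_adjustment_set(parents, x, y):
    """Return O(x, y) = pa(cn) minus forb, or None if y is not a descendant of x."""
    # Invert the parent lists into child lists.
    children = {}
    for node, pas in parents.items():
        for p in pas:
            children.setdefault(p, set()).add(node)

    de_x = reachable(children, x)  # descendants of the treatment (incl. x)
    if y not in de_x:
        return None  # no directed path x -> y: the total effect is zero

    # Causal nodes: mediators plus y, i.e. nodes other than x that are
    # descendants of x and ancestors of y.
    cn = (de_x & reachable(parents, y)) - {x}

    # Forbidden nodes: x together with all descendants of causal nodes.
    forb = {x}.union(*(reachable(children, v) for v in cn))

    # O-set: parents of causal nodes that are not forbidden.
    pa_cn = set().union(*(parents.get(v, set()) for v in cn))
    return pa_cn - forb


if __name__ == "__main__":
    # Toy DAG given as child -> set of parents: W -> X <- C -> Y,
    # X -> M -> Y, Z1 -> M, Z2 -> Y, and X -> D (D is not on a path to Y).
    toy = {
        "X": {"W", "C"},
        "M": {"X", "Z1"},
        "Y": {"M", "C", "Z2"},
        "D": {"X"},
    }
    print(sorted(optimal_adjustment_set(toy, "X", "Y")))  # ['C', 'Z1', 'Z2']
```

On this toy graph the result keeps the confounder `C` and the parents of the mediator and outcome (`Z1`, `Z2`), and drops the treatment's other parent `W` as well as the non-mediating descendant `D`.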

6/8

5/8

4/8

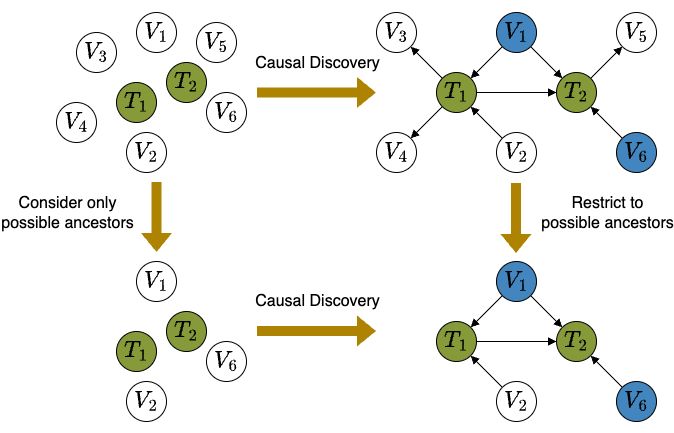

📍 Local discovery methods are fast, but can only find sub-optimal adjustment sets.

Can we get the best of both worlds and find optimal adjustment sets from local information?
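To see why a valid but sub-optimal set costs precision, here is a small simulation under stated assumptions. It uses a hypothetical linear-Gaussian model W → X → Y ← Z with illustrative coefficients. Adjusting for the treatment's parents pa(X) = {W}, one common choice that only needs information around X, is valid, but the O-set for this graph is {Z}. Both give unbiased OLS estimates of the effect of X, and the usual OLS variance formula predicts the pa(X) estimate to be roughly four times as variable here.

```python
import numpy as np

# Hypothetical linear-Gaussian SEM (all coefficients are illustrative assumptions):
#   X = alpha * W + noise,   Y = beta * X + gamma * Z + noise
# pa(X) = {W} is a valid adjustment set; the O-set for this graph is {Z}.


def simulate_estimates(n=500, reps=500, beta=1.0, alpha=1.0, gamma=1.0, seed=0):
    """Return the empirical variances of the OLS effect estimate
    when adjusting for pa(X) = {W} versus the O-set {Z}."""
    rng = np.random.default_rng(seed)
    est_pa, est_o = [], []
    for _ in range(reps):
        w = rng.normal(size=n)
        z = rng.normal(size=n)
        x = alpha * w + rng.normal(size=n)
        y = beta * x + gamma * z + rng.normal(size=n)
        for covariate, out in ((w, est_pa), (z, est_o)):
            # Regress Y on an intercept, X and the adjustment covariate.
            design = np.column_stack([np.ones(n), x, covariate])
            coef, *_ = np.linalg.lstsq(design, y, rcond=None)
            out.append(coef[1])  # OLS coefficient of X
    return np.var(est_pa), np.var(est_o)


if __name__ == "__main__":
    var_pa, var_o = simulate_estimates()
    print(f"variance adjusting for pa(X)={{W}}: {var_pa:.5f}")
    print(f"variance adjusting for O-set={{Z}}: {var_o:.5f}")
```

Adjusting for Z removes outcome noise while leaving all of X's variation available, whereas adjusting for W does the opposite. That asymmetry is what the optimal set exploits.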

3/8

But how can we find the optimal adjustment set when the causal graph is not available?
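For reference, when the DAG $\mathcal G$ is known and there are no latent confounders, the optimal adjustment set for a single treatment $X$ and outcome $Y$ has a closed graphical form (the O-set of Henckel, Perković and Maathuis). In causal linear models it gives the smallest asymptotic variance among valid adjustment sets. Evaluating it needs the parents of $Y$ and of every mediator, which is why the question is hard without the graph:

$$
\begin{aligned}
\operatorname{cn}(X,Y,\mathcal G) &= \{\, V \neq X : V \text{ lies on a directed path from } X \text{ to } Y \,\},\\
\operatorname{forb}(X,Y,\mathcal G) &= \operatorname{de}\big(\operatorname{cn}(X,Y,\mathcal G)\big) \cup \{X\},\\
\mathbf O(X,Y,\mathcal G) &= \operatorname{pa}\big(\operatorname{cn}(X,Y,\mathcal G)\big) \setminus \operatorname{forb}(X,Y,\mathcal G).
\end{aligned}
$$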

2/8