Matteo Fuoli

@matteofuoli.bsky.social

Associate Professor @Uni of Birmingham researching business communication, trust, fake news. He/him. Views my own.

All scripts, prompts, and data for full replication are available on our companion GitHub repository: github.com/Weihang-Huan...

GitHub - Weihang-Huang/MetaphorIdentification: This is the repository for metaphor detection project.

github.com

September 30, 2025 at 8:49 AM

The paper is now on arXiv: arxiv.org/abs/2509.24866

Co-authored with Weihang Huang, Jeannette Littlemore, Sarah Turner, and Ellen Wilding.

Metaphor identification using large language models: A comparison of RAG, prompt engineering, and fine-tuning

Metaphor is a pervasive feature of discourse and a powerful lens for examining cognition, emotion, and ideology. Large-scale analysis, however, has been constrained by the need for manual annotation d...

arxiv.org

September 30, 2025 at 8:49 AM

Crucially, we don't just see LLMs as a scaling tool. By examining where model outputs diverge from human annotations, we can test and refine theoretical understandings of metaphor.

September 30, 2025 at 8:49 AM

This will allow researchers to:

- Efficiently annotate large text corpora,

- Scale analyses and increase generalizability,

- Redirect human effort from tedious annotation toward higher-level interpretation and theory-building.

September 30, 2025 at 8:49 AM

Based on these results, we propose that LLMs can be used to semi-automate metaphor identification and annotation.

September 30, 2025 at 8:49 AM

Interestingly, the "errors" made by the top-performing models weren't random. They were systematic and interpretable, offering new insights into both model behavior and metaphor theory.
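One way to check whether disagreements are systematic rather than random is to tally them by word. The sketch below is only an illustration: the function name `divergence_profile`, the token-level boolean labels, and the example sentence are all assumptions, not the procedure or data from the paper.

```python
from collections import Counter

def divergence_profile(tokens, human, model):
    """Return accuracy plus tallies of tokens where model and human labels differ.

    tokens, human, and model are parallel lists; labels are booleans
    (True = metaphorically used). This token-level setup is an assumption.
    """
    if not (len(tokens) == len(human) == len(model)) or not tokens:
        raise ValueError("need three non-empty lists of equal length")
    false_pos = Counter()  # model marks a metaphor, human annotator does not
    false_neg = Counter()  # human annotator marks a metaphor, model does not
    correct = 0
    for tok, h, m in zip(tokens, human, model):
        if h == m:
            correct += 1
        elif m:
            false_pos[tok.lower()] += 1
        else:
            false_neg[tok.lower()] += 1
    return correct / len(tokens), false_pos, false_neg

# Invented labels for illustration only.
tokens = ["Prices", "climbed", "in", "the", "heart", "of", "the", "city", "in", "spring"]
human = [False, True, False, False, True, False, False, False, False, False]
model = [False, True, True, False, True, False, False, False, True, False]

accuracy, false_pos, false_neg = divergence_profile(tokens, human, model)
print(accuracy, false_pos.most_common(3), false_neg.most_common(3))
```

If the same words or word classes dominate the tallies, the divergence is patterned and worth interpreting rather than treating as noise.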

September 30, 2025 at 8:49 AM

These are solid results, especially considering:

1) Metaphor identification is complex - researchers have debated best practices for over 20 years.

2) Even humans don't fully agree on what counts as a metaphor, with inter-annotator reliability (Cohen's kappa) typically ranging from 0.56 to 0.88.
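For readers unfamiliar with the reliability figure cited above, Cohen's kappa corrects raw agreement between two annotators for the agreement expected by chance. A minimal sketch in plain Python follows; the function name `cohen_kappa` and the labels ("M" for metaphor, "L" for literal) are made up for illustration and are not data from the paper.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both annotators.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label proportions, summed over labels.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in set(counts_a) | set(counts_b)) / (n * n)
    if p_e == 1.0:
        # Both annotators used a single identical label; kappa is undefined,
        # so this sketch returns 1.0 by convention (an assumption).
        return 1.0
    return (p_o - p_e) / (1 - p_e)

# Invented labels for illustration only.
annotator_1 = ["M", "M", "L", "L", "M", "L", "L", "L", "M", "L"]
annotator_2 = ["M", "L", "L", "L", "M", "L", "M", "L", "M", "L"]

kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 2))  # raw agreement is 0.8, kappa is about 0.58
```

Here the annotators agree on 8 of 10 items, yet kappa is only about 0.58, which shows why kappa values in the cited range can coexist with fairly high raw agreement.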

September 30, 2025 at 8:49 AM

We found that state-of-the-art closed-source LLMs performed very well, with fine-tuned models reaching 79% accuracy. Even with just 8 annotated example sentences and a chain-of-thought prompt, we achieved 76% accuracy, remarkably close to the fine-tuned results.

September 30, 2025 at 8:49 AM

We compared three approaches:

1) RAG: the model receives a codebook and is instructed to annotate texts based on it.

2) Prompt engineering: we designed task-specific instructions and tested zero-shot, few-shot, and chain-of-thought prompts.

3) Fine-tuning: the model is trained on hand-coded texts.
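To make the second approach concrete, here is a rough sketch of how a few-shot chain-of-thought prompt could be assembled. Everything in it is hypothetical: the function name `build_few_shot_cot_prompt`, the instruction wording, and the two example sentences are invented for illustration (the study used 8 annotated examples). The actual prompts are in the companion repository.

```python
def build_few_shot_cot_prompt(examples, target_sentence):
    """Assemble a few-shot chain-of-thought prompt as a single string.

    examples: list of (sentence, reasoning, metaphor_words) tuples.
    The instruction wording below is a placeholder, not the paper's prompt.
    """
    parts = [
        "Identify the metaphorically used words in each sentence. "
        "Reason step by step before giving the answer."
    ]
    for sentence, reasoning, metaphor_words in examples:
        parts.append(
            f"Sentence: {sentence}\n"
            f"Reasoning: {reasoning}\n"
            f"Metaphor words: {', '.join(metaphor_words) or 'none'}"
        )
    # The target sentence ends with an open "Reasoning:" slot for the model to fill.
    parts.append(f"Sentence: {target_sentence}\nReasoning:")
    return "\n\n".join(parts)

# Invented examples for illustration only.
examples = [
    ("She attacked my argument.",
     "'attacked' basically means physical assault; here it means criticised.",
     ["attacked"]),
    ("The cat slept on the sofa.",
     "Every word is used in its basic, concrete sense.",
     []),
]

prompt = build_few_shot_cot_prompt(examples, "Inflation is eating into our savings.")
print(prompt)
```

A zero-shot variant would pass an empty `examples` list, leaving only the instruction and the target sentence.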

September 30, 2025 at 8:49 AM

Try plopping your code into ChatGPT and telling it how you'd like the labels to change!

July 9, 2025 at 1:49 PM

p.s.: the production team (not me - I promise!) swapped the captions for figures 1 and 2 🥴

July 4, 2025 at 8:53 AM

It was super fun to work on this paper with the brilliant Samantha Ford! Incidentally, this paper has been in the works for almost 10 years - I first presented it at RaAM back in 2016! It’s not procrastination, it’s letting the ideas *marinate* 😅

July 4, 2025 at 8:53 AM

Our paper connects with several areas of research in discourse studies and cognitive linguistics, including topics like "metaphor resistance", visual and multimodal metaphor, creativity, multimodal argumentation, blaming and protest discourses.

July 4, 2025 at 8:53 AM

With tools like image editing and generative AI, digital activism is rapidly evolving. Subverting visual and verbal texts is now more accessible than ever, offering new creative ways to expose injustice and advocate for change.

July 4, 2025 at 8:53 AM

We go beyond metaphors, examining how metonymy, irony, hyperbole, and more can be wielded as tools for resistance. These tropes, combined with visuals, create nuanced counterarguments that resonate emotionally.

July 4, 2025 at 8:53 AM

Our typology can be used to analyze other forms of figurative subversion, including political cartoons, street art (e.g. Banksy), contemporary conceptual art, memes, protest signs at political rallies, mockumentaries, and mash-up video clips circulated on social media.

July 4, 2025 at 8:53 AM

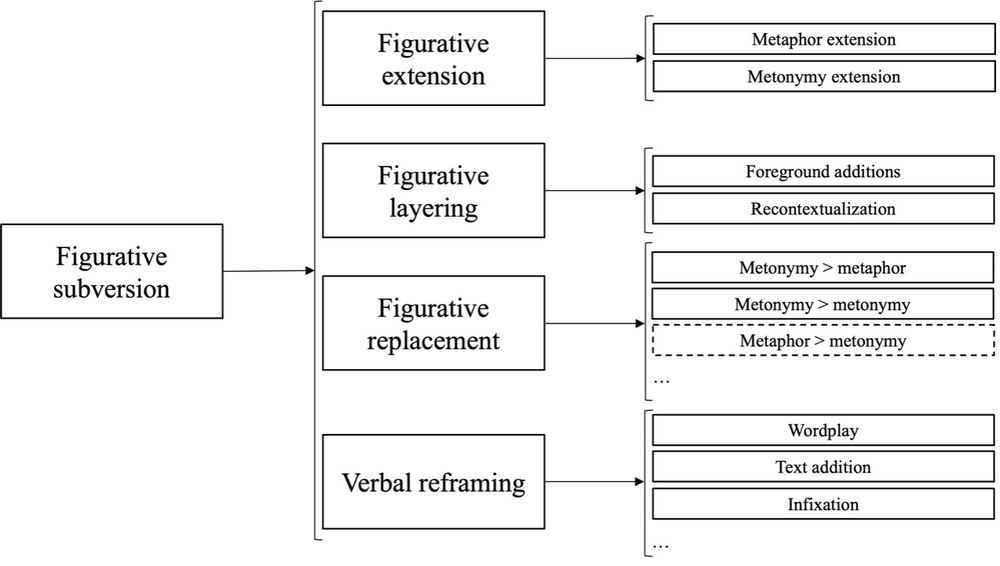

We identify four key figurative subversion strategies, which can be used individually or in combination to build a persuasive visual/multimodal argument.

- Figurative extension

- Figurative layering

- Figurative replacement

- Verbal reframing

July 4, 2025 at 8:53 AM

We look at Greenpeace's 2010 "Behind the Logo" campaign, where activists cleverly reworked BP’s logo to call out its "green" branding, as a case study on how people can use figurative and visual strategies to subvert corporate messaging.

July 4, 2025 at 8:53 AM
