(1) directly from experience, (2) through offline datasets and (3) with foundation models (LLMs).

We present each method through the fundamental challenges of decision making, namely:

(a) exploration, (b) credit assignment, and (c) transferability.

When and in what way should we expect these methods to benefit agents? What are the trade-offs involved?

Computers are built on this same principle.

How will AI agents discover and use such structure? What is "good" structure in the first place?

But how do we discover such temporal structure?

Hierarchical RL provides a natural formalism, yet many questions remain open.

Here's our overview of the field🧵

With the advent of thinking models, it would be interesting to further investigate this.

TL;DR: Hierarchy affords learnability.

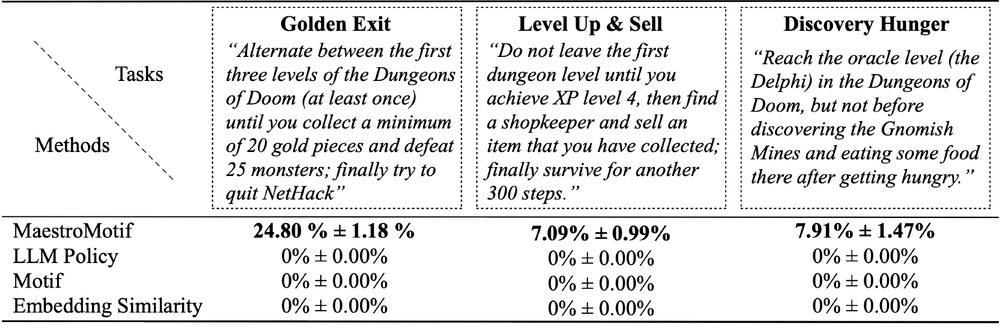

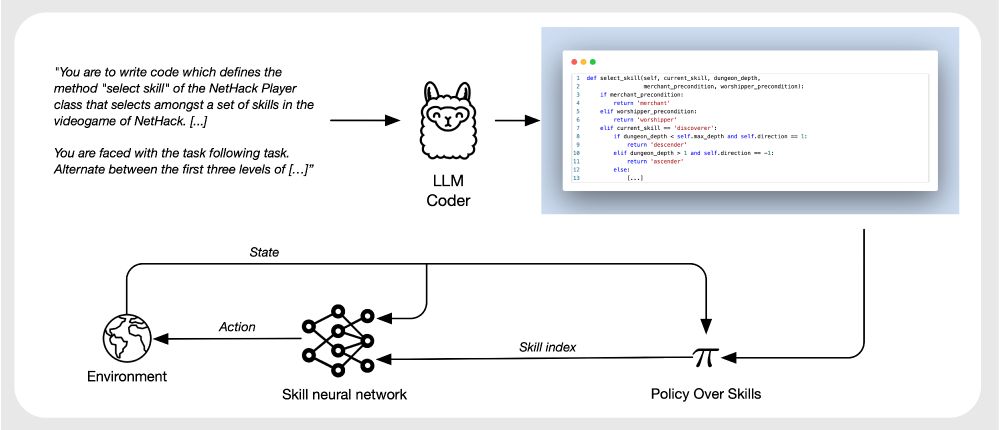

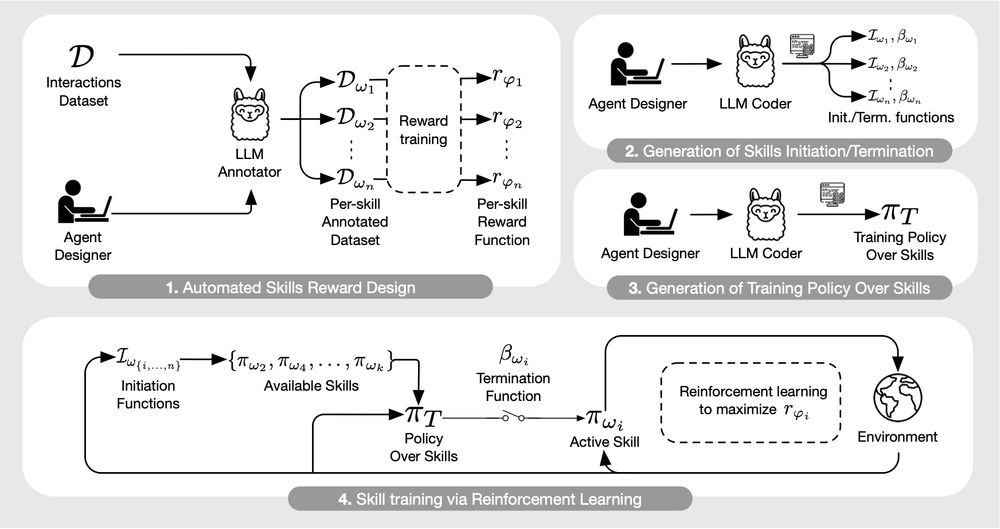

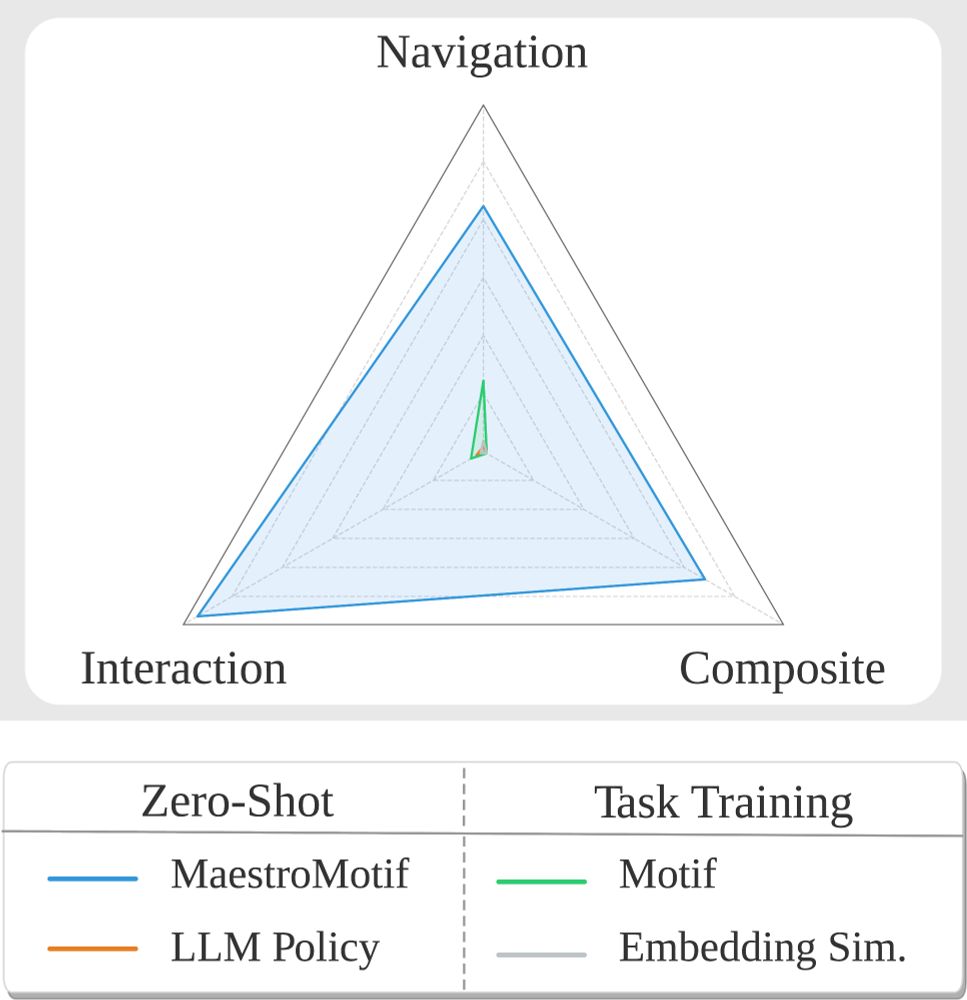

We present MaestroMotif, a method for skill design that produces highly capable and steerable hierarchical agents.

Paper: arxiv.org/abs/2412.08542

Code: github.com/mklissa/maestromotif