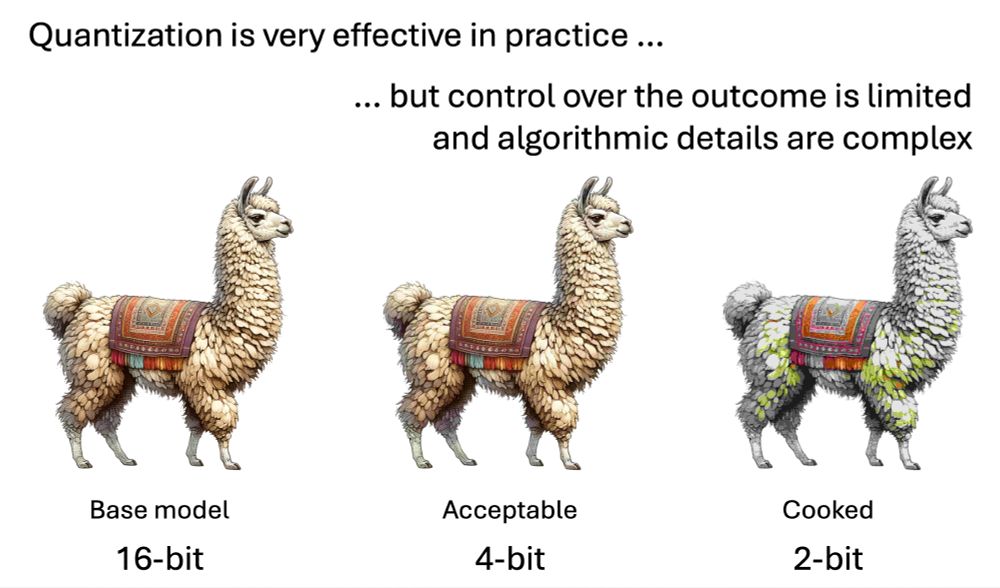

We all know how intuitive and seamless image compression is: use a slider to specify your target size and get an instant preview.

Our quest: Can compressing an LLM be just as easy?

🧵👇
