Open Source AI early and often

@sparkycollier on Twitter and elsewhere

Links: markcollier.me

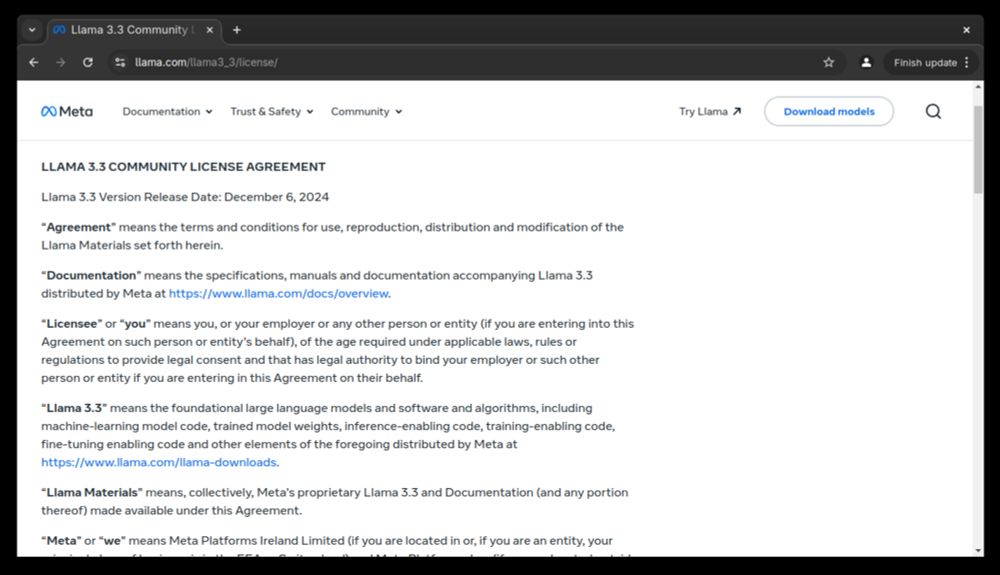

> If you're distributing or redistributing an LLM that is under the "Llama 3.3 Community License Agreement", you might be breaking at least one of the terms you've explicitly or implicitly agreed to.

notes.victor.earth/youre-probab...

OpenStack, Kata Containers, StarlingX, Zuul and many other open source infrastructure projects will be discussed, along with OpenInfra for AI and the mass migration from VMware to OpenStack.

Mark your calendars and practice your French!

Read more about the upcoming event and how you can help build the OpenInfra Summit Europe! openinfra.dev/blog/openinf...

tfir.io/openinfra-fo...

AlphaGeometry2 (AG2) has now surpassed the average gold medalist in solving Olympiad geometry problems, with a solve rate of 84%, up from 54% previously!

Paper: arxiv.org/abs/2502.03544

See the full list of authors at the link.

arxiv.org/abs/2502.04144

hd-epic.github.io

What makes the dataset unique is the level of detail in its annotations: 263 annotations per minute across 41 hours of video.

In parallel, research data-efficient models that can be trained on 100M tokens. This is comparable both to the amount of language humans need to develop speech and to the size of Wikipedia, showing that it's possible for a community to curate this amount of data.

www.openstack.org/blog/opensta...

He killed it, and as a result, Pulitzer Prize-winning editorial cartoonist Ann Telnaes quit.

Make sure everyone sees this cartoon.

So don't even worry about it.