previous: postdoc @ umass_nlp

phd from utokyo

https://marzenakrp.github.io/

Authors have sued LLM companies for using books w/o permission for model training.

Courts, however, need empirical evidence of market harm. Our preregistered study addresses exactly this gap.

Joint work w Jane Ginsburg from Columbia Law and @dhillonp.bsky.social 1/n🧵

"AI use in American newspapers is widespread, uneven, and rarely disclosed"

arxiv.org/abs/2510.18774

Sadly, it is mostly *undisclosed*, meaning that readers are often unaware that they are consuming LLM text.

Even worse, we find some of these texts making it to the print press (undisclosed)

Can we at least be honest about using models for editing?

We examine 186k articles published this summer and find that ~9% are either fully or partially AI-generated, usually without readers having any idea.

Here's what we learned about how AI is influencing local and national journalism:

MME focuses on resources, metrics & methodologies for evaluating multilingual systems! multilingual-multicultural-evaluation.github.io

📅 Workshop Mar 24–29, 2026

🗓️ Submit by Dec 19, 2025

www.pnas.org/doi/10.1073/...

www.pangram.com/history/01bf...

Proof: different articles present at the specified journal/volume/page number, and their titles exist nowhere on any searchable repository.

Take this as a warning to not use LMs to generate your references!

saxon.me/blog/2025/co...

📍4:30–6:30 PM / Room 710 – Poster #8

Now on arXiv: arxiv.org/abs/2508.16599

But don't draw conclusions just yet: automatic metrics are biased in favor of techniques like using a metric as a reward model or MBR. The official human ranking will be part of the General MT findings at WMT.

arxiv.org/abs/2508.14909

This includes over 1800 main conference papers and over 1400 papers in findings!

Congratulations to all authors!! 🎉🎉🎉

Additionally, we present evidence that both *syntactic* and *discourse* diversity measures show strong homogenization that the lexical and cosine measures used in this paper do not capture.

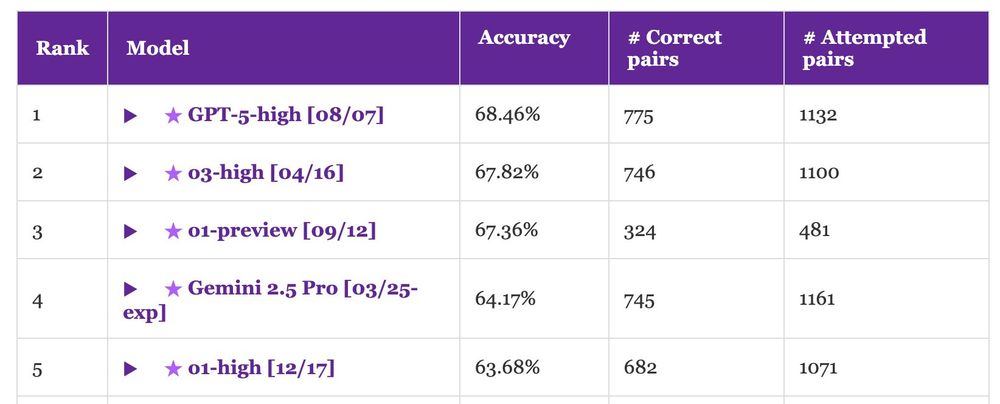

That said, this is a tiny improvement (~1%) over o1-preview, which was released almost one year ago. Have long-context models hit a wall?

Accuracy of human readers is >97%... Long way to go!

@marisahudspeth.bsky.social, Polly Stokes, Jacquie Kurland, and @brenocon.bsky.social

📍Hall 4/5.

Come by to chat about argumentation, narrative texts, policy & law, and beyond! #ACL2025NLP

🗓️30 July, 11 AM: 𝛿-Stance: A Large-Scale Real World Dataset of Stances in Legal Argumentation. w/ Douglas Rice and @brenocon.bsky.social

📍At Hall 4/5. 🧵👇

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out NIAH isn't so easy after all!

Our analysis across 26 languages 🧵👇

Here’s one answer from researchers across NLP/MT, Translation Studies, and HCI.

"An Interdisciplinary Approach to Human-Centered Machine Translation"

arxiv.org/abs/2506.13468
