📍 @ml4science.bsky.social, Tübingen, Germany

Michael worked on "Machine Learning for Inference in Biophysical Neuroscience Simulations", focusing on simulation-based inference and differentiable simulation.

We wish him all the best for the next chapter! 👏🎓

What happens when dozens of SBI researchers and practitioners collaborate for a week? New inference methods, new documentation, lots of new embedding networks, a bridge to Pyro and a bridge between flow matching and score-based methods 🤯

1/7 🧵

Tired of training or tuning your inference network, or waiting for your simulations to finish? Our method NPE-PF can help: It provides training-free simulation-based inference, achieving competitive performance with orders of magnitude fewer simulations! ⚡️

By @ldattaro.bsky.social

#neuroskyence

www.thetransmitter.org/null-and-not...

www.cambridge.org/core/journal...

We’re looking for PhD students, postdocs and scientific programmers who want to use deep learning to build, optimize and study mechanistic models of neural computations. Full details: www.mackelab.org/jobs/ 1/5

If you're interested in simulation-based inference for time series, come chat with Manuel Gloeckler or Shoji Toyota at Poster #420, Saturday 10:00–12:00 in Hall 3.

📰: arxiv.org/abs/2411.02728

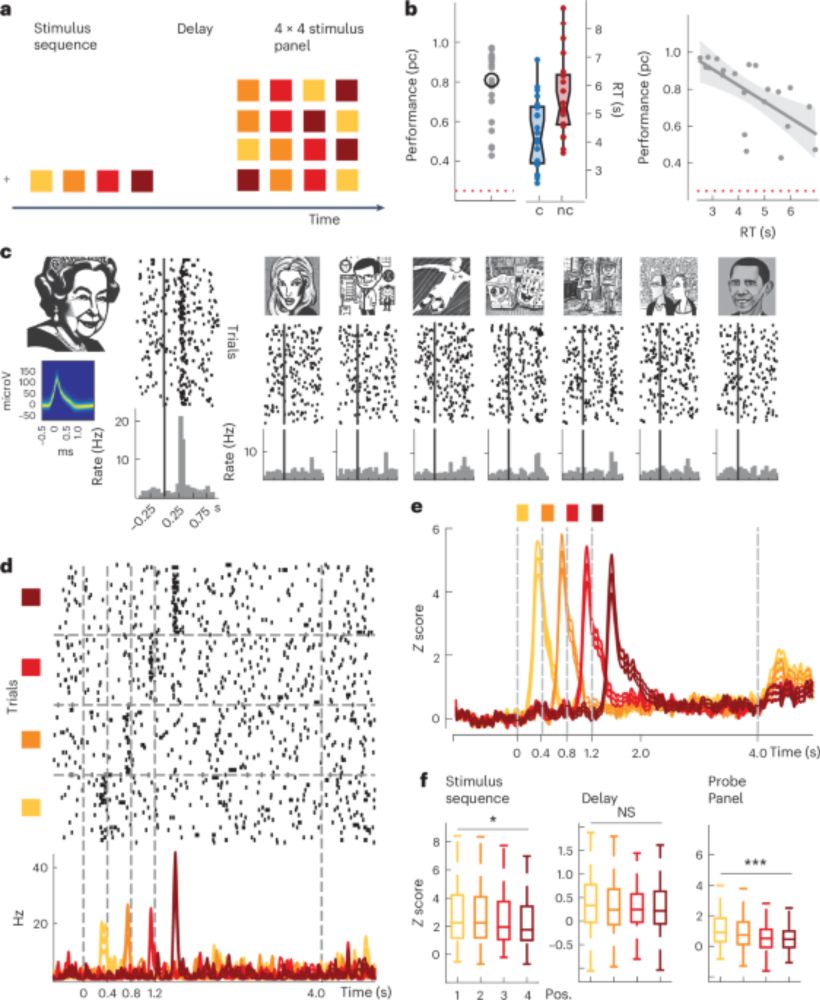

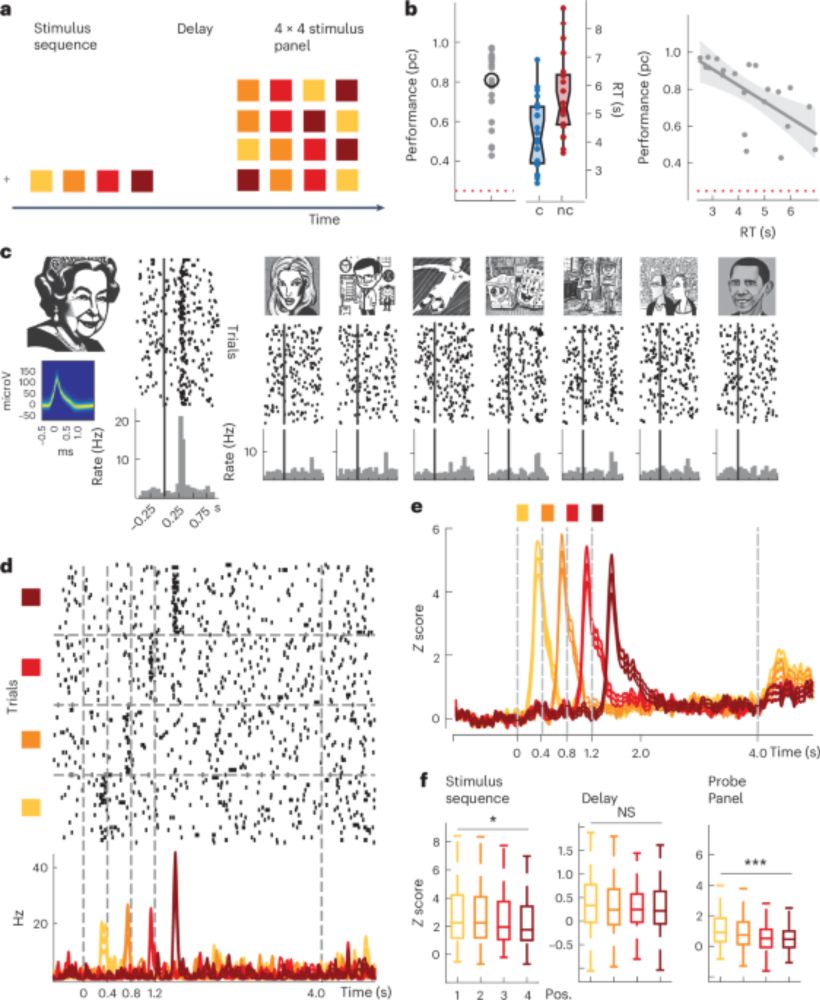

Also, a good reminder to share that our work is now out in Cell Reports 🙏🎊

⬇️

www.cell.com/cell-reports...

with 3 posters, 2 workshop talks, and a main conference contributed talk (for the very first time in Mackelab history 🎉)!

nature.com/articles/s41593-025-01893-7

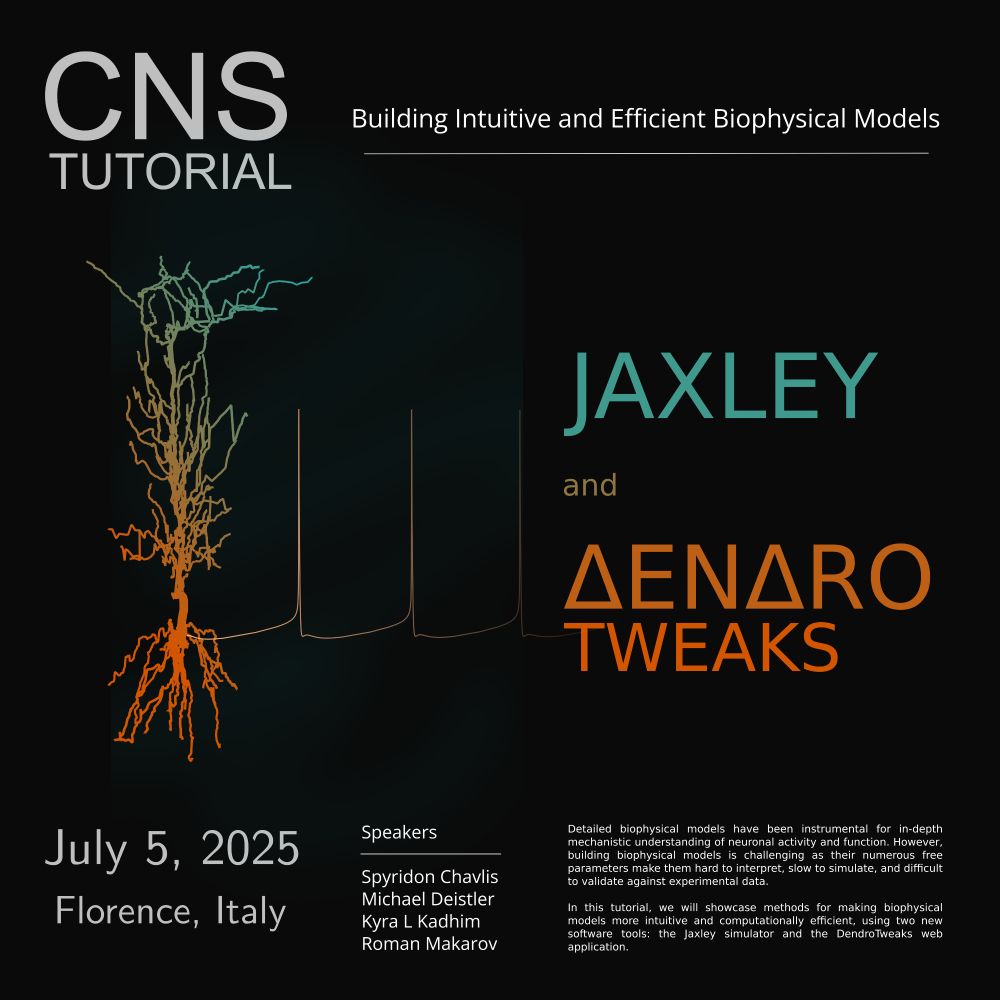

Join us in Florence if you like dendrites, biophysics, or optimization!

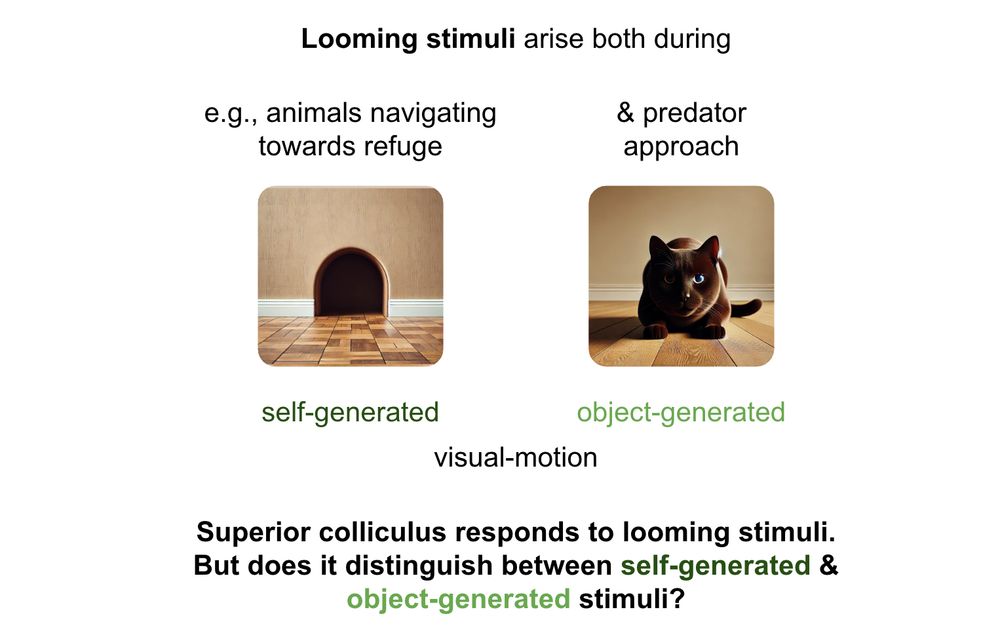

How do mice distinguish self-generated vs. object-generated looming stimuli? Our new study combines VR and neural recordings from superior colliculus (SC) 🧠🐭 to explore this question.

Check out our preprint doi.org/10.1101/2024... 🧵

📍Poster #4006 (East; 11 am PT)

Paper: openreview.net/forum?id=0cg...

Code: github.com/mackelab/sou...

(1/8)

Latent Diffusion for Neural Spiking data (LDNS), a latent variable model (LVM) which addresses 3 goals simultaneously:

A short thread 🧵

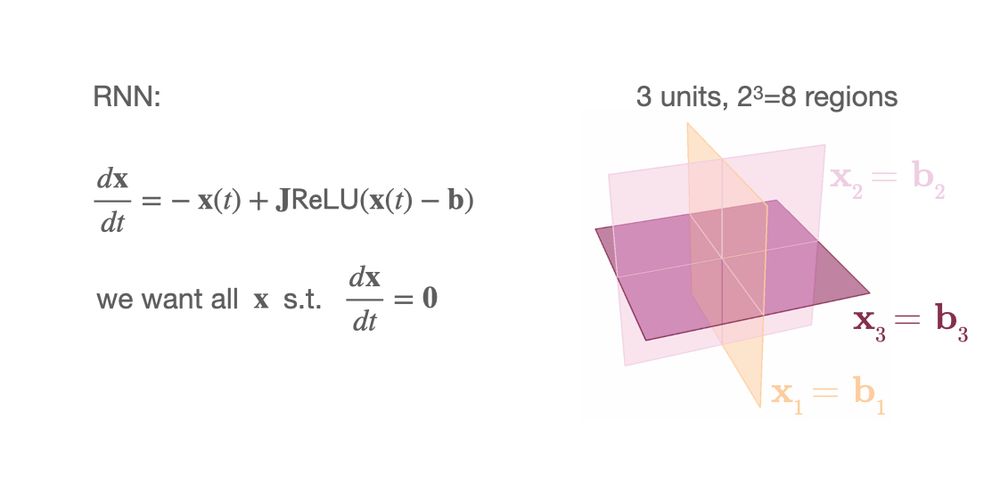

In RNNs with N units and ReLU(x-b) activations, the phase space is partitioned into 2^N regions by the hyperplanes x = b. 1/7
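
A minimal pure-Python sketch of the counting argument, assuming the state x is an N-vector and each unit i has its own threshold b_i, so unit i contributes the single hyperplane x_i = b_i. A point's on/off pattern across units identifies its region, and probing both sides of every hyperplane hits all 2^N patterns. Inside one region ReLU(x-b) reduces to a fixed 0/1 mask times (x-b), i.e. the map is linear there. The function names and example values are hypothetical, for illustration only, and are not taken from the thread's code.

```python
from itertools import product


def relu_shifted(x, b):
    """Elementwise ReLU(x - b)."""
    return [max(xi - bi, 0.0) for xi, bi in zip(x, b)]


def activation_pattern(x, b):
    """Which units are active (x_i > b_i); each distinct pattern is one region."""
    return tuple(xi > bi for xi, bi in zip(x, b))


def count_regions(b, offset=1.0):
    """Place one probe on each side of every hyperplane x_i = b_i
    (2^N probes in total) and count the distinct activation patterns."""
    patterns = set()
    for signs in product((-1.0, 1.0), repeat=len(b)):
        x = [bi + s * offset for bi, s in zip(b, signs)]
        patterns.add(activation_pattern(x, b))
    return len(patterns)


def local_linear_map(x, b):
    """Diagonal of the 0/1 mask D with ReLU(x - b) = D (x - b) in x's region."""
    return [1.0 if active else 0.0 for active in activation_pattern(x, b)]


# Hypothetical thresholds for N = 3 units.
b = [0.5, -0.2, 1.0]
print(count_regions(b))  # → 8, i.e. 2**3

# Within x's region, the ReLU agrees with the masked linear map.
x = [1.0, -1.0, 3.0]
d = local_linear_map(x, b)
print(relu_shifted(x, b) == [di * (xi - bi) for di, xi, bi in zip(d, x, b)])
```

Because each hyperplane is axis-aligned and depends on one unit only, every combination of active and inactive units is realizable, which is why the count is exactly 2^N rather than merely bounded by it.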

PhD students: Apply by Nov 15 (tomorrow!), directly to IMPRS-IS or ELLIS

For now let’s introduce ourselves with some pictures of our recent group retreat.
