Watch the video: youtu.be/vTI4cziw84Q

#NeuroAI2025 #AI #ML #LLMs #NeuroAI

HALT finetuning teaches LLMs to only generate content they’re confident is correct.

🔍 Insight: Post-training must be adjusted to the model’s capabilities.

⚖️ Tunable trade-off: Higher correctness 🔒 vs. More completeness 📝

🧵

See you in October in Montreal!

Increasingly I see inaccurate and badly written news stories authored by AI, many of which have actual humans listed as authors or editors.

news.cs.washington.edu/2025/01/07/w...

youtu.be/EiurL9eyUNc

In which I might or might not have said “I’m working to take the ‘ick’ out of ‘agentic’”

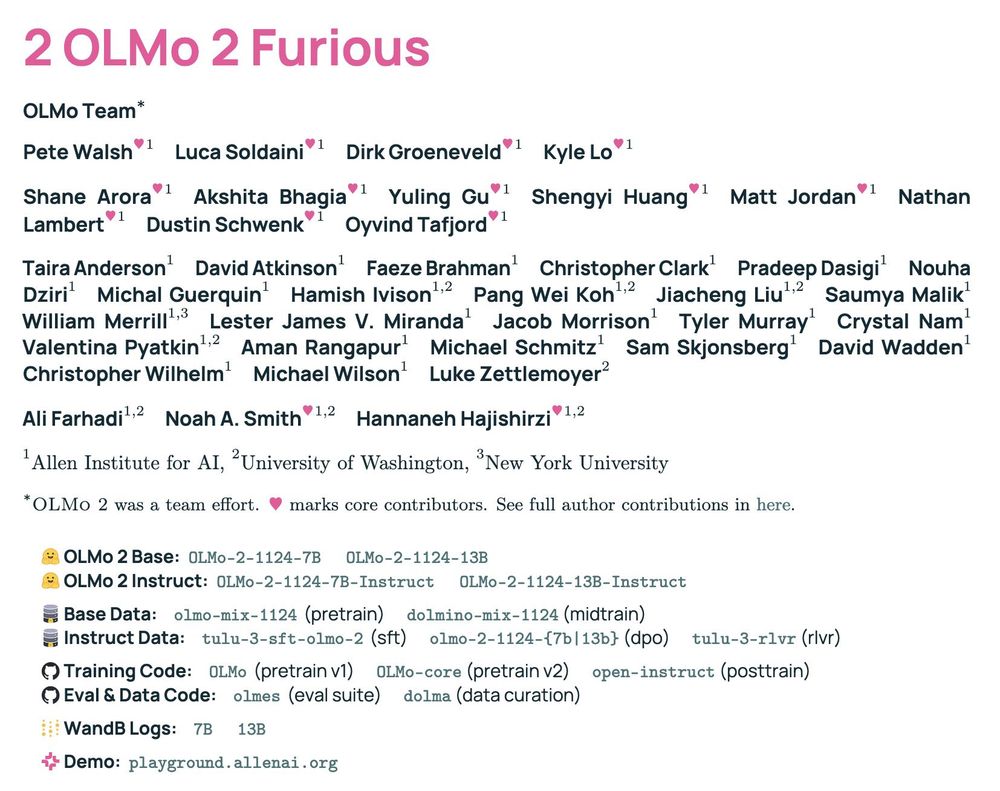

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

We release 🧙🏽‍♀️ DRUID to facilitate studies of context usage in real-world scenarios.

arxiv.org/abs/2412.17031

w/ @saravera.bsky.social, H.Yu, @rnv.bsky.social, C.Lioma, M.Maistro, @apepa.bsky.social and @iaugenstein.bsky.social ⭐️

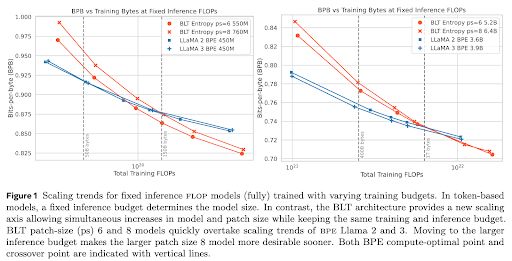

Paper 📄 dl.fbaipublicfiles.com/blt/BLT__Pat...

Code 🛠️ github.com/facebookrese...

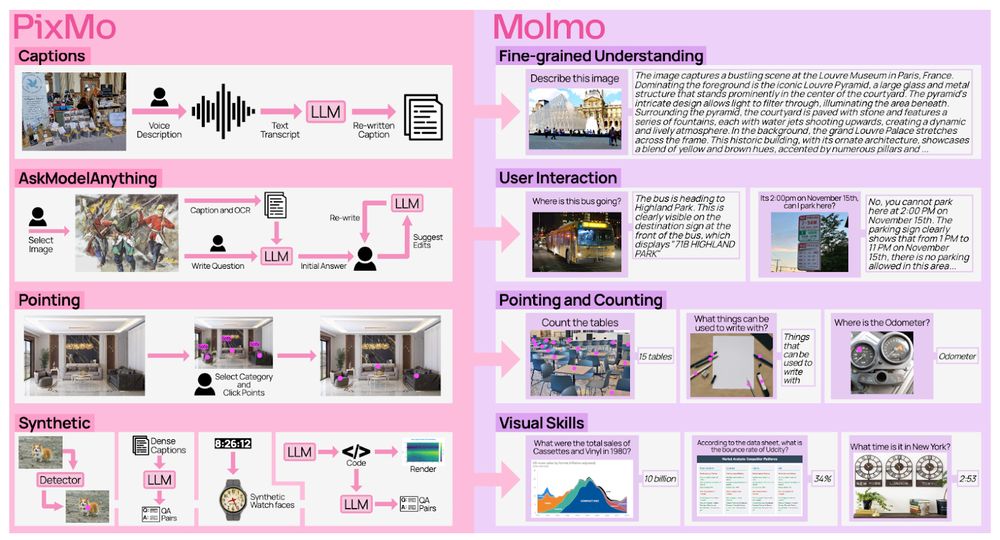

Training code, data, and everything you need to reproduce our models. Oh, and we have updated our tech report too!

Links in thread 👇

There's been a recent stir about terms of use restrictions on AI outputs & models. We dig into the legal analysis, questioning their enforceability.

Link: papers.ssrn.com/sol3/papers....

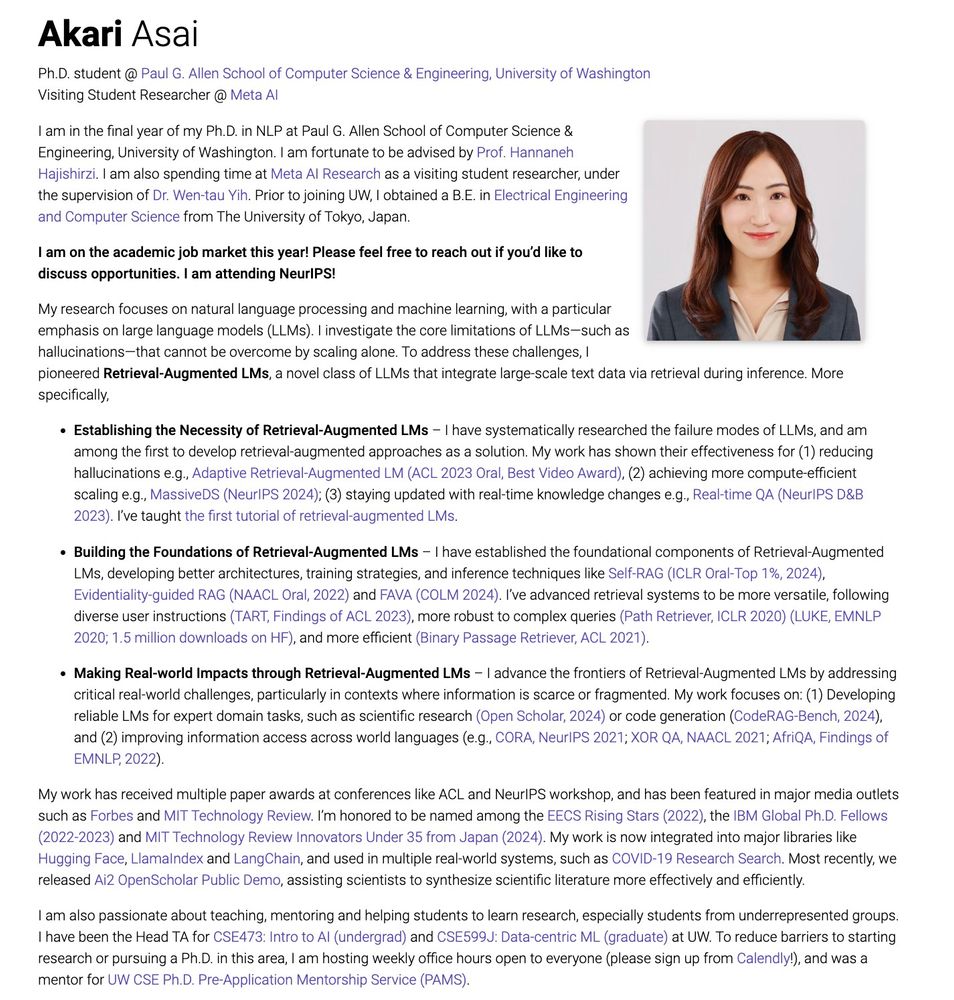

My Ph.D. work focuses on Retrieval-Augmented LMs to create more reliable AI systems 🧵

I develop autonomous systems for programming, research-level question answering, finding security vulnerabilities, and other useful and challenging tasks.

I do this by building frontier-pushing benchmarks and agents that do well on them.

See you at NeurIPS!

There are now several training configs that together reproduce the training runs that led to the final OLMo 2 models.

In particular, all the training data is available, tokenized and shuffled exactly as we trained on it!

See results in comments!

🔗 Arxiv link: arxiv.org/abs/2411.05025

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

Wouldn’t that be just super?

Simply the best fully open models yet.

Really proud of the work & the amazing team at @ai2.bsky.social

🐟 7B and 13B weights, trained up to 4-5T tokens, fully open data, code, etc

🐠 better architecture and recipe for training stability

🐡 staged training, with new data mix Dolmino🍕 added during annealing

🦈 state-of-the-art OLMo 2 Instruct models

#nlp #mlsky

links below👇

arxiv.org/abs/2407.036...
