Interests in game theory, reinforcement learning, and deep learning.

Website: https://www.lukemarris.info/

Google Scholar: https://scholar.google.com/citations?user=dvTeSX4AAAAJ

iclr.cc/virtual/2025... #IRL

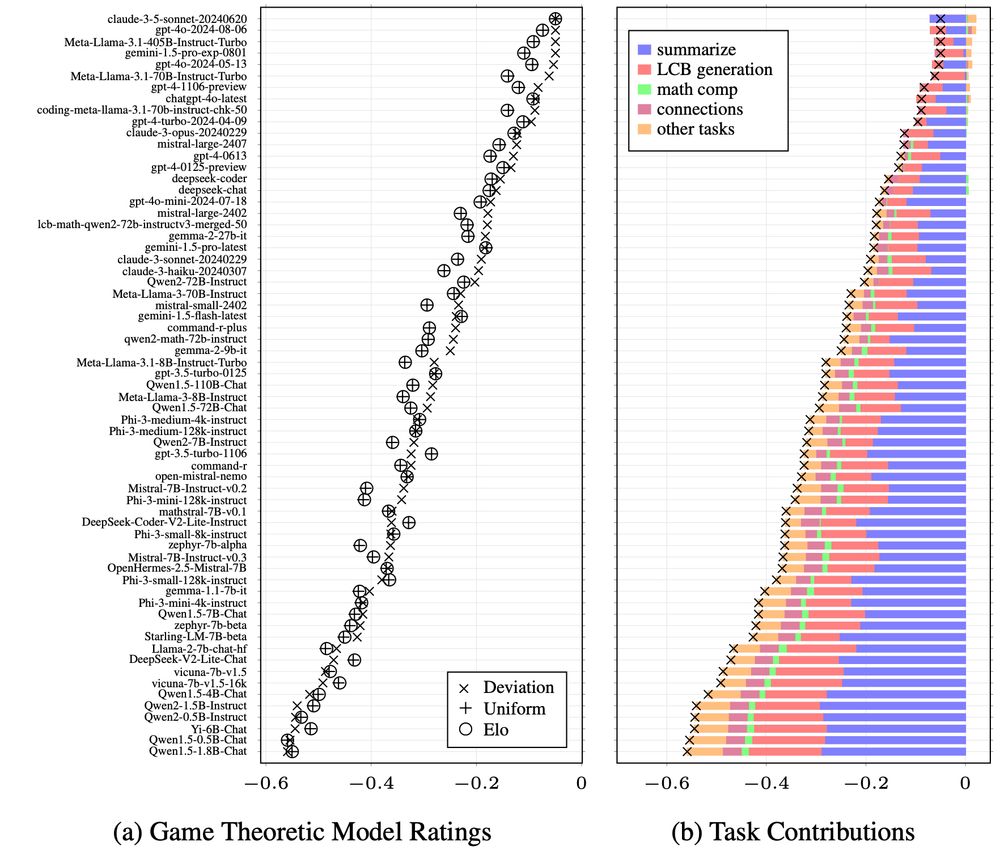

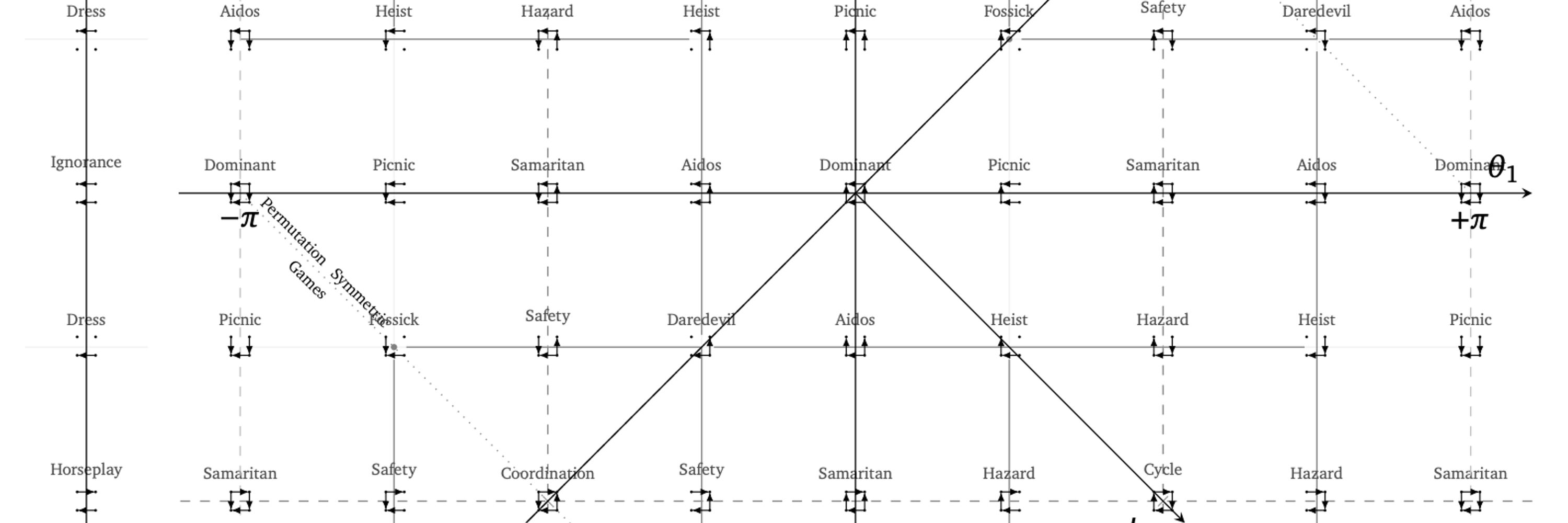

arxiv.org/abs/2502.20170
