🐟interns own major parts of our model development, sometimes even leading whole projects

🐡we're committed to open science & actively help our interns publish their work

reach out if u wanna build open language models together 🤝

links 👇

🔥training our VLM using RLVR with binary unit test rewards🔥

it's incredibly effective & unit test creation is easy to scale w synthetic data pipelines

check it out at olmocr.allen.ai
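
rough sketch of what a binary unit-test reward could look like. everything here is hypothetical: the `UnitTest` type, the function names, and especially the choice to average pass/fail results into one scalar per output. the post doesn't say how the real pipeline aggregates tests.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class UnitTest:
    """Hypothetical container: a name plus a pass/fail check on the model's output text."""
    name: str
    check: Callable[[str], bool]


def binary_rewards(output: str, tests: List[UnitTest]) -> List[float]:
    # Each test contributes exactly 1.0 (pass) or 0.0 (fail), no partial credit.
    return [1.0 if t.check(output) else 0.0 for t in tests]


def page_reward(output: str, tests: List[UnitTest]) -> float:
    # Assumption: the scalar reward for one output is the fraction of tests passed.
    scores = binary_rewards(output, tests)
    return sum(scores) / len(scores) if scores else 0.0


# Toy tests, made up for illustration only.
tests = [
    UnitTest("mentions_title", lambda s: "Results" in s),
    UnitTest("no_page_number", lambda s: "Page 3 of 10" not in s),
]
good = "# Results\nAccuracy improved."
bad = "Page 3 of 10\nAccuracy improved."
```

since each check is just a function from text to True/False, new tests can be generated programmatically, which is presumably what makes them easy to scale.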

findings from a large-scale survey of 800 researchers on how they use LMs in their research #colm2025

come chat w me about pretraining horror stories, data & evals, what we're cookin for next olmo, etc

made a 🔥 poster for thursday sess, come say hi

🐟 select test cases

🐠 score LM on each test

🦈 aggregate scores to estimate perf

fluid benchmarking is simple:

🍣 find max informative test cases

🍥 estimate 'ability', not simple avg perf

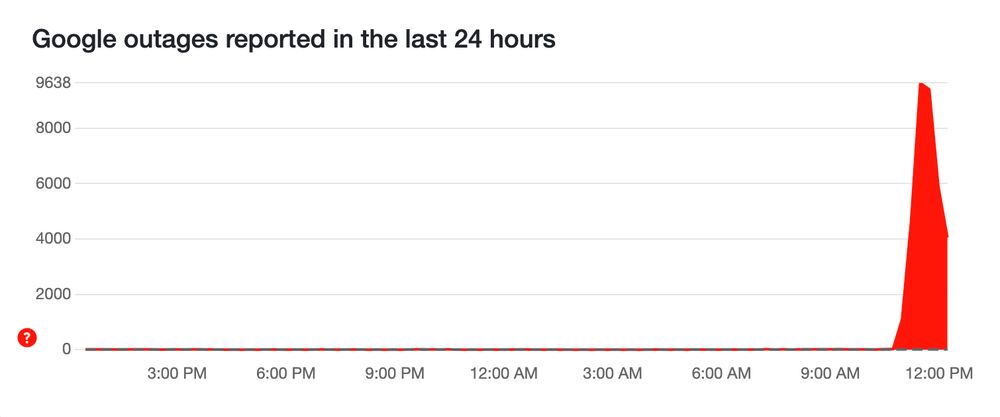

why care? turn ur grey noisy benchmarks to red ones!
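
a minimal sketch of the two fluid steps (pick the most informative test case, then estimate ability). the post doesn't name the underlying model, so this assumes a two-parameter item response theory (2PL) setup with already-fitted item parameters, Fisher information for selection, and a grid-search MAP estimate with a standard normal prior. all names here are hypothetical.

```python
import math
from typing import Callable, Dict, List, Set, Tuple

Item = Tuple[float, float]  # (discrimination a, difficulty b), assumed pre-fitted


def p_correct(theta: float, item: Item) -> float:
    # 2PL model: probability that an LM with ability theta gets this item right.
    a, b = item
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def fisher_information(theta: float, item: Item) -> float:
    # How much this item tells us about theta near the current estimate.
    a, _ = item
    p = p_correct(theta, item)
    return a * a * p * (1.0 - p)


def most_informative(theta: float, items: List[Item], used: Set[int]) -> int:
    # Pick the unused item with the highest information at the current estimate.
    candidates = [i for i in range(len(items)) if i not in used]
    return max(candidates, key=lambda i: fisher_information(theta, items[i]))


def estimate_ability(responses: Dict[int, int], items: List[Item]) -> float:
    # Grid-search MAP estimate; the N(0, 1) prior is an assumption that keeps
    # the estimate finite when every response so far is right (or wrong).
    def log_posterior(theta: float) -> float:
        lp = -0.5 * theta * theta
        for i, y in responses.items():
            p = p_correct(theta, items[i])
            lp += math.log(p) if y else math.log(1.0 - p)
        return lp

    grid = [g / 100.0 for g in range(-400, 401)]
    return max(grid, key=log_posterior)


def fluid_eval(items: List[Item], answer_fn: Callable[[int], int], n_steps: int):
    # answer_fn(i) returns 1 if the LM passes item i, else 0.
    theta, responses = 0.0, {}
    for _ in range(min(n_steps, len(items))):
        i = most_informative(theta, items, set(responses))
        responses[i] = answer_fn(i)
        theta = estimate_ability(responses, items)
    return theta, responses


items = [(1.0, -2.0), (1.5, 0.0), (1.0, 2.0)]  # toy parameters, not real data
strong_theta, _ = fluid_eval(items, lambda i: 1, n_steps=3)
weak_theta, _ = fluid_eval(items, lambda i: 0, n_steps=3)
```

the returned `theta` is the 'ability' estimate; unlike a plain average it weights each test case by its difficulty and discrimination.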

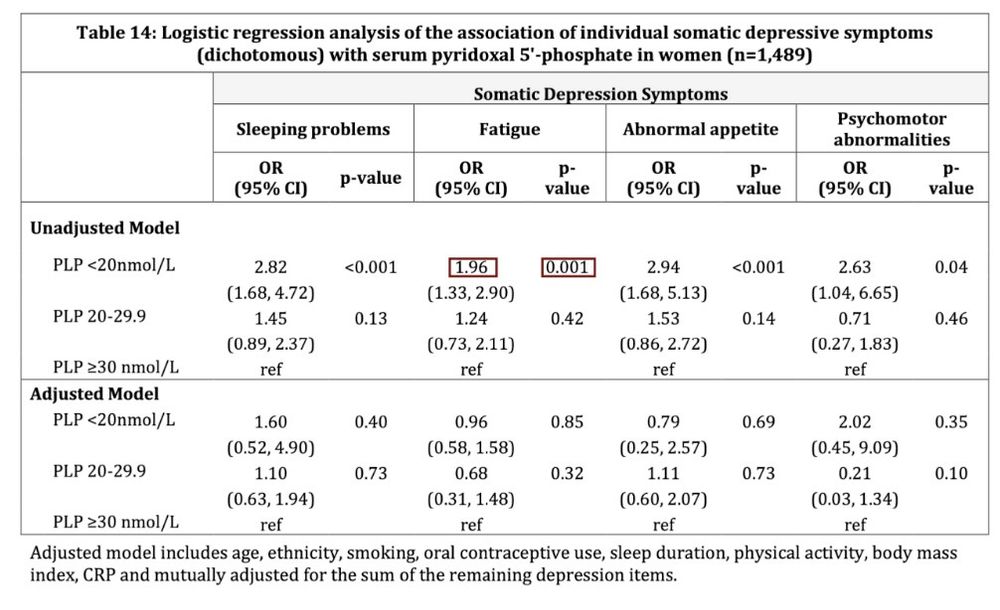

so instead of fuzzy matching a model-generated table against a gold reference table,

we define Pass/Fail tests like "the cell to the left of the cell containing 0.001 should contain 1.96"
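
here's roughly how a test like that could be written, assuming the model emits a pipe-delimited markdown table. `parse_markdown_table` and `left_neighbor_test` are hypothetical helpers, and exact string equality per cell is a simplification of "containing".

```python
from typing import List


def parse_markdown_table(md: str) -> List[List[str]]:
    """Parse a simple pipe-delimited markdown table into rows of stripped cell strings."""
    rows = []
    for line in md.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Skip the header separator row, e.g. |---|:---:|
        if all(c and set(c) <= set("-:") for c in cells):
            continue
        rows.append(cells)
    return rows


def left_neighbor_test(md: str, anchor: str, expected_left: str) -> bool:
    """Pass if some cell equal to `anchor` has `expected_left` immediately to its left."""
    for row in parse_markdown_table(md):
        for j, cell in enumerate(row):
            if cell == anchor and j > 0 and row[j - 1] == expected_left:
                return True
    return False


# Toy table built around the numbers in the example above.
table = """
| value | p |
|---|---|
| 1.96 | 0.001 |
| 2.58 | 0.010 |
"""
passed = left_neighbor_test(table, "0.001", "1.96")
```

the test only looks at one local relationship, so it doesn't care about column order elsewhere, extra whitespace, or how the rest of the table was rendered.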

recalling when we released same time as Llama 3.2 😆

huge kudos to Matt Deitke, Chris Clark & Ani Kembhavi for their leadership on this project!

@cvprconference.bsky.social

arxiv.org/abs/2506.08300

it's like "i found relevant content in <journal|conf|arxiv> paper" but the links provided all go to the publisher homepage instead of the actual paper lolol whyy 🤦

it seems the call for papers neurips.cc/Conferences/... says author list should be finalized by May 15th, but on OpenReview itself, author list needs to be finalized by May 11th

can pls clarify, thx! 🙏
