🐟our largest & best fully open model to date

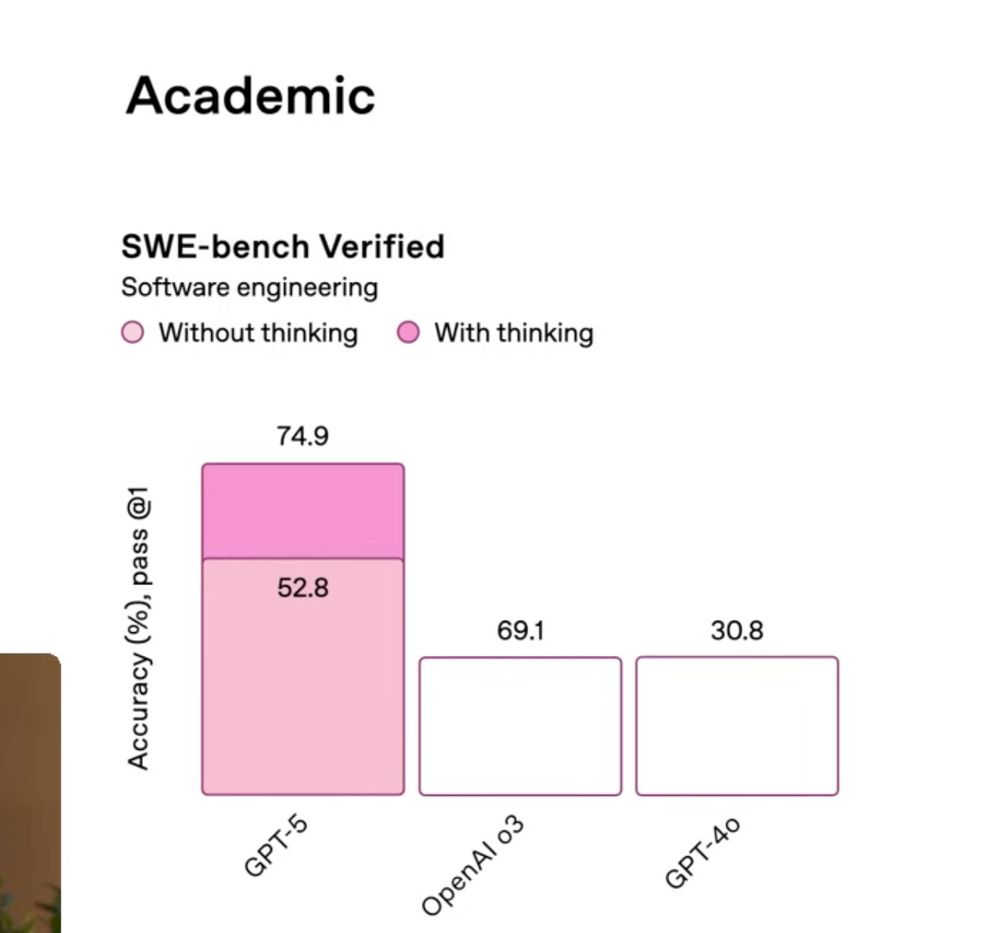

🐠right up there w similar size weights-only models from big companies on popular benchmarks

🐡but we used way less compute & all our data, ckpts, code, recipe are free & open

made a nice plot of our post-trained results!✌️

🐟interns own major parts of our model development, sometimes even leading whole projects

🐡we're committed to open science & actively help our interns publish their work

reach out if u wanna build open language models together 🤝

links 👇

small multimodal foundation language models + system for finetuning for important uses like agriculture, wildfire management, conservation & more 🌿

🔥training our VLM using RLVR with binary unit test rewards🔥

it's incredibly effective & unit test creation is easy to scale w synthetic data pipelines

check it out at olmocr.allen.ai
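
A minimal sketch of what a binary unit-test reward could look like, assuming each test is a pass/fail check on the model's text output. The `UnitTest` class, the helper checks (`contains`, `appears_before`), and both aggregation choices are hypothetical illustrations, not the olmOCR training code.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class UnitTest:
    """Hypothetical unit test: a name plus a binary pass/fail check on the output text."""
    name: str
    check: Callable[[str], bool]


def contains(text: str) -> Callable[[str], bool]:
    """Pass if `text` appears verbatim in the output."""
    return lambda out: text in out


def appears_before(first: str, second: str) -> Callable[[str], bool]:
    """Pass if both strings appear and `first` comes before `second` (reading order)."""
    return lambda out: first in out and second in out and out.index(first) < out.index(second)


def all_or_nothing_reward(output: str, tests: List[UnitTest]) -> float:
    """1.0 only if every unit test passes, else 0.0."""
    return 1.0 if all(t.check(output) for t in tests) else 0.0


def pass_rate_reward(output: str, tests: List[UnitTest]) -> float:
    """Fraction of binary unit tests passed (partial credit, still verifiable)."""
    if not tests:
        return 0.0
    return sum(t.check(output) for t in tests) / len(tests)


# Example tests for one hypothetical PDF page.
EXAMPLE_TESTS = [
    UnitTest("has_results_heading", contains("Results")),
    UnitTest("intro_before_methods", appears_before("Introduction", "Methods")),
    UnitTest("no_page_number", lambda out: "Page 3" not in out),
]
```

Each test stays strictly binary; the only design choice is how to aggregate them per document. The all-or-nothing variant is the sparsest signal, while the pass rate gives partial credit. Which one a given RLVR setup uses is an assumption here.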

findings from a large-scale survey of 800 researchers on how they use LMs in their research #colm2025

come chat w me about pretraining horror stories, data & evals, what we're cookin for next olmo, etc

made a 🔥 poster for thursday sess, come say hi

working w a cancer research center to analyze clinical data, but private data can't leave the center.

so the team built a tool that generates code for the cancer center to execute remotely; it was developed on synthetic data and has now been tested for realsies 🤩

🐟 select test cases

🐠 score LM on each test

🦈 aggregate scores to estimate perf

fluid benchmarking is simple:

🍣 find max informative test cases

🍥 estimate 'ability', not simple avg perf

why care? turn ur grey noisy benchmarks into red ones!
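
To make the two fluid steps concrete, here is a minimal computerized-adaptive-testing sketch. It assumes a two-parameter logistic (2PL) item response theory model whose per-item discrimination `a` and difficulty `b` were already fit on other LMs' results; the function names and the grid-search MAP estimator are illustrative choices, not the fluid benchmarking code.

```python
import math
from typing import Callable, Dict, List, Tuple

# Ability grid for the MAP search: theta in [-4, 4] with step 0.01.
GRID = [i / 100 for i in range(-400, 401)]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information of a 2PL item at ability theta: a^2 * p * (1 - p)."""
    p = sigmoid(a * (theta - b))
    return a * a * p * (1.0 - p)


def estimate_ability(responses: List[Tuple[float, float, int]]) -> float:
    """MAP ability under a standard-normal prior, given (a, b, correct) triples."""
    def log_posterior(theta: float) -> float:
        lp = -0.5 * theta * theta  # log N(0, 1) prior, up to a constant
        for a, b, y in responses:
            z = a * (theta - b)
            lp += log_sigmoid(z) if y else log_sigmoid(-z)
        return lp
    return max(GRID, key=log_posterior)


def fluid_eval(items: Dict[str, Tuple[float, float]],
               answer: Callable[[str], int], budget: int) -> float:
    """Adaptively pick the most informative unused item, score it, re-estimate ability."""
    theta = 0.0
    responses: List[Tuple[float, float, int]] = []
    remaining = dict(items)  # don't mutate the caller's item bank
    for _ in range(min(budget, len(items))):
        item_id = max(remaining, key=lambda k: item_information(theta, *remaining[k]))
        a, b = remaining.pop(item_id)
        y = answer(item_id)  # 1 if the LM gets this item right, else 0
        responses.append((a, b, y))
        theta = estimate_ability(responses)
    return theta
```

Because ability is estimated from *which* items were passed, weighted by their difficulty and discrimination, two models with the same raw accuracy on different item subsets can still land at different ability estimates.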

"evaluates on data it was trained on, relies on an external model and additional samples for its claimed performance gains, and artificially reduces the scores of compared models"

www.sri.inf.ethz.ch/blog/k2think

1. A small invite-only dinner for Interconnects AI (Ai2 event news later).

2. Various research chats and catchups.

Fill out the form below or email me if you're interested :) 🍁🇨🇦

Interest form: buff.ly/9nWBxZ9

🫡🫡🫡

@christos-c.bsky.social @carolynrose.bsky.social @tanmoy-chak.bsky.social @violetpeng.bsky.social

if you're frustrated by LM evals, not knowing if results are real or noise, it's useful to decompose sources of variance:

🐠is there enough meaningful spread (signal) among compared models

🐟do scores vary between intermediate checkpoints (noise)

We want – ⭐ low noise and high signal ⭐ – *both* low variance during training and a high spread of scores.
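
One simple way to put numbers on this decomposition, assuming signal is the relative spread of final scores across models (max minus min, divided by the mean) and noise is the relative standard deviation of one model's scores over its last few checkpoints. These exact definitions and function names are assumptions for illustration.

```python
import statistics
from typing import Sequence


def signal(model_scores: Sequence[float]) -> float:
    """Relative spread of scores across different models (higher = easier to separate)."""
    return (max(model_scores) - min(model_scores)) / statistics.mean(model_scores)


def noise(checkpoint_scores: Sequence[float]) -> float:
    """Relative std. dev. of one model's scores across its final intermediate checkpoints."""
    return statistics.stdev(checkpoint_scores) / statistics.mean(checkpoint_scores)


def signal_to_noise(model_scores: Sequence[float],
                    checkpoint_scores: Sequence[float]) -> float:
    """Higher is better: model differences stand out from checkpoint-to-checkpoint jitter."""
    return signal(model_scores) / noise(checkpoint_scores)
```

Comparing this ratio across benchmarks (or benchmark subsets) is one way to favor the ones with both high spread and low training-time variance.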

very nice results over nemotron synth, which we've generally been impressed by

rephrasing the web (arxiv.org/abs/2401.16380) seems very powerful & this is a good demonstration of how to push it further

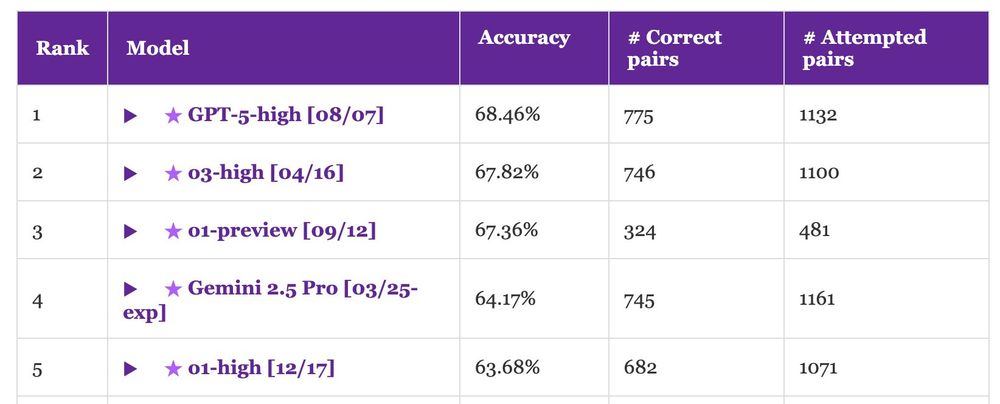

That said, this is a tiny improvement (~1%) over o1-preview, which was released almost one year ago. Have long-context models hit a wall?

Accuracy of human readers is >97%... Long way to go!

our olmOCR lead Jake Poznanski shipped a new model fixing lotta issues + some more optimization for better throughput

have fun converting PDFs!

Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦

We do this at unprecedented scale and in real time: finding matching text between model outputs and 4 trillion training tokens within seconds. ✨
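
As a toy illustration of what "finding matching text" means, the sketch below finds maximal spans of a model output that appear verbatim in a tiny corpus, using an in-memory set of whitespace-token n-grams. The function names and parameters are hypothetical; this would not scale anywhere near 4 trillion tokens and is not the OLMoTrace implementation.

```python
from typing import List, Set, Tuple


def build_ngram_index(corpus_docs: List[str], max_n: int) -> Set[Tuple[str, ...]]:
    """Collect every whitespace-token n-gram (lengths 1..max_n) occurring in the corpus."""
    index: Set[Tuple[str, ...]] = set()
    for doc in corpus_docs:
        toks = doc.split()
        for n in range(1, max_n + 1):
            for i in range(len(toks) - n + 1):
                index.add(tuple(toks[i:i + n]))
    return index


def maximal_matching_spans(output: str, index: Set[Tuple[str, ...]],
                           max_n: int, min_len: int = 3) -> List[Tuple[int, int]]:
    """Return (start, end) token spans of `output` found verbatim in the corpus,
    dropping spans contained in a longer match."""
    toks = output.split()
    spans = []
    for i in range(len(toks)):
        # Extend the match from position i; prefixes of indexed n-grams are also
        # indexed, so we can stop at the first miss.
        j = i
        while j < len(toks) and j - i < max_n and tuple(toks[i:j + 1]) in index:
            j += 1
        if j - i >= min_len:
            spans.append((i, j))
    maximal: List[Tuple[int, int]] = []
    for s, e in spans:  # spans are sorted by start, so only earlier spans can contain later ones
        if not any(s2 <= s and e <= e2 for s2, e2 in maximal):
            maximal.append((s, e))
    return maximal
```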

Jul 30, 11:00-12:30 at Hall 4X, board 424.

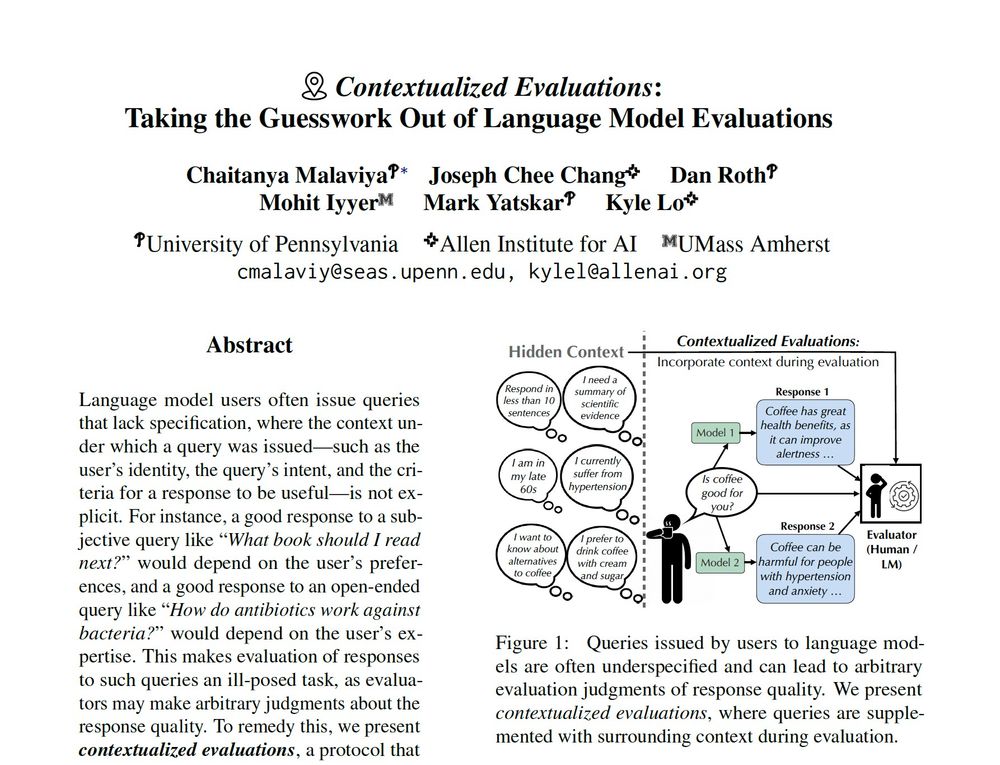

Benchmarks like Chatbot Arena contain underspecified queries, which can lead to arbitrary eval judgments. What happens if we provide evaluators with context (e.g who's the user, what's their intent) when judging LM outputs? 🧵↓

Jul 30, 11:00-12:30 at Hall 4X, board 424.