Viet Anh Khoa Tran

@ktran.de

PhD student on Dendritic Learning/NeuroAI with Willem Wybo, at Emre Neftci's lab (@fz-juelich.de).

ktran.de

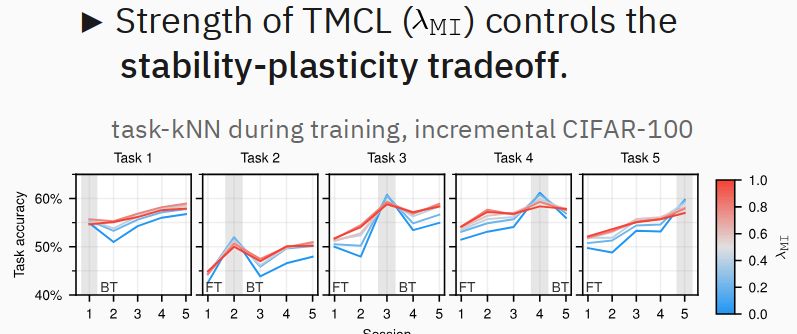

Furthermore, we can dynamically adjust the stability-plasticity trade-off by adapting the strength of the modulation invariance term. (4/6)

June 10, 2025 at 1:17 PM

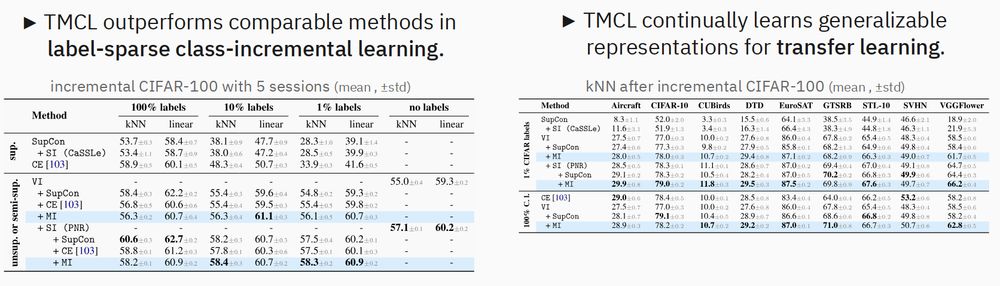

Key finding: With only 1% labels, our method outperforms comparable continual learning algorithms both on the continual task and when transferred to other tasks.

Therefore, we continually learn generalizable representations, unlike conventional, class-collapsing methods (e.g. Cross-Entropy). (3/6)

June 10, 2025 at 1:17 PM

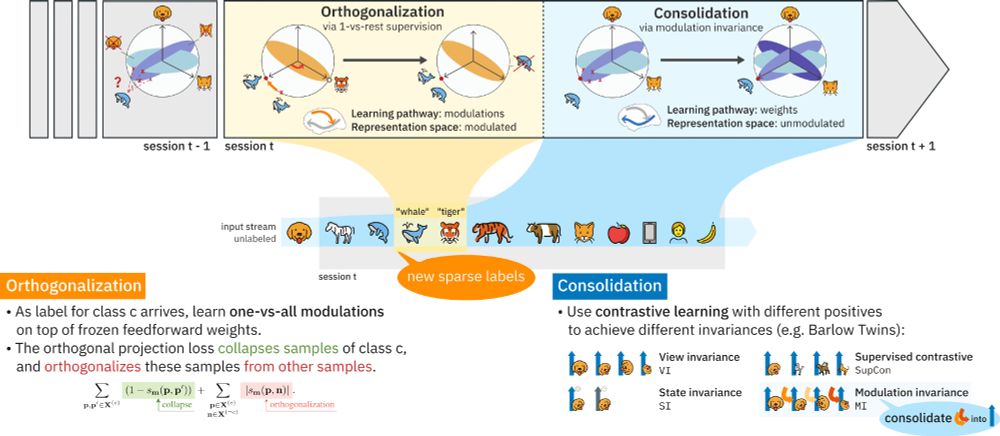

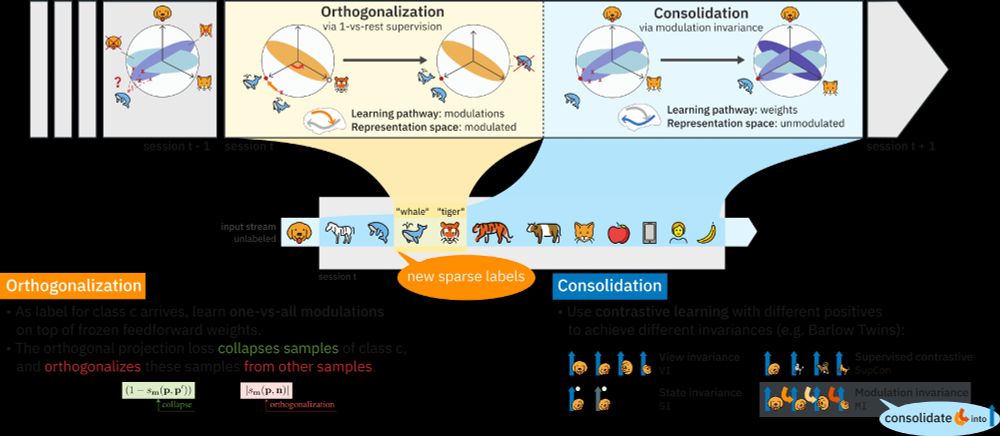

Feedforward weights learn via view-invariant self-supervised learning, mimicking predictive coding. Top-down class modulations, informed by new labels, orthogonalize same-class representations. These are then consolidated into the feedforward pathway through modulation invariance. (2/6)

June 10, 2025 at 1:17 PM
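
The mechanism in (2/6), together with the adjustable strength mentioned in (4/6), can be sketched roughly as a two-term objective: one term pulls together the embeddings of two augmented views, the other pulls an unmodulated embedding toward its class-modulated counterpart. The snippet below is only a toy illustration under loud assumptions: the function names, the cosine-distance loss, and the `strength` parameter are hypothetical stand-ins and are not taken from the preprint, which may use a different loss and architecture.

```python
import numpy as np


def normalize(z, eps=1e-12):
    """Scale each row of z to (approximately) unit length."""
    return z / (np.linalg.norm(z, axis=1, keepdims=True) + eps)


def invariance_loss(z_a, z_b):
    """Mean (1 - cosine similarity) between paired rows of z_a and z_b.

    Hypothetical stand-in for whatever invariance loss the method uses.
    """
    cos = np.sum(normalize(z_a) * normalize(z_b), axis=1)
    return float(np.mean(1.0 - cos))


def combined_loss(z_view1, z_view2, z_unmod, z_mod, strength):
    """View-invariance term plus a weighted modulation-invariance term.

    `strength` is an assumed scalar weight; raising or lowering it is one
    way to picture the adjustable stability-plasticity trade-off.
    """
    view_term = invariance_loss(z_view1, z_view2)
    mod_term = invariance_loss(z_unmod, z_mod)
    return view_term + strength * mod_term


# Toy usage with random embeddings (8 samples, 16 dimensions).
rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))
z2 = z1 + 0.1 * rng.normal(size=(8, 16))   # second "view" of the same inputs
z_mod = z1 * rng.uniform(0.5, 1.5, size=(1, 16))  # assumed gain-style modulation
loss = combined_loss(z1, z2, z1, z_mod, strength=0.5)
```

In this toy version, setting `strength` to zero reduces the objective to pure view invariance, while larger values weight agreement with the modulated embeddings more heavily.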

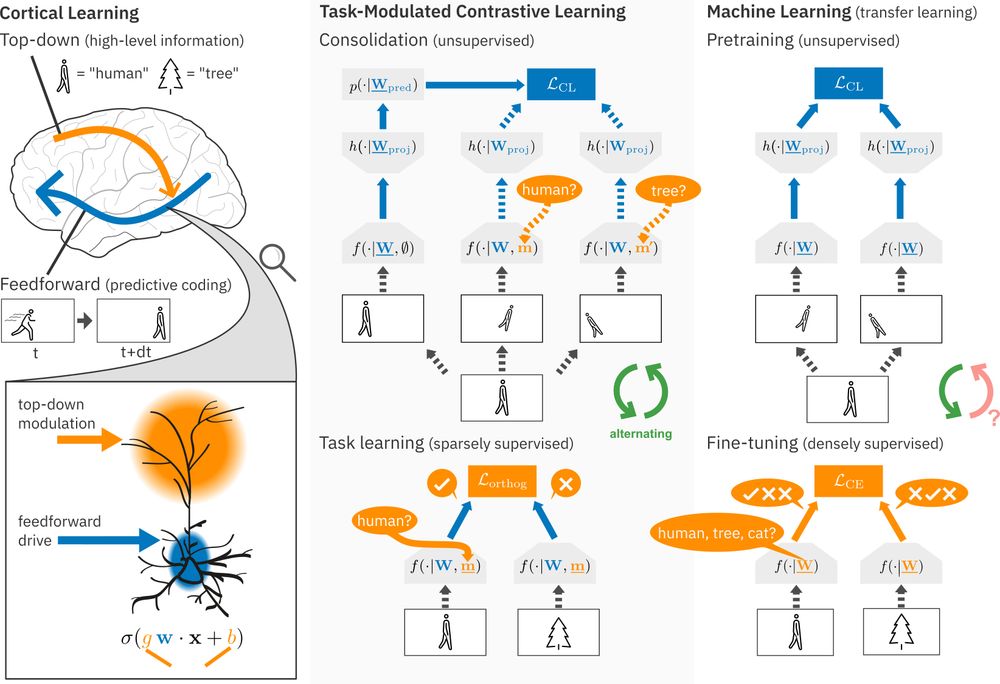

New #NeuroAI preprint on #ContinualLearning!

Continual learning methods struggle in mostly unsupervised environments with sparse labels (e.g. parents telling their child the object is an 'apple').

We propose that in the cortex, predictive coding of high-level top-down modulations solves this! (1/6)

June 10, 2025 at 1:17 PM

Feedforward weights learn via view-invariant self-supervised learning, mimicking predictive coding. Top-down class modulations, informed by new labels, orthogonalize same-class representations. These are then consolidated into the feedforward pathway through modulation invariance. (2/6)

June 10, 2025 at 1:13 PM
