Kevin Feng

@kjfeng.me

PhD student at the University of Washington in social computing + human-AI interaction @socialfutureslab.bsky.social. 🌐 kjfeng.me

Special thanks to @katygb.bsky.social and @sethlazar.org for organizing a fantastic @knightcolumbia essay series on AI and Democratic freedoms, and other authors of the essay series for valuable discussion + feedback. Check out the other essays here! knightcolumbia.org/research/art....

Artificial Intelligence and Democratic Freedoms

knightcolumbia.org

August 1, 2025 at 3:43 PM

Finally, autonomy levels can help with agentic safety evaluations and setting thresholds for autonomy risks in safety frameworks. How do we know what level an agent is operating at, and what appropriate risk mitigations should be put in place? Read our paper for more!

August 1, 2025 at 3:42 PM

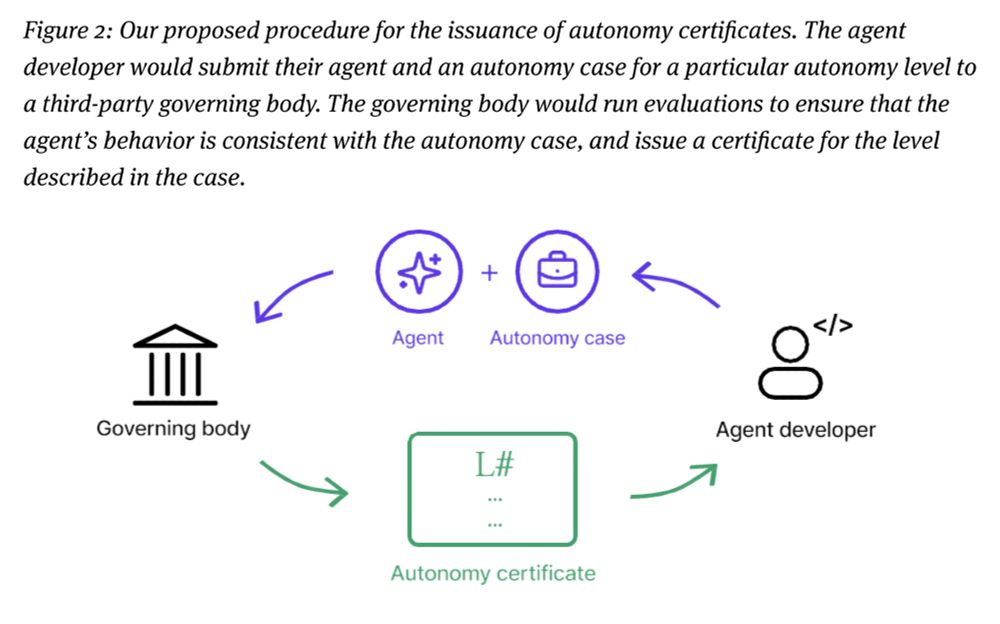

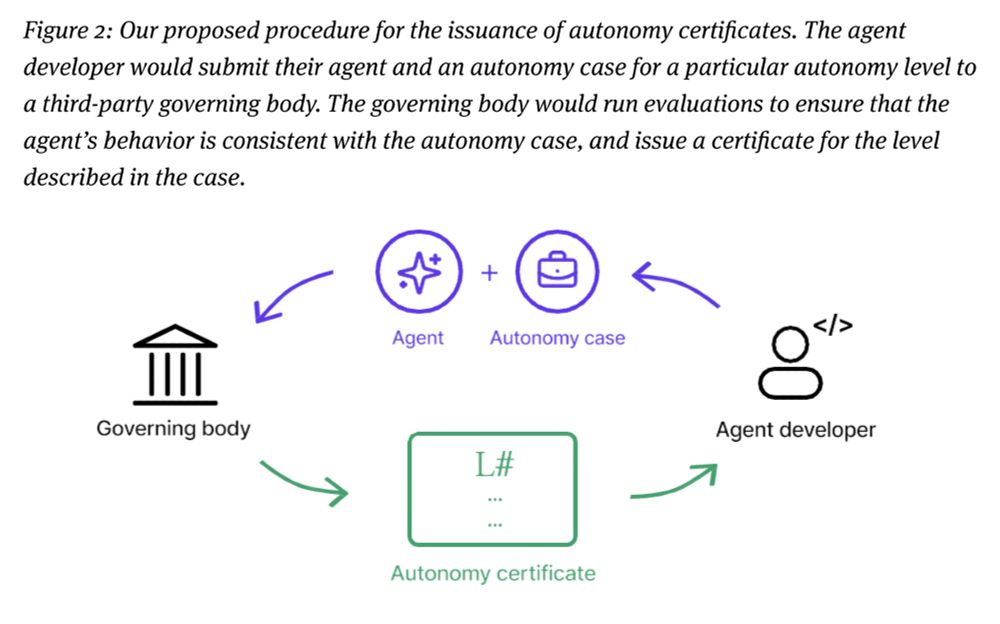

We also introduce **agent autonomy certificates** to govern agent behavior in single- and multi-agent settings. Certificates constrain the space of permitted actions and help ensure effective and safe collaborative behaviors in agentic systems.

August 1, 2025 at 3:42 PM
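
To make the certificate idea concrete, here is a minimal sketch of how a certificate might constrain permitted actions. The thread does not give a schema, so the class name, the fields (`agent_id`, `max_level`, `permitted_actions`), and the `permits` check are all illustrative assumptions, not the paper's definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyCertificate:
    """Hypothetical certificate; every field here is an assumption."""
    agent_id: str
    max_level: int                 # highest autonomy level allowed, 1..5
    permitted_actions: frozenset   # names of actions the agent may take

    def permits(self, action: str, level: int) -> bool:
        # Allowed only if the action is listed and the requested
        # operating level does not exceed the certified level.
        return action in self.permitted_actions and 1 <= level <= self.max_level


cert = AutonomyCertificate(
    agent_id="research-agent",
    max_level=3,
    permitted_actions=frozenset({"search_web", "draft_report"}),
)
```

In a multi-agent setting, one agent could check another's certificate with `cert.permits(...)` before delegating work to it; that usage is also an assumption.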

Why have this framework at all?

We argue that agent autonomy can be a deliberate design decision, independent of the agent's capability or environment. This framework offers a practical guide for designing human-agent & agent-agent collaboration for real-world deployments.

August 1, 2025 at 3:42 PM

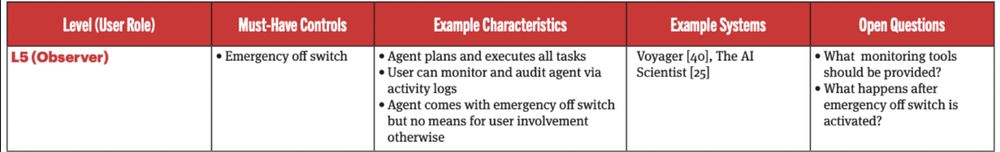

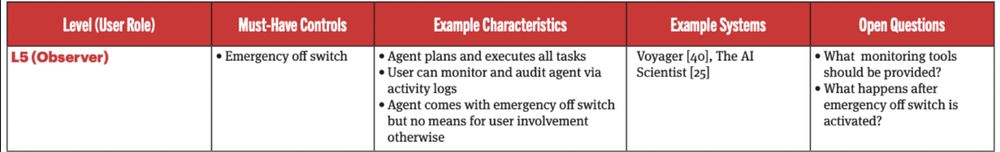

Level 5: user as an observer.

The agent autonomously operates over long time horizons and does not seek user involvement at all. The user passively monitors the agent via activity logs and has access to an emergency off switch.

Example: Sakana AI's AI Scientist.

August 1, 2025 at 3:42 PM

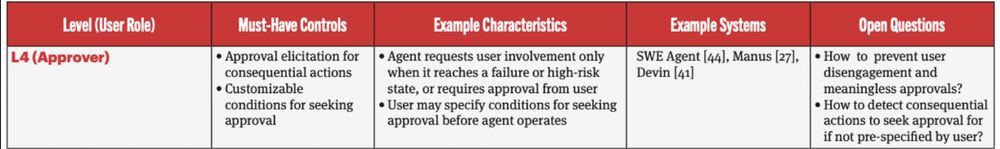

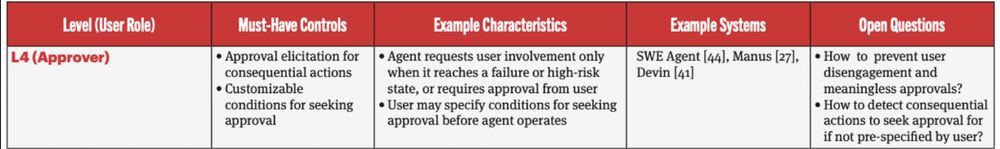

Level 4: user as an approver.

The agent only requests user involvement when it needs approval for a high-risk action (e.g., writing to a database) or when it fails and needs user assistance.

Example: most coding agents (Cursor, Devin, GH Copilot Agent, etc.).

August 1, 2025 at 3:41 PM

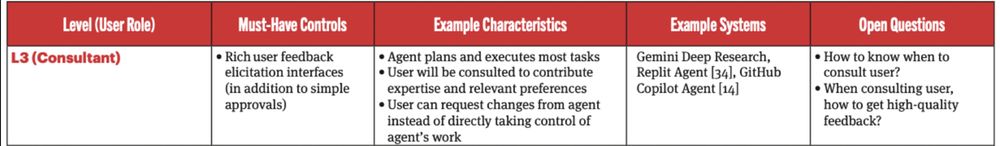

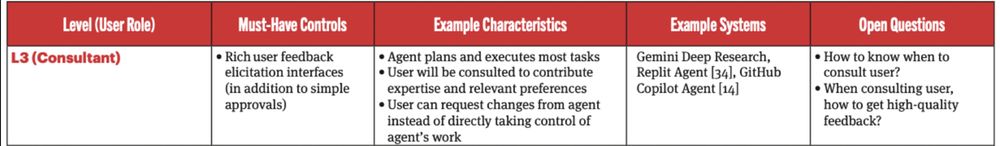
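
The Level 4 pattern above (ask only for high-risk actions, or when the agent fails) might look roughly like this. It is a sketch under assumptions: `HIGH_RISK_ACTIONS`, `run_action`, and the `ask_user` callback are hypothetical names, not how any of the coding agents mentioned actually work.

```python
from typing import Callable

# Assumed risk list; the thread gives database writes as an example.
HIGH_RISK_ACTIONS = {"write_database"}


def run_action(
    action: str,
    execute: Callable[[], str],
    ask_user: Callable[[str], bool],
) -> str:
    """Run an action, pausing for approval only when it is high-risk."""
    if action in HIGH_RISK_ACTIONS and not ask_user(f"Approve '{action}'?"):
        return "blocked: user declined"
    try:
        return execute()
    except Exception as err:
        # On failure, the agent hands control back to the user for help.
        return f"needs user assistance: {err}"
```

Low-risk actions never reach `ask_user`, which is what separates the approver role from the more hands-on roles at lower levels.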

Level 3: user as a consultant.

The agent takes the lead in task planning and execution, but actively consults the user to elicit rich preferences and feedback. Unlike L1 & L2, the user can no longer directly control the agent's workflow.

Example: deep research systems.

August 1, 2025 at 3:41 PM

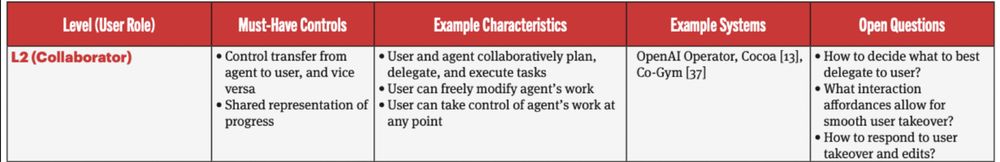

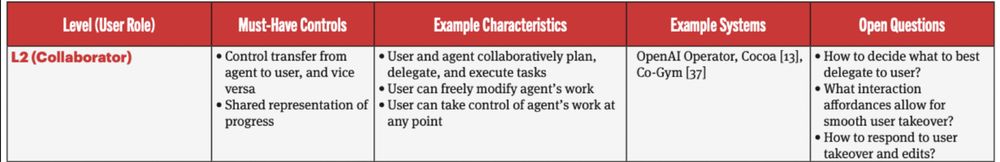

Level 2: user as a collaborator.

The user and the agent collaboratively plan and execute tasks, handing off information to each other and leveraging shared environments and representations to create common ground.

Example: Cocoa (arxiv.org/abs/2412.10999).

August 1, 2025 at 3:41 PM

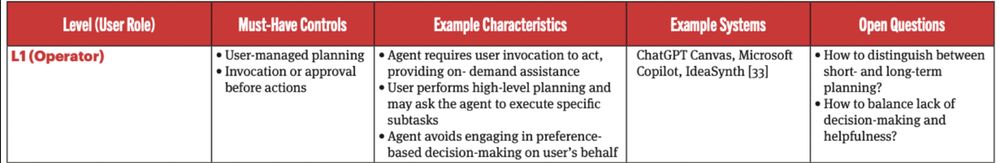

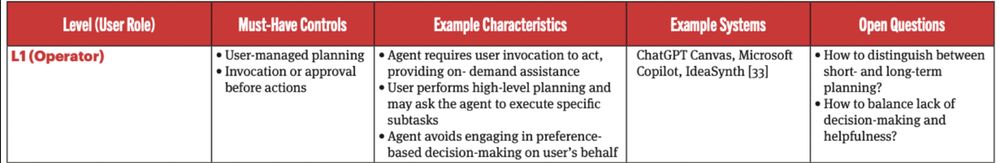

Level 1: user as an operator.

The user is in charge of high-level planning to steer the agent. The agent acts when directed, providing on-demand assistance.

Example: your average "copilot" that drafts your emails when you ask it to.

August 1, 2025 at 3:40 PM
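
For anyone who wants the five levels in one place, they can be sketched as an ordered enum keyed by the user's role. The enum and helper names are illustrative assumptions. The helper encodes the thread's note that direct control of the agent's workflow stops after L1 and L2; treating that as holding for L4 and L5 too is an inference, not something the thread states.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """User roles from the thread, ordered by increasing agent autonomy."""
    OPERATOR = 1      # user steers, agent acts when directed
    COLLABORATOR = 2  # user and agent plan and execute together
    CONSULTANT = 3    # agent leads, consults the user for feedback
    APPROVER = 4      # agent asks only for high-risk approvals or on failure
    OBSERVER = 5      # user monitors logs and holds an off switch


def user_controls_workflow(level: AutonomyLevel) -> bool:
    # Assumption: direct workflow control is available only at L1 and L2.
    return level <= AutonomyLevel.COLLABORATOR
```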

Web: knightcolumbia.org/content/leve....

arXiv: arxiv.org/abs/2506.12469.

Co-authored w/ David McDonald and @axz.bsky.social.

Summary thread below.

Levels of Autonomy for AI Agents

knightcolumbia.org

August 1, 2025 at 3:40 PM

Here are links to the paper:

Web: knightcolumbia.org/content/leve....

arXiv: arxiv.org/abs/2506.12469.

Co-authored w/ David McDonald and @axz.bsky.social.

Levels of Autonomy for AI Agents

knightcolumbia.org

August 1, 2025 at 3:35 PM

Special thanks to @katygb.bsky.social and @sethlazar.org for organizing a fantastic @knightcolumbia.org essay series on AI and Democratic freedoms, and other authors of the essay series for valuable discussion + feedback. Check out the other essays here! knightcolumbia.org/research/art....

Artificial Intelligence and Democratic Freedoms

knightcolumbia.org

August 1, 2025 at 3:31 PM

Finally, autonomy levels can help with agentic safety evaluations and setting thresholds for autonomy risks in safety frameworks. How do we know what level an agent is operating at, and what appropriate risk mitigations should be put in place? Read our paper for more!

August 1, 2025 at 3:31 PM

We also introduce **agent autonomy certificates** to govern agent behavior in single- and multi-agent settings. Certificates constrain the space of permitted actions and help ensure effective and safe collaborative behaviors in agentic systems.

August 1, 2025 at 3:31 PM

Why have this framework at all?

We argue that agent autonomy can be a deliberate design decision, independent of the agent's capability or environment. This framework offers a practical guide for designing human-agent & agent-agent collaboration for real-world deployments.

August 1, 2025 at 3:30 PM

Level 5: user as an observer.

The agent autonomously operates over long time horizons and does not seek user involvement at all. The user passively monitors the agent via activity logs and has access to an emergency off switch.

Example: Sakana AI's AI Scientist.

August 1, 2025 at 3:30 PM

Level 4: user as an approver.

The agent only requests user involvement when it needs approval for a high-risk action (e.g., writing to a database) or when it fails and needs user assistance.

Example: most coding agents (Cursor, Devin, GH Copilot Agent, etc.).

August 1, 2025 at 3:30 PM

Level 3: user as a consultant.

The agent takes the lead in task planning and execution, but actively consults the user to elicit rich preferences and feedback. Unlike L1 & L2, the user can no longer directly control the agent's workflow.

Example: deep research systems.

August 1, 2025 at 3:29 PM

Level 2: user as a collaborator.

The user and the agent collaboratively plan and execute tasks, handing off information to each other and leveraging shared environments and representations to create common ground.

Example: Cocoa (arxiv.org/abs/2412.10999).

August 1, 2025 at 3:29 PM

Level 1: user as an operator.

The user is in charge of high-level planning to steer the agent. The agent acts when directed, providing on-demand assistance.

Example: your average "copilot" that drafts your emails when you ask it to.

August 1, 2025 at 3:29 PM

Hi Dave, this is super cool! I'm currently working on a research prototype that brings domain experts together to draft policies for AI behavior and would love to chat more, esp about how I can integrate something like this

August 1, 2025 at 12:10 AM
