Kevin Feng

@kjfeng.me

PhD student at the University of Washington in social computing + human-AI interaction @socialfutureslab.bsky.social. 🌐 kjfeng.me

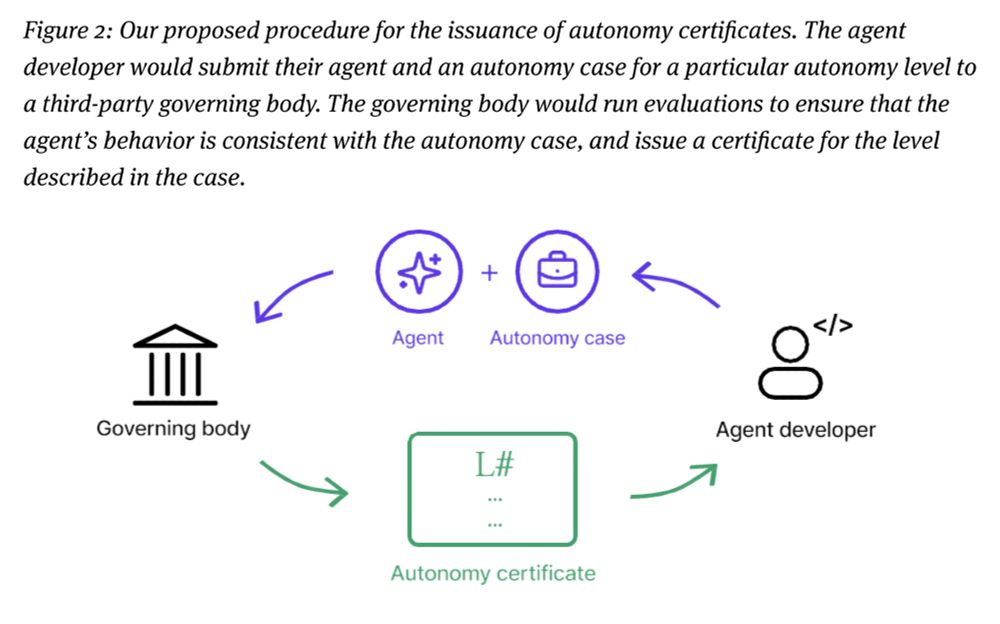

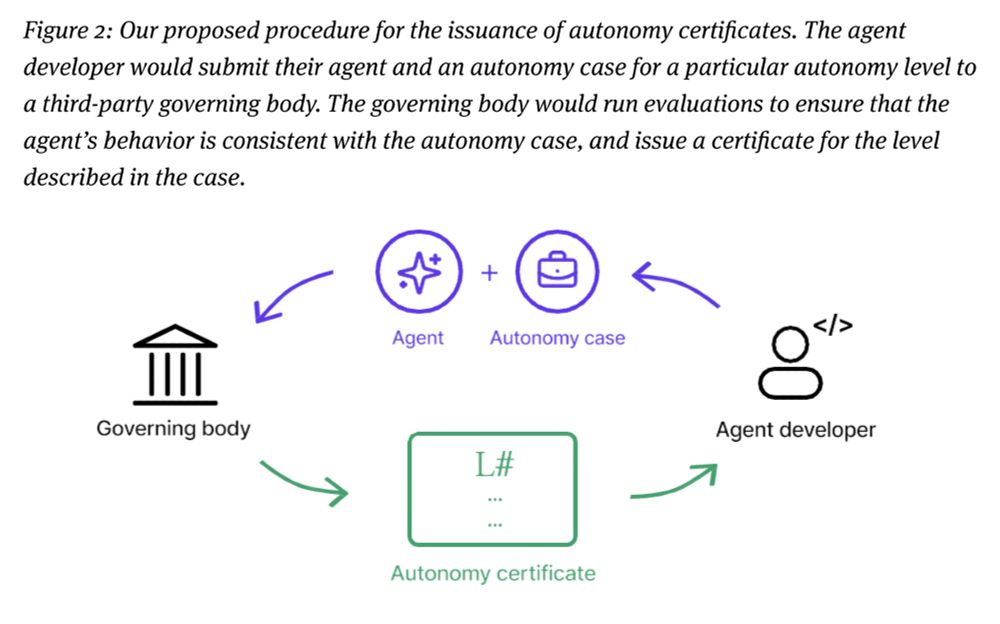

We also introduce **agent autonomy certificates** to govern agent behavior in single- and multi-agent settings. Certificates constrain the space of permitted actions and help ensure effective and safe collaborative behaviors in agentic systems.

August 1, 2025 at 3:42 PM

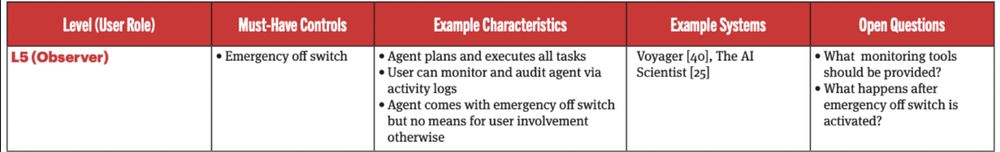

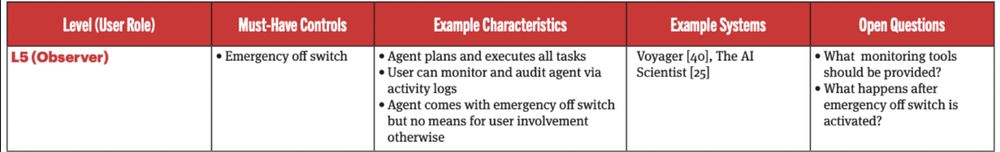

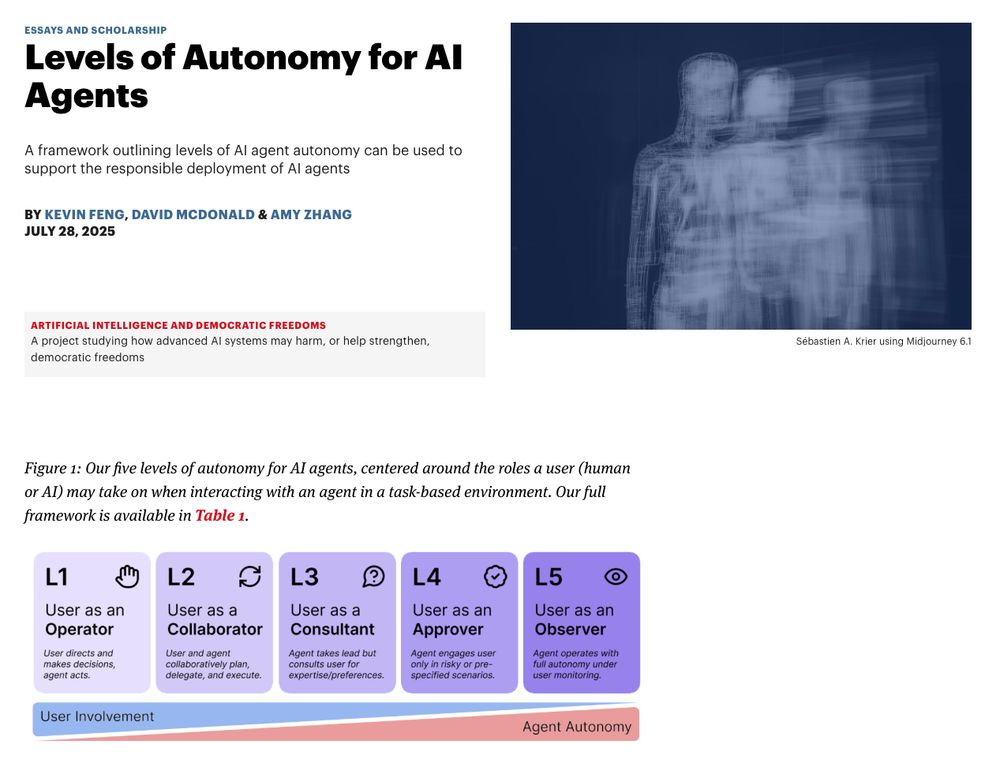

Level 5: user as an observer.

The agent autonomously operates over long time horizons and does not seek user involvement at all. The user passively monitors the agent via activity logs and has access to an emergency off switch.

Example: Sakana AI's AI Scientist.

August 1, 2025 at 3:42 PM

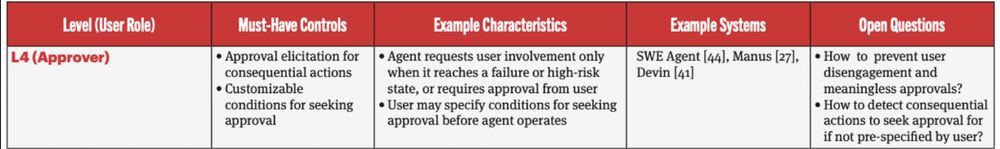

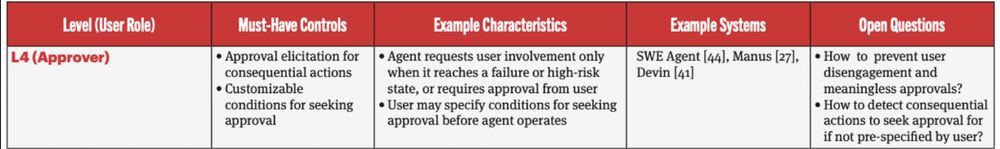

Level 4: user as an approver.

The agent only requests user involvement when it needs approval for a high-risk action (e.g., writing to a database) or when it fails and needs user assistance.

Example: most coding agents (Cursor, Devin, GH Copilot Agent, etc.).

August 1, 2025 at 3:41 PM
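As a rough illustration of the Level 4 "approver" pattern described in this post, here is a minimal Python sketch. It is not taken from the paper: the `AutonomyLevel` enum simply mirrors the five user roles named in the thread, and `Action`, `needs_user_approval`, `run`, and the `high_risk` flag are hypothetical names chosen for this example. The "agent fails and needs user assistance" path is not modeled.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class AutonomyLevel(IntEnum):
    """User roles from the thread, ordered by increasing agent autonomy."""
    OPERATOR = 1
    COLLABORATOR = 2
    CONSULTANT = 3
    APPROVER = 4
    OBSERVER = 5


@dataclass
class Action:
    """Hypothetical action record; `high_risk` is an assumed flag."""
    name: str
    high_risk: bool = False


def needs_user_approval(level: AutonomyLevel, action: Action) -> bool:
    # Assumed rule: at Level 4, only high-risk actions pause for the user.
    return level == AutonomyLevel.APPROVER and action.high_risk


def run(action: Action, level: AutonomyLevel,
        approve: Callable[[Action], bool]) -> str:
    # Ask the user only when the rule above requires it.
    if needs_user_approval(level, action) and not approve(action):
        return f"blocked: {action.name}"
    return f"executed: {action.name}"
```

In this sketch, a low-risk action such as `Action("read_file")` runs without involving the user, while `Action("write_db", high_risk=True)` runs only if the `approve` callback returns `True`.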

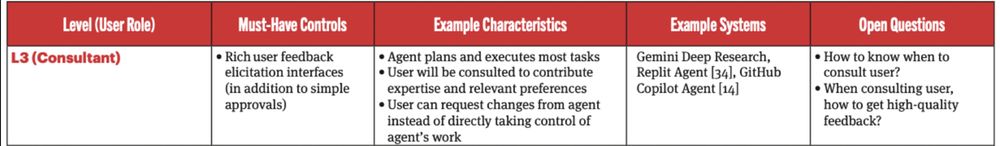

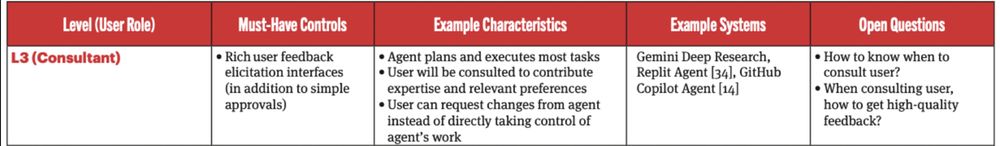

Level 3: user as a consultant.

The agent takes the lead in task planning and execution, but actively consults the user to elicit rich preferences and feedback. Unlike L1 & L2, the user can no longer directly control the agent's workflow.

Example: deep research systems.

August 1, 2025 at 3:41 PM

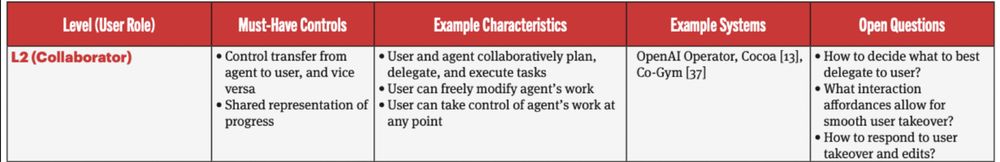

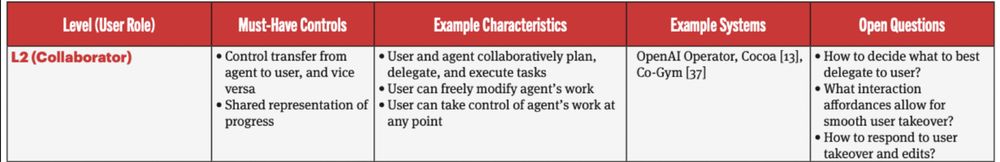

Level 2: user as a collaborator.

The user and the agent collaboratively plan and execute tasks, handing off information to each other and leveraging shared environments and representations to create common ground.

Example: Cocoa (arxiv.org/abs/2412.10999).

August 1, 2025 at 3:41 PM

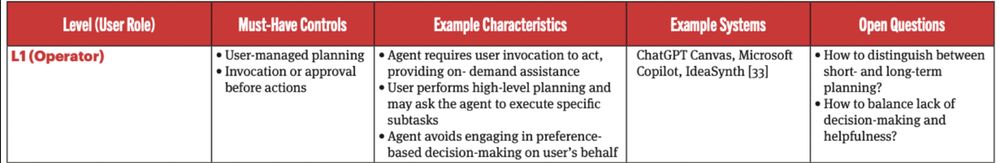

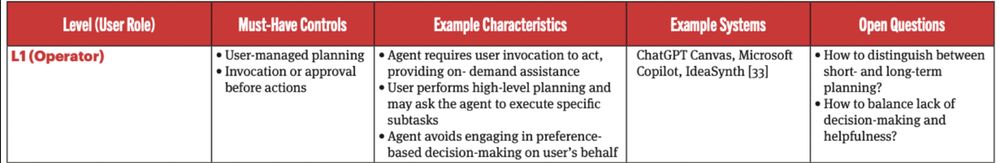

Level 1: user as an operator.

The user is in charge of high-level planning to steer the agent. The agent acts when directed, providing on-demand assistance.

Example: your average "copilot" that drafts your emails when you ask it to.

August 1, 2025 at 3:40 PM

📢 New paper, published by @knightcolumbia.org

We often talk about AI agents augmenting vs. automating work, but what exactly can different configurations of human-agent interaction look like? We introduce a 5-level framework for AI agent autonomy to unpack this.

🧵👇

August 1, 2025 at 3:28 PM

Excited to kick off the first workshop on Sociotechnical AI Governance at #chi2025 (STAIG@CHI'25) with a morning poster session in a full house! Looking forward to more posters, discussions, and our keynote in the afternoon. Follow our schedule at chi-staig.github.io!

April 27, 2025 at 3:07 AM

🤖LLMs are being integrated everywhere, but how do we ensure they're delivering meaningful user experiences?

In our #chi2025 paper, we empower designers to think about this via 🎨designerly adaptation🎨 of LLMs and built a Figma widget to help!

📜 arxiv.org/abs/2401.09051

🧵👇

April 25, 2025 at 3:02 PM

The First Workshop on Sociotechnical AI Governance at CHI 2025 (STAIG@CHI’25) is less than a week away! We’re super excited to hold a panel with some amazing speakers to discuss why a sociotechnical approach to AI governance is so important. Learn more + see our full schedule: chi-staig.github.io

April 21, 2025 at 4:55 PM

To make our paper more accessible not only to researchers but also to devs, journalists, etc., we have an FAQ in the Appendix! Be sure to check that out, and if you have more questions, please don’t hesitate to reach out.

January 16, 2025 at 3:26 PM

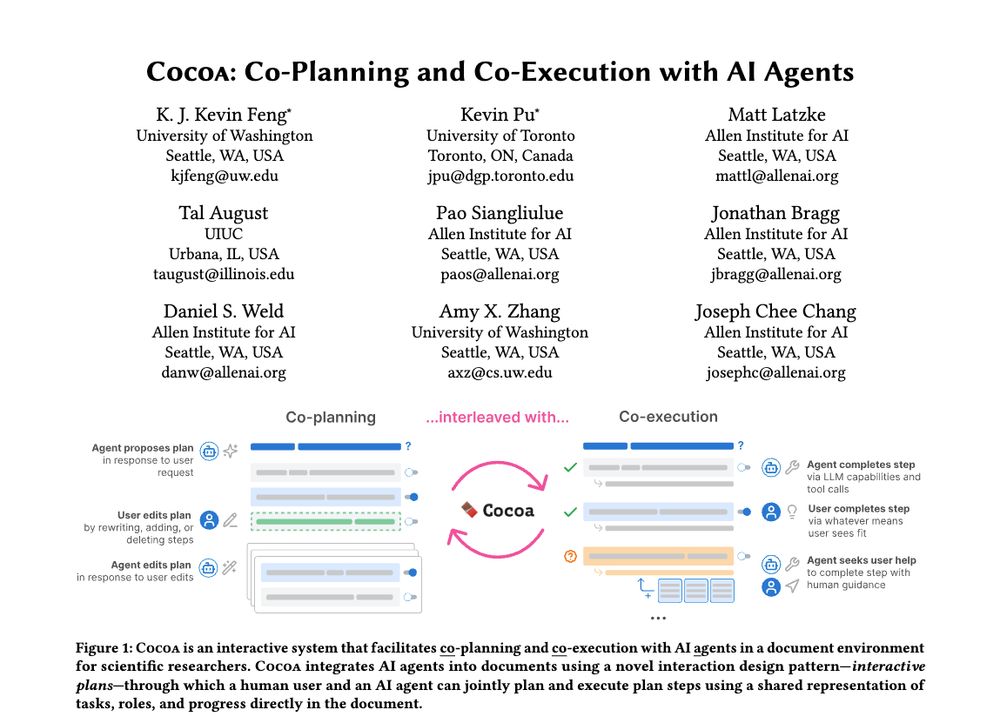

🖥️Interactive UI

Cocoa embeds AI agents into docs with a new design pattern—interactive plans. They’re “computational notebooks” for humans + agents, breaking tasks down into interactive steps. We designed Cocoa for scientific research, an important & challenging domain for AI.

January 16, 2025 at 3:24 PM

🤔Giving complex tasks to AI agents is easy—getting them to do exactly what you want isn’t. How can human-AI collaboration give us more reliable & steerable agents?

🍫Introducing Cocoa, our new interaction paradigm for balancing human & AI agency in complex human-AI workflows. 🧵

January 16, 2025 at 3:19 PM

📢📢 Introducing the 1st workshop on Sociotechnical AI Governance at CHI’25 (STAIG@CHI'25)! Join us to build a community to tackle AI governance through a sociotechnical lens and drive actionable strategies.

🌐 Website: chi-staig.github.io

🗓️ Submit your work by: Feb 17, 2025

December 23, 2024 at 6:47 PM

Unbeatable pastries in Montreal's Little Italy @ Alati-Caserta

July 15, 2023 at 10:36 PM

Turns out custom feeds were around us this whole time!

July 8, 2023 at 8:39 PM

Found authentic Guangdong-style chang fen (rice noodle rolls) in downtown Montreal! First time having these in 6 years, sooo good and brings back childhood memories 😋

June 18, 2023 at 1:16 AM

But—there's a catch. Enabling provenance adds another dimension of credibility: how valid is the actual provenance info? We tested different states of provenance credibility and found that they had a significant impact on users' credibility ratings of *the content itself*. 4/n

May 31, 2023 at 8:43 PM
