kennypeng.me

Come chat about "Sparse Autoencoders for Hypothesis Generation" (west-421), and "Correlated Errors in LLMs" (east-1102)!

Short thread ⬇️

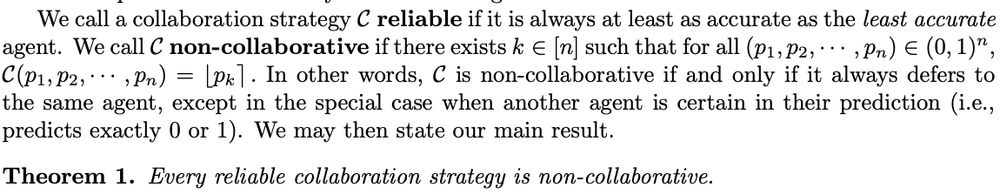

For the theory, see arxiv.org/abs/2312.09841 (5/7)

arxiv.org/abs/2506.07962

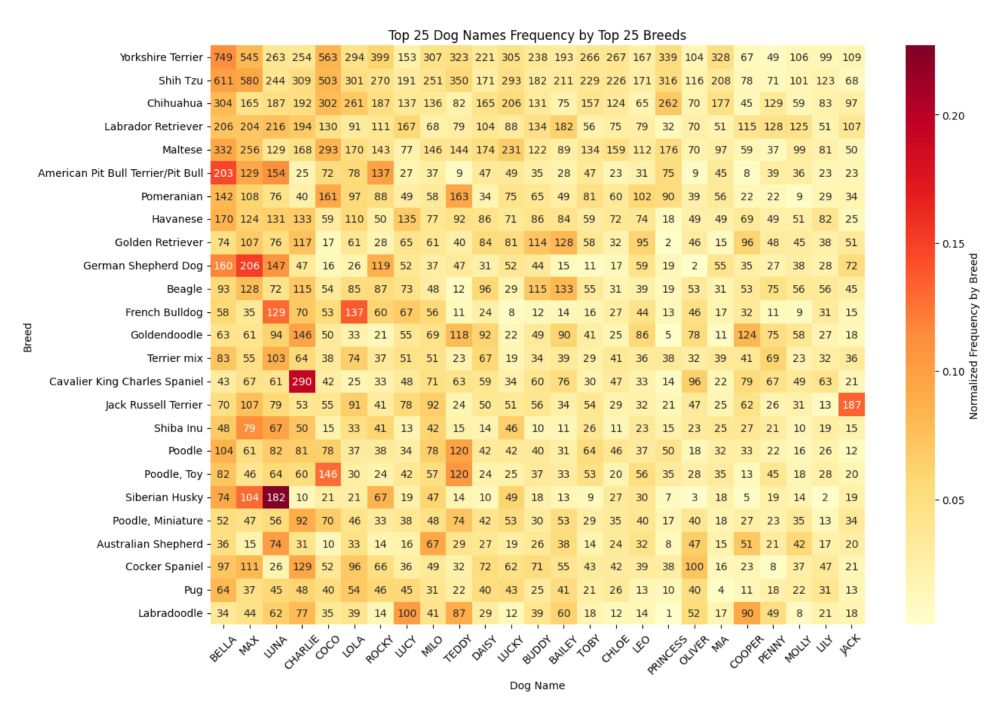

We also briefly explored a “dog park simulator.”

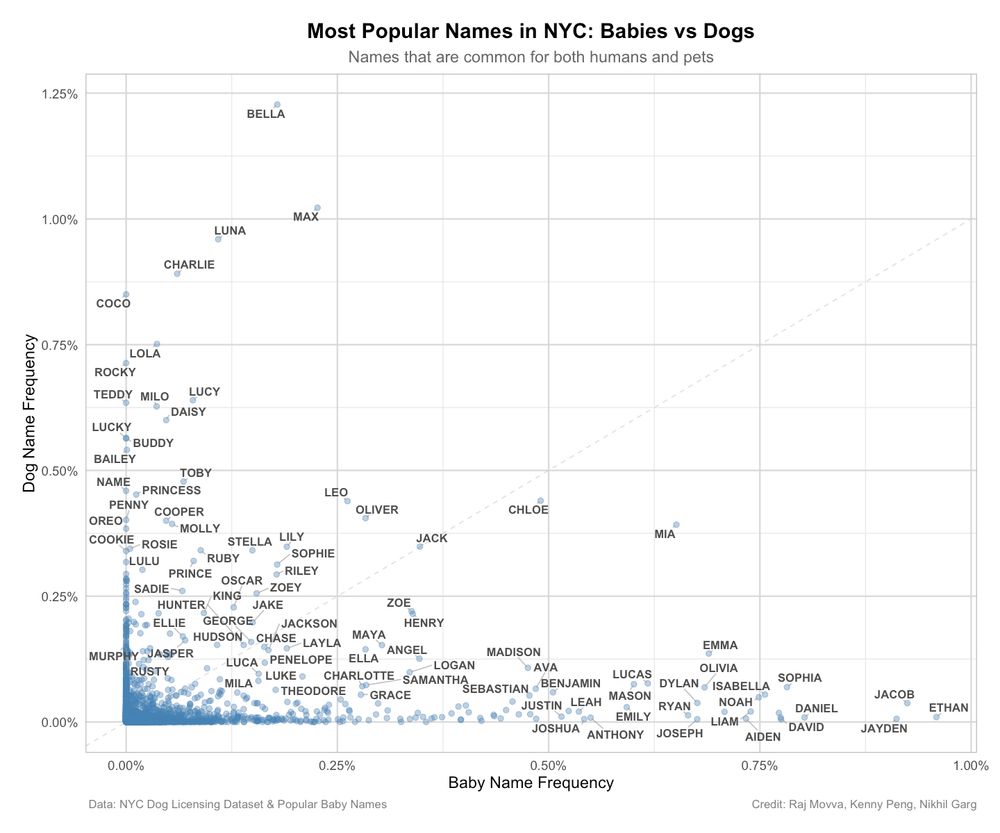

Luna is the only popular name that is becoming more common among dogs AND babies.

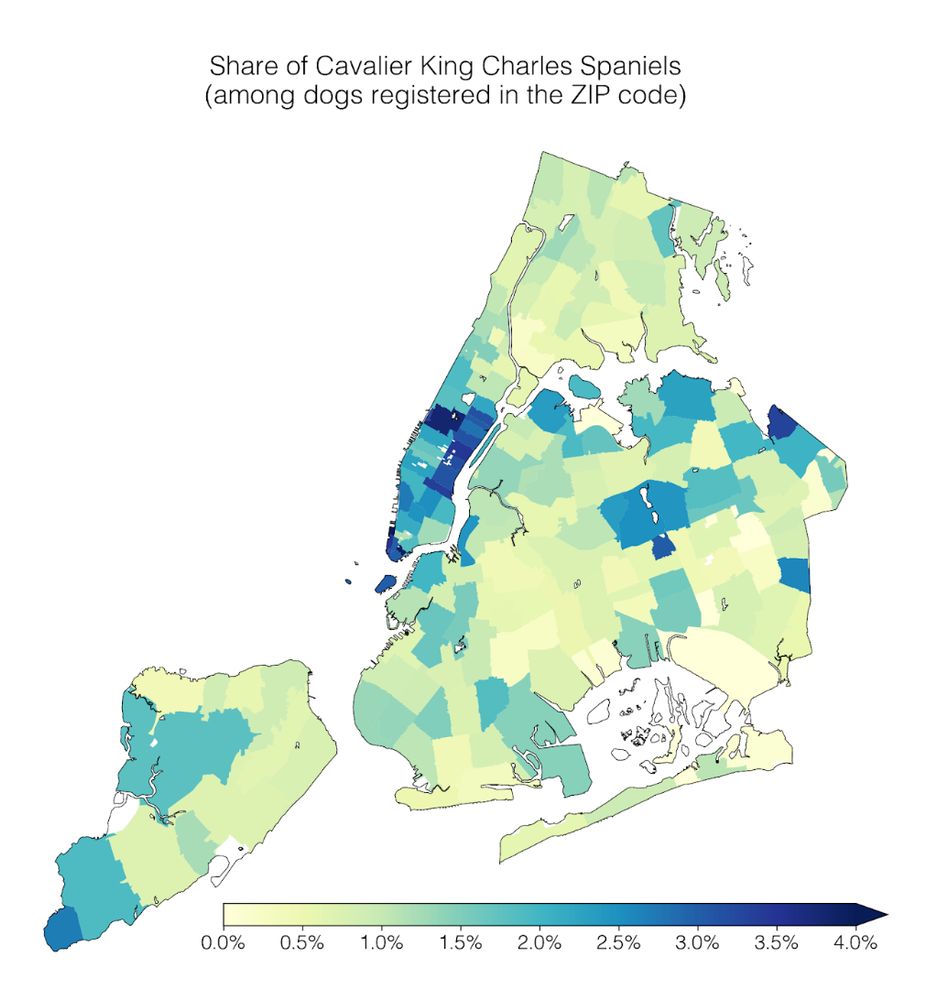

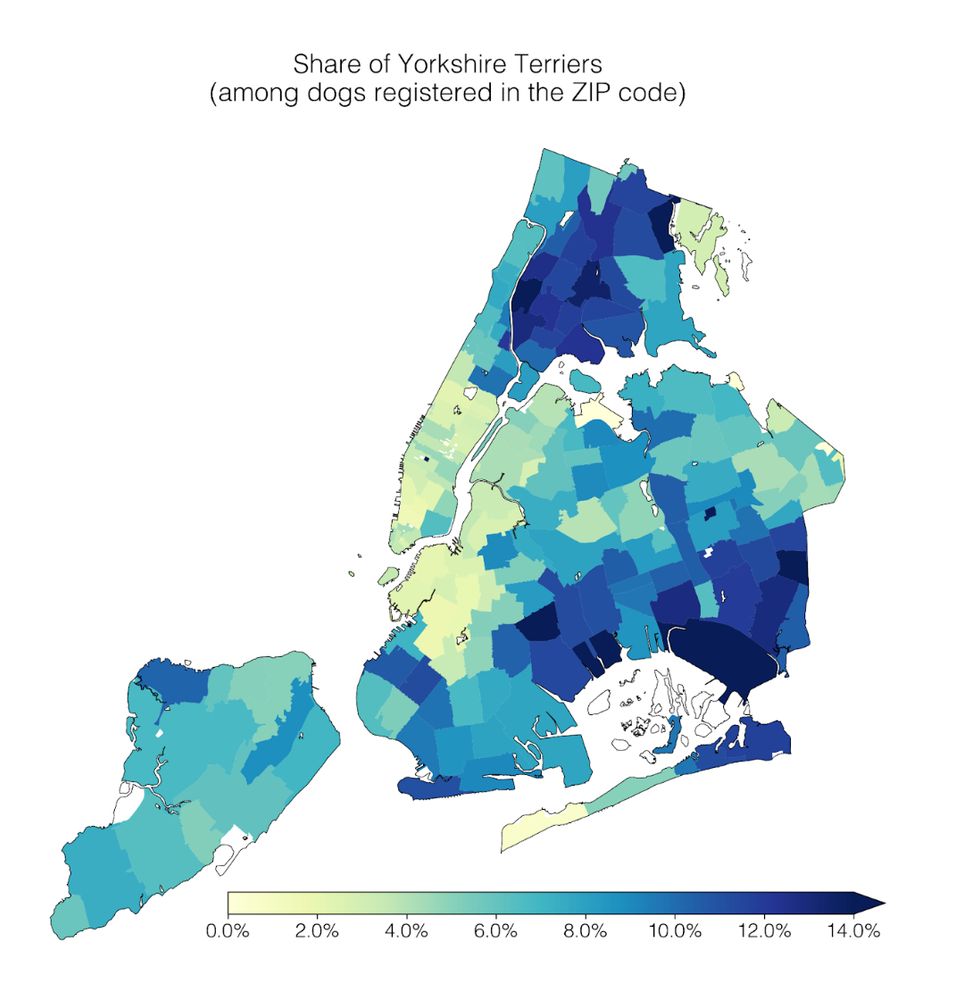

1) Geospatial trends: Cavalier King Charles Spaniels are common in Manhattan, while Yorkshire Terriers are not.

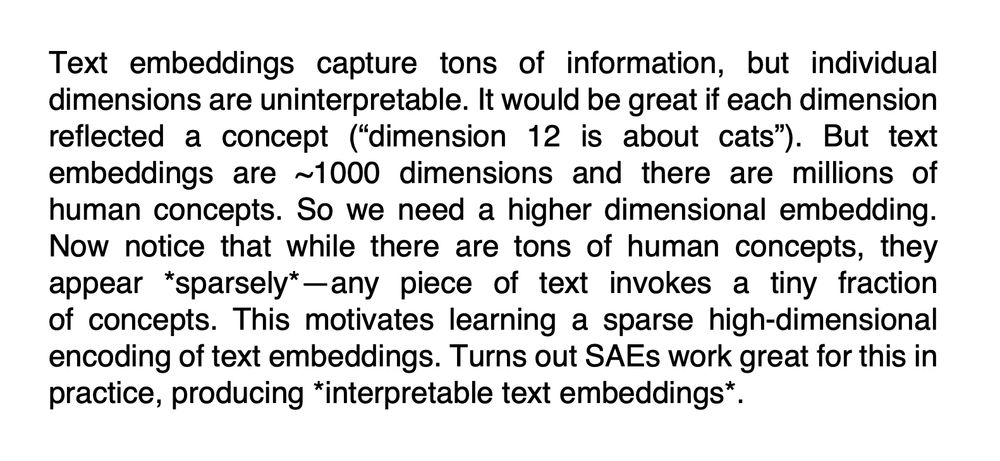

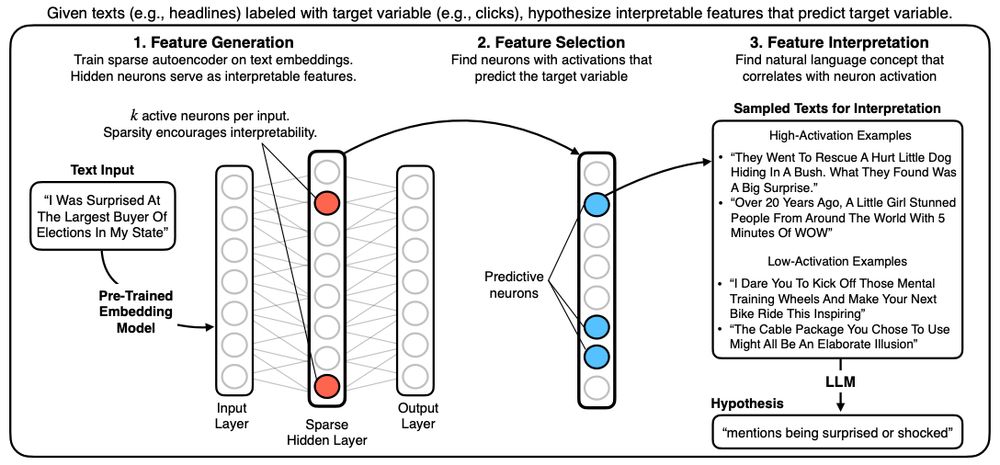

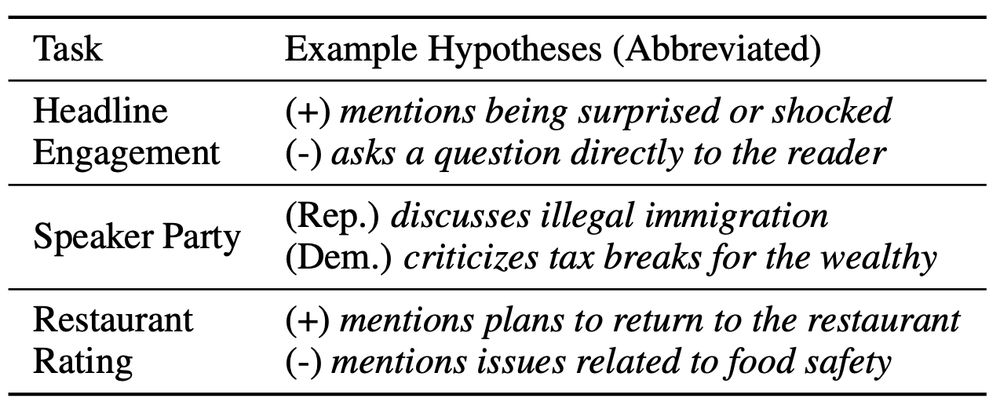

HypotheSAEs generates interpretable features of text data that predict a target variable: which features predict clicks from headlines, party from congressional speeches, or ratings from Yelp reviews?

arxiv.org/abs/2502.04382

(And if you're at #AAAI, I'm presenting at 11:15am today in the Humans and AI session. Poster 12:30-2:30.)

arxiv.org/abs/2411.15230

Poster is in a few hours, come chat!

Wed 11am-2pm | West Ballroom A-D #5505
