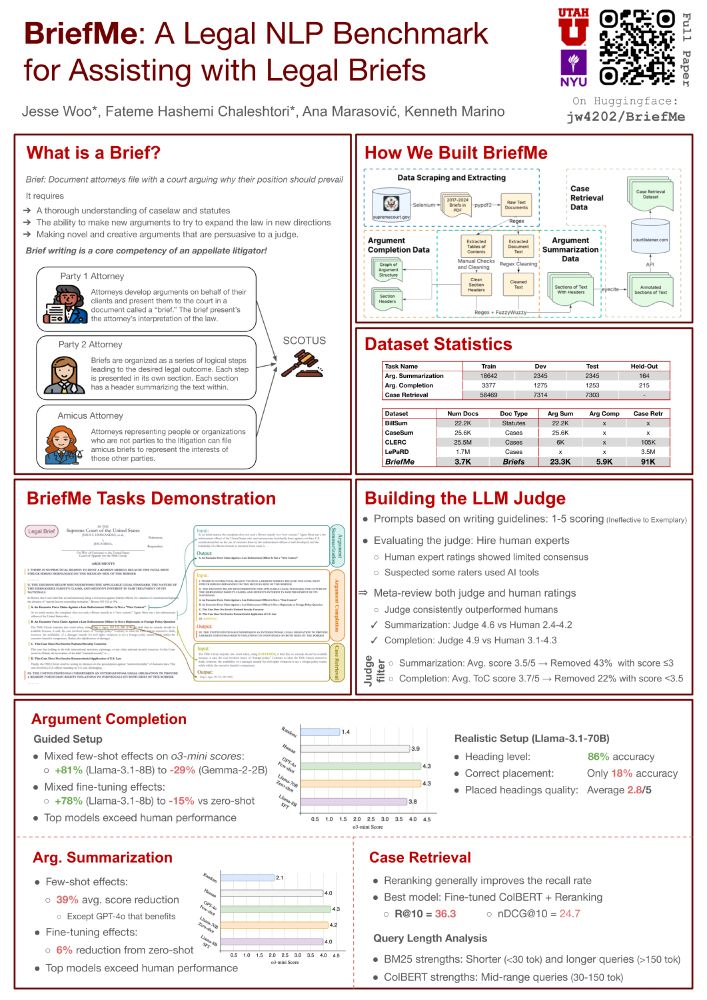

See you at the welcome reception & catch me at the poster session on **Tuesday (July 29) at 10:30am**, where Jesse will present our work introducing new tasks for supporting legal brief writing: arxiv.org/abs/2506.06619

As backstory, Jesse Woo started this project when I taught an ML Datasets class at Columbia.

Then we joined up with @anamarasovic.bsky.social and @fatemehc.bsky.social and really kicked it into high gear. Would not have happened without the full team!

We introduce the first benchmark specifically designed to help LLMs assist lawyers in writing legal briefs 🧑‍⚖️

📄 arxiv.org/abs/2506.06619

🗂️ huggingface.co/datasets/jw4...

We are looking forward to a series of talks on semantic granularity, covering topics such as machine teaching, interpretability and much more!

Room 104 E

Schedule & details: sites.google.com/view/fgvc12

@cvprconference.bsky.social #CVPR25

Randall Balestriero, Kai Han, Mia Chiquier, Kenneth Marino (@kennethmarino.bsky.social), Elisa Ricci, Thomas Fel (@thomasfel.bsky.social)

We evaluate LMs on a cognitive task to find:

- LMs struggle with certain simple causal relationships

- They show biases similar to human adults (but not children)

🧵⬇️

Really proud of this work and of my fantastic colleagues at Google DeepMind who put in so much hard work.

See you all in Singapore!

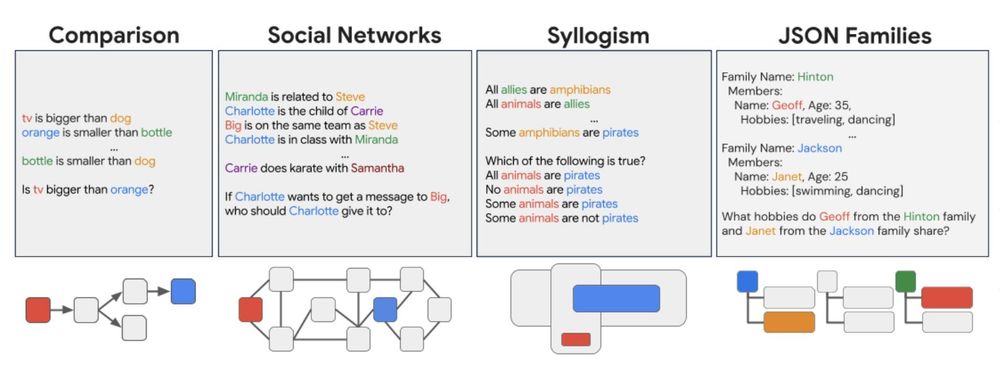

We have a tool for you! ReCogLab, our latest #ICLR work on evaluating long-context/relational reasoning in LLMs!

github.com/google-deepm...

Thread ⬇️

OpenAI had access to all of the FrontierMath data from the beginning, but they verbally agreed that the data would not be used in model training, although there was a legal agreement not to disclose the partnership.

* Treats it as an RL problem

* Trains rather than just prompting

* Beats closed models

* Releases code and model so other people can build off of their work

Many great ideas in this paper too, definitely read

arxiv.org/pdf/2411.02337

Earlier that day:

www.cs.utah.edu/graduate/pro...

Deadline Dec 15

Can’t take a lot of credit, was only an advisor on the project. Gabriel Sarch (PhD candidate at CMU) did a great job on this project; you should come talk to him!

Link to paper: arxiv.org/pdf/2406.14596

Also, I’m hiring PhD students this fall at Utah; there’s still time to apply: www.cs.utah.edu/graduate/pro...

Happy to chat if you’re at the conference!

dippedrusk.com/posts/2024-0...

I don’t like the ICLR continuous back and forth format.

I find it exhausting as both an author and a reviewer.

And the end result is often that reviewers don’t engage very much anyway, so we might as well design it as a single response followed by discussion.

Arboretum: A Large Multimodal Dataset Enabling AI for Biodiversity

arxiv.org/pdf/2406.17720

Truly enormous dataset of animals/plants/fungi. Over 130M images, 300k species.

Truly staggering scale and number of classes. A whole new scale of challenge for fine-grained recognition.
