github.com/yuvenduan/POCO

Read the paper here: arxiv.org/abs/2506.14957

POCO dominates in context-dense predictions based on REAL neural data 🧠

That means at single-cell resolution across entire brains, POCO mimics biological organization purely from neural activity patterns ✨

After pre-training, POCO’s speed & flexibility allow it to adapt to new recordings with minimal fine-tuning, opening the door for real-time applications.

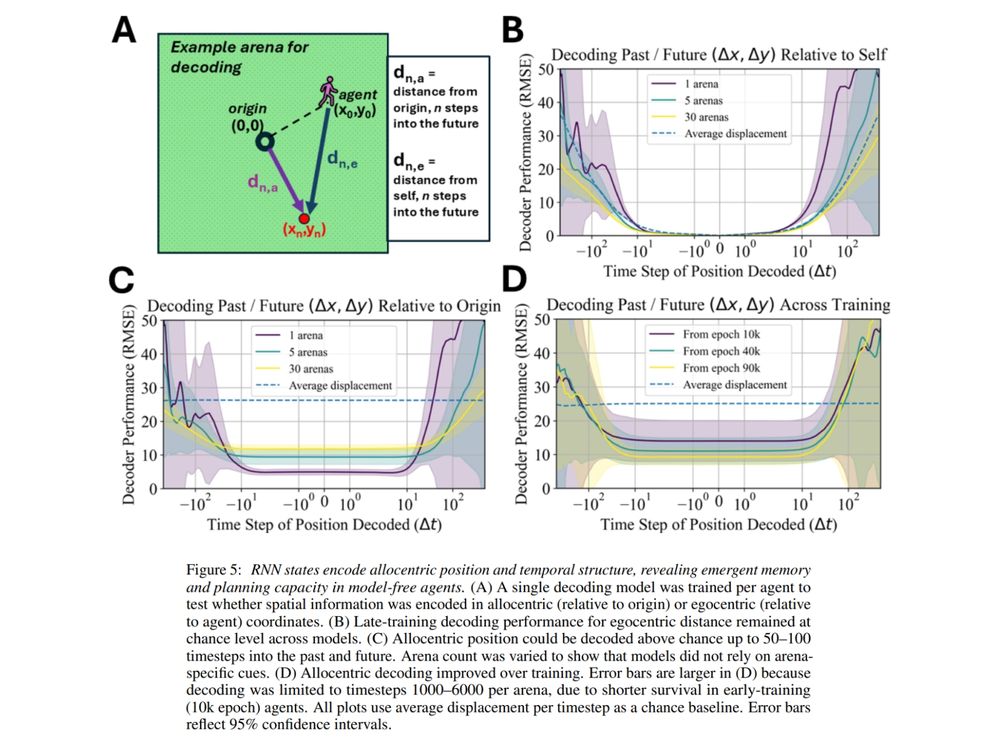

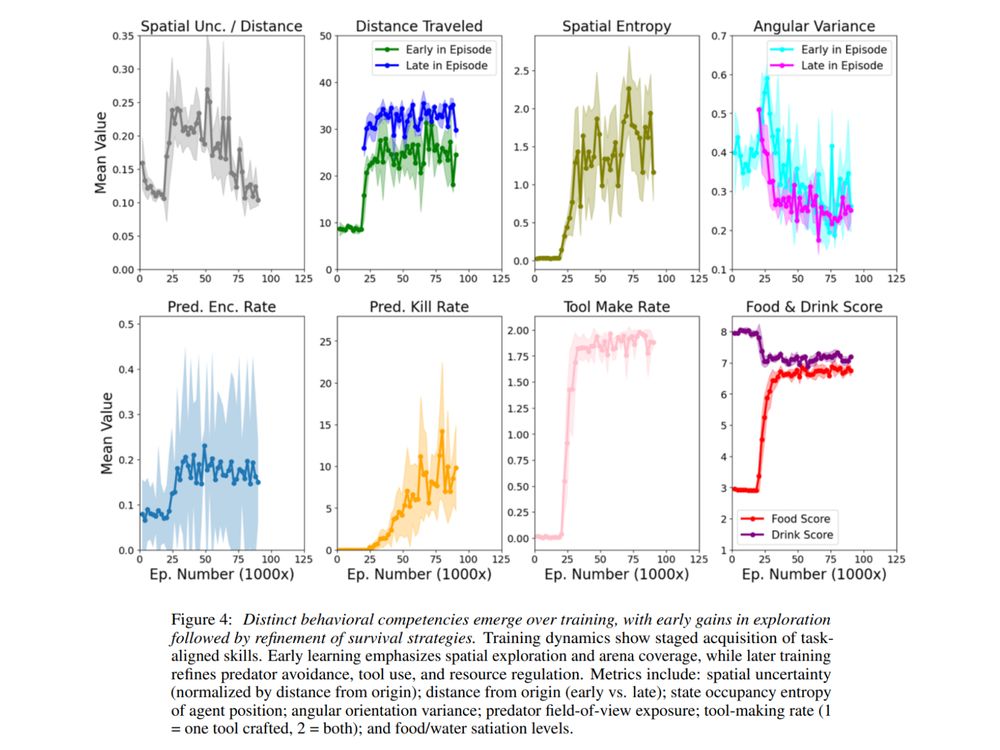

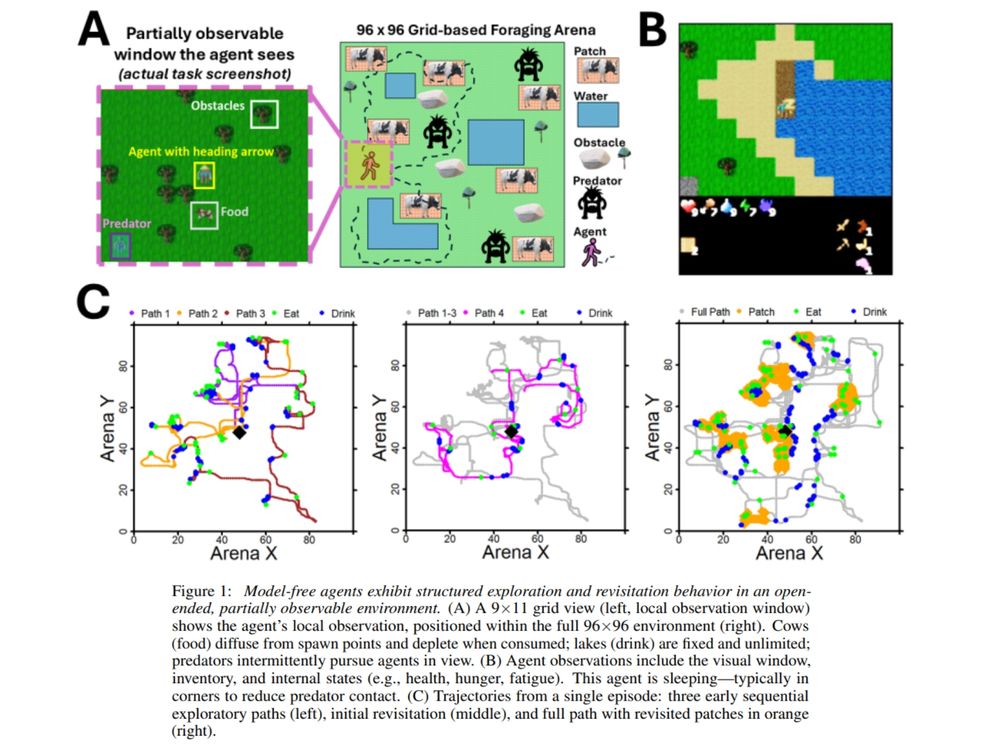

After a quick wander, the agent switches from exploring to visiting patches from memory: revisiting food it has not seen for 500-1000 steps, skirting predator zones & timing resource visits.

The agent can only "see" a small patch around itself, so no bird’s-eye view.
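
To make the limited field of view concrete, here is a minimal sketch of an egocentric observation. It assumes a 2D grid world, which the thread does not specify; the function name `local_view`, the `radius` parameter, and the `pad_value` used for out-of-bounds cells are all hypothetical and not taken from the project.

```python
import numpy as np

def local_view(grid, row, col, radius=2, pad_value=-1):
    """Return the square window of side 2*radius+1 centred on the agent.

    Assumption: the world is a 2D array; cells beyond the border are
    filled with pad_value so the window always has the same shape.
    """
    # Pad every side by `radius` so windows near the border stay in bounds.
    padded = np.pad(grid, radius, mode="constant", constant_values=pad_value)
    size = 2 * radius + 1
    # In padded coordinates the agent sits at (row + radius, col + radius),
    # so the window's top-left corner is (row, col).
    return padded[row:row + size, col:col + size]

# Hypothetical 6x6 world; the agent stands in the top-left corner.
world = np.arange(36).reshape(6, 6)
view = local_view(world, row=0, col=0, radius=1)
```

Everything outside `view` is invisible to the agent at that step, which is why returning to a distant patch has to rely on memory rather than on the current observation.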
