Webpage🌐: model-similarity.github.io

Code🧑‍💻: github.com/model-simila...

Data📂: huggingface.co/datasets/bet...

Joint work with Shashwat Goel, Ilze Auzina, Karuna Chandra, @bayesiankitten.bsky.social, @pkprofgiri.bsky.social, Douwe Kiela, Matthias Bethge, and Jonas Geiping.

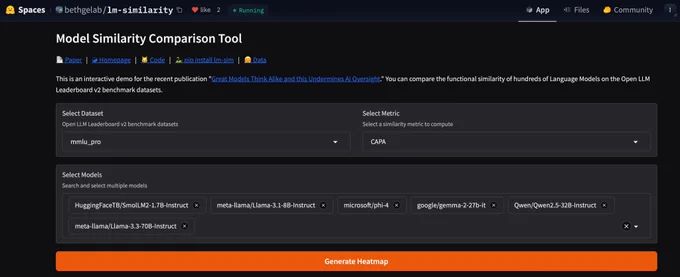

space huggingface.co/spaces/bethg..., thanks to sample-level predictions on OpenLLMLeaderboard. Or run `pip install lm-sim`. We welcome open-source contributions!

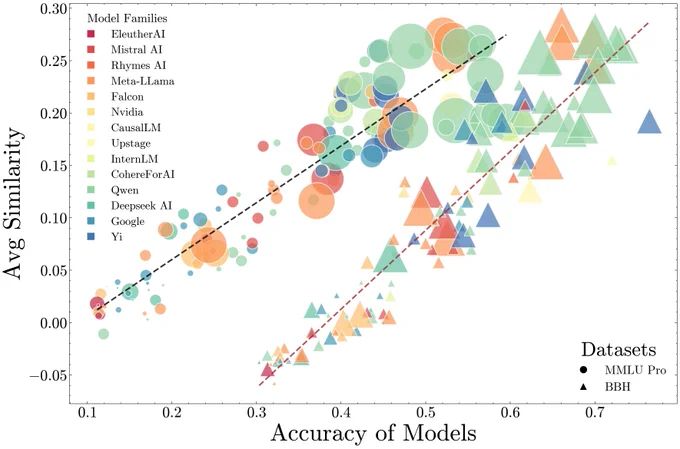

Appendix: Slightly steeper slope for instruct models, and switching architecture to Mamba doesn't reduce similarity!

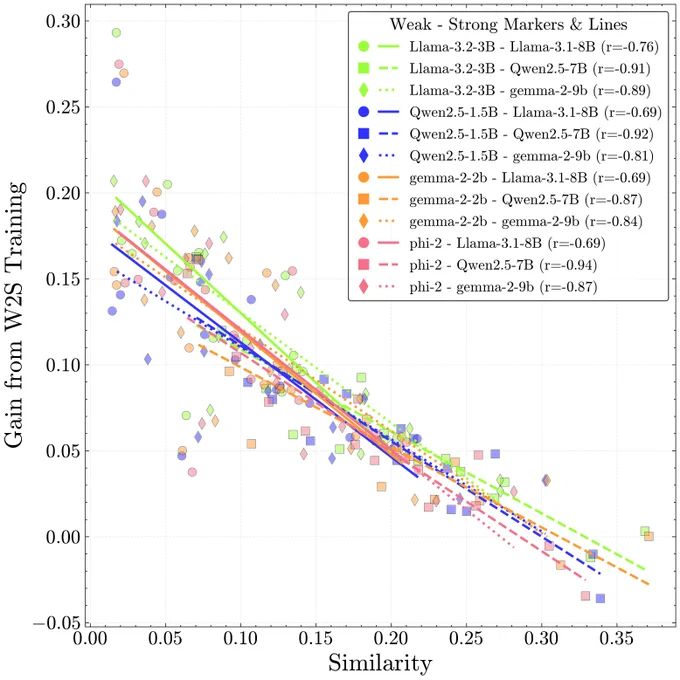

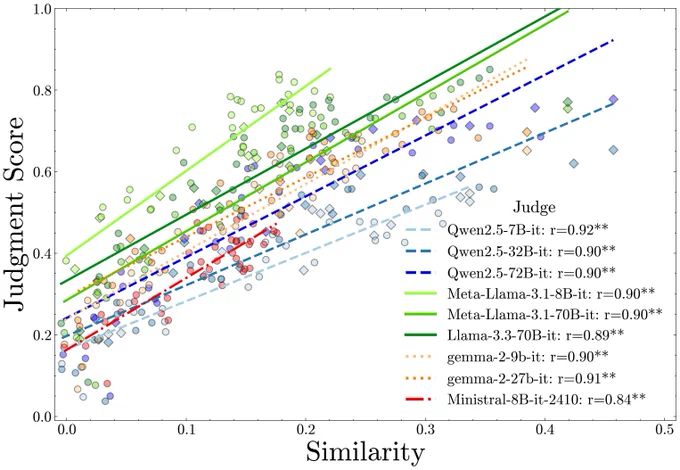

💡Similar models make similar mistakes.

❗Two models with 90% accuracy have less scope to disagree than two models with 50% accuracy. We adjust for the chance agreement that accuracy alone produces.

Taking this into account, we propose a new metric: Chance Adjusted Probabilistic Agreement (CAPA).
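
To make the chance-agreement point concrete, here is a minimal sketch of a kappa-style adjustment on binary correct/incorrect outcomes. It is a simplified illustration and not the CAPA formula itself, which this thread does not spell out (and which, as the name says, is probabilistic). The function names are hypothetical, and the sketch assumes the two models' errors would be independent under chance.

```python
def expected_agreement(acc_a: float, acc_b: float) -> float:
    """Agreement expected by chance if the two models erred independently.

    They agree when both are right or both are wrong.
    """
    return acc_a * acc_b + (1 - acc_a) * (1 - acc_b)


def chance_adjusted_agreement(correct_a, correct_b) -> float:
    """Kappa-style score: (observed - expected) / (1 - expected).

    correct_a, correct_b: equal-length sequences of booleans, one entry per
    sample, True when that model answered the sample correctly.
    """
    n = len(correct_a)
    if n == 0 or n != len(correct_b):
        raise ValueError("inputs must be non-empty and of equal length")

    acc_a = sum(correct_a) / n
    acc_b = sum(correct_b) / n
    observed = sum(a == b for a, b in zip(correct_a, correct_b)) / n
    expected = expected_agreement(acc_a, acc_b)

    # If both models are always right (or always wrong), there is no room
    # to disagree and the ratio is undefined; return 0.0 by convention here.
    if 1 - expected < 1e-12:
        return 0.0
    return (observed - expected) / (1 - expected)


# Two 90% models agree by chance far more often than two 50% models.
high = expected_agreement(0.9, 0.9)  # roughly 0.82
low = expected_agreement(0.5, 0.5)   # 0.5
```

Under this sketch, two 90% models that agree on 85% of samples are barely more similar than chance predicts, while two 50% models with the same raw agreement are strongly similar.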
